Tutorials

How to use ComfyUI API nodes

ComfyUI is known for running local image and video AI models. Recently, it added support for running proprietary closed models …

Clone Your Voice Using AI (ComfyUI)

Have you ever wondered how those deepfakes of celebrities like Mr. Beast were able to clone their voices? Well, those …

How to run Wan VACE video-to-video in ComfyUI

WAN 2.1 VACE (Video All-in-One Creation and Editing) is a video generation and editing AI model that you can run …

Wan VACE ComfyUI reference-to-video tutorial

WAN 2.1 VACE (Video All-in-One Creation and Editing) is a video generation and editing model developed by the Alibaba team …

How to run LTX Video 13B on ComfyUI (image-to-video)

LTX Video is a popular local AI model known for its generation speed and low VRAM usage. The LTXV-13B model …

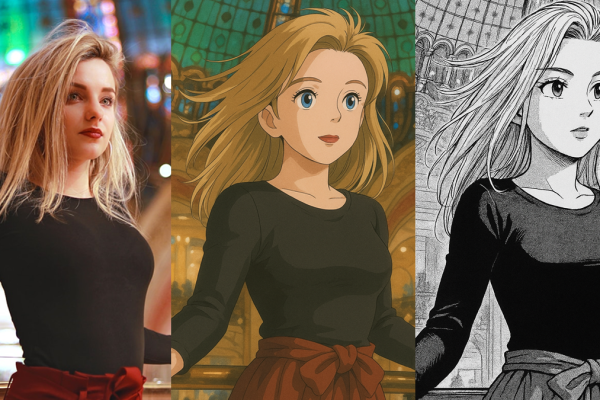

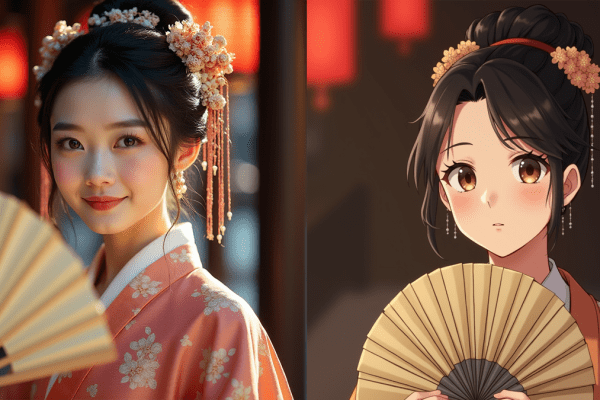

Stylize photos with ChatGPT

Did you know you can use ChatGPT to stylize photos? This free, straightforward method yields impressive results. In this tutorial, …

How to generate OmniHuman-1 lip sync video

Lip sync is notoriously tricky to get right with AI because we naturally talk with body movement. OmniHuman-1 is a …

How to create FramePack videos on Google Colab

FramePack is a video generation method that allows you to create long AI videos with limited VRAM. If you don’t …

How to create videos with Google Veo 2

You can now use Veo 2, Google’s AI-powered video generation model, on Google AI Studio. It supports text-to-video and, more …

FramePack: long AI video with low VRAM

FramePack is a video generation method that needs only about 6 GB of VRAM, regardless of the video length. It supports image-to-video, …

Speeding up Hunyuan Video 3x with TeaCache

Hunyuan Video is one of the highest-quality video models that can run on a local PC …

How to speed up Wan 2.1 Video with TeaCache and Sage Attention

Wan 2.1 Video is a state-of-the-art AI model that you can use locally on your PC. However, it does take …

How to use Wan 2.1 LoRA to rotate and inflate characters

Wan 2.1 Video is a generative AI video model that produces high-quality video on consumer-grade computers. Remade AI, an AI …

How to use LTX Video 0.9.5 on ComfyUI

LTX Video 0.9.5 is an improved version of the LTX local video model. The model is very fast — it …

How to run Hunyuan Image-to-video on ComfyUI

The Hunyuan Video model has been a huge hit in the open-source AI community. It can generate high-quality videos from …

How to run Wan 2.1 Video on ComfyUI

Wan 2.1 Video is a series of open foundational video models. It supports a wide range of video-generation tasks. It …

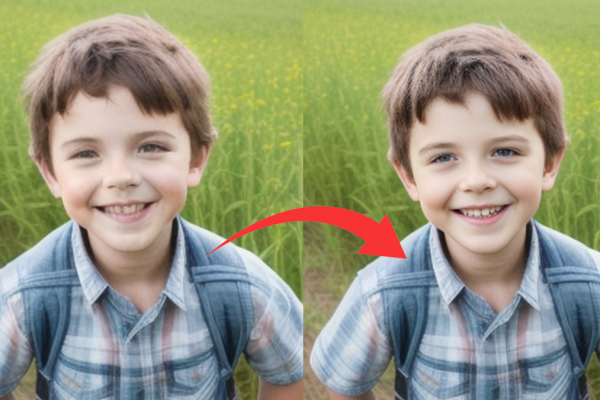

CodeFormer: Enhancing facial detail in ComfyUI

CodeFormer is a robust face restoration tool that enhances facial features, making them more realistic and detailed. Integrating CodeFormer into …

3 ways to fix Queue button missing in ComfyUI

Sometimes, the “Queue” button disappears in my ComfyUI for no reason. It may be due to glitches in the updated …

How to run Lumina Image 2.0 on ComfyUI

Lumina Image 2.0 is an open-source AI model that generates images from text descriptions. It excels in artistic styles and …

TeaCache: 2x speed up in ComfyUI

Do you wish AI would run faster on your PC? TeaCache can speed up diffusion models with negligible changes in …

How to use Hunyuan video LoRA to create consistent characters

Low-Rank Adaptation (LoRA) has emerged as a game-changing technique for fine-tuning image models like Flux and Stable Diffusion. By focusing …

How to remove background in ComfyUI

Background removal is an essential tool for digital artists and graphic designers. It cuts clutter and enhances focus. You can …

How to direct Hunyuan video with an image

Hunyuan Video is a local video model that turns a text description into a video. But what if you want …

How to generate Hunyuan video on ComfyUI

Hunyuan Video is a new local and open-source video model with exceptional quality. It can generate a short video clip …

How to use image prompts with Flux model (Redux)

Images speak volumes. They express what words cannot capture, such as style and mood. That’s why the Image prompt adapter …

How to outpaint with Flux Fill model

The Flux Fill model is an excellent choice for inpainting. Did you know it works equally well for outpainting (extending …

Fast local video: LTX Video

LTX Video is a fast, local video AI model full of potential. The Diffusion Transformer (DiT) Video model supports generating …

How to use Flux.1 Fill model for inpainting

Using the standard Flux checkpoint for inpainting is not ideal. You must carefully adjust the denoising strength. Setting it too …

Local image-to-video with CogVideoX

Local AI video has come a long way since the release of the first local video model. The quality is …

How to run Mochi1 text-to-video on ComfyUI

Mochi1 is one of the best video AI models you can run locally on a PC. It turns your text …

Stable Diffusion 3.5 Medium model on ComfyUI

Stable Diffusion 3.5 Medium is an AI image model that runs on consumer-grade GPU cards. It has 2.6 billion parameters, …

How to use Flux LoRA on ComfyUI

Flux is a state-of-the-art image model. It excels in generating realistic photos and following the prompt, but some styles can …

How to install Stable Diffusion 3.5 Large model on ComfyUI

Our old friend Stability AI has released the Stable Diffusion 3.5 Large model and a faster Turbo variant. Attempting to …

Flux AI: A Beginner-Friendly Overview

Since the release of Flux.1 AI models on August 1, 2024, we have seen a flurry of activity around it …

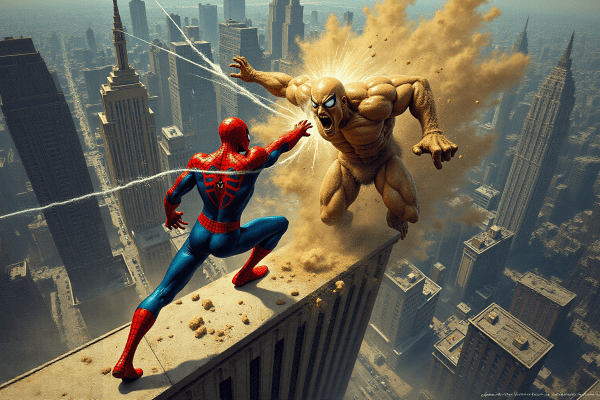

SDXL vs Flux1.dev models comparison

SDXL and Flux1.dev are two popular local AI image models. Both have a relatively high native resolution of 1024×1024. Currently, …

How to run ComfyUI on Google Colab

ComfyUI is a popular way to run local Stable Diffusion and Flux AI image models. It is a great complement …

How to run SD Forge WebUI on Google Colab

Stable Diffusion Forge WebUI has emerged as a popular way to run Stable Diffusion and Flux AI image models. It …

How to direct motion in Kling AI video

Kling AI is one of the best online video generators that can turn any image into a video. Although Kling …

How to train Flux LoRA models

The Flux Dev AI model is a great leap forward in local diffusion models. It delivers quality surpassing Stable Diffusion …

CogVideoX 5B: High-quality local video generator

CogVideoX is a state-of-the-art AI video generator similar to Kling, except you can generate the video locally on your PC …

How to use ControlNet with Flux AI model

ControlNet is an indispensable tool for controlling the precise composition of AI images. You can use ControlNet to specify human …

How to use preset styles for Flux and Stable Diffusion

Style presets are commonly used styles for Stable Diffusion and Flux AI models. You can use them to quickly apply …

How to use Flux AI model on Mac

The Flux AI model is the highest-quality open-source text-to-image AI model you can run locally without online censorship. However, being …

How to generate free AI videos with MiniMax AI

Chinese tech company MiniMax AI has released a new text-to-video tool called Video-01 that can turn text into short, high-quality …

How to install ComfyUI Manager

ComfyUI is a popular, open-source user interface for Stable Diffusion, Flux, and other AI image and video generators. It makes …

How to use Flux LoRA on Forge

LoRA models are essential tools to supplement the Flux AI checkpoint models. They can enable Flux to generate content it …

How to use Kling text-to-video and image-to-video

Kling is a state-of-the-art AI video generator. It takes a text or an image prompt to generate a short video …

Pony Diffusion XL v6 prompt tags

Pony Diffusion XL v6 is a versatile Stable Diffusion model for artistic creation. Many SD users have a lot of …

Img2img and inpainting with Flux AI model

Image-to-image allows you to generate a new image based on an existing one. Inpainting lets you regenerate a small area …

How to run Flux AI with low VRAM

Flux AI is the best open-source AI image generator you can run locally on your PC (as of August 2024) …

How to install Flux AI model on ComfyUI

Flux is a family of text-to-image diffusion models developed by Black Forest Labs. As of Aug 2024, it is the …

Pony Diffusion v6 XL

Pony Diffusion v6 XL is a Stable Diffusion model for generating stunning visuals of humans, horses, and anything in between …

How to generate consistent style with Stable Diffusion using Style Aligned and Reference ControlNet

Generating images with a consistent style is a valuable technique in Stable Diffusion for creative works like logos or book …

How to run Stable Diffusion 3 locally

You can now run the Stable Diffusion 3 Medium model locally on your machine. As of the time of writing, …

How to run ComfyUI, A1111, and Forge on AWS

Running Stable Diffusion in the cloud (AWS) has many advantages. You rent the hardware on-demand and only pay for the …

Self-Attention Guidance: Improve image background

Self-attention Guidance (SAG) enhances details in an image while preserving the overall composition. It is useful for fixing nonsensical details …

How to create a consistent character from different viewing angles

Do you ever need to create consistent AI characters from different viewing angles? The method in this article makes a …

Hyper-SD and Hyper-SDXL fast models

Hyper-SD and Hyper-SDXL are distilled Stable Diffusion models that claim to generate high-quality images in 1 to 8 steps. We …

Perturbed Attention Guidance

Perturbed Attention Guidance is a simple modification to the sampling process to enhance your Stable Diffusion images. I will cover: …

Align Your Steps: How-to guide and review

Align Your Steps (AYS) is a change in the sampling process proposed by the Nvidia team to solve the reverse …

Stable Diffusion 3: A comparison with SDXL and Stable Cascade

Stable Diffusion 3 is the latest and largest Stable Diffusion image model. It promises to outperform previous models like Stable …

How to use Stable Diffusion 3 API

Stable Diffusion 3 is the latest text-to-image model by Stability AI. It promises to outperform previous models like Stable Cascade …

How to generate SVD video with SD Forge

You can use Stable Diffusion WebUI Forge to generate Stable Video Diffusion (SVD) videos. SD Forge provides an interface to …

Stable Video 3D: Orbital view based on a single image

Stable Video 3D (SV3D) is a generative AI model that creates a 3D orbital view using a single image input …

Generate images with transparent backgrounds with Stable Diffusion

An image with a transparent background is useful for downstream design work. You can use the image with different backgrounds …

How to use Soft Inpainting

Soft inpainting seamlessly blends the original and the inpainted content. You can avoid hard boundaries in a complex scene by …

How to fix hands in Stable Diffusion

Stable Diffusion has a hand problem. It is pretty common to see deformed hands or missing/extra fingers. In this article, …

Stable Diffusion 3

Stable Diffusion 3 has been announced, followed by a research paper detailing the model. The model is not publicly available yet, …

Stable Cascade Model

Stable Cascade is a new text-to-image model released by Stability AI, the creator of Stable Diffusion. It features higher image …

How to install SD Forge

Stable Diffusion WebUI Forge (SD Forge) is an alternative version of Stable Diffusion WebUI that features faster image generation for …

How to use InstantID to copy faces

Looking for a way to put someone in Stable Diffusion? InstantID is a Stable Diffusion addon for copying a face …

IP-Adapters: All you need to know

IP-Adapter (Image Prompt adapter) is a Stable Diffusion add-on for using images as prompts, similar to Midjourney and DALL·E 3 …