Stable Diffusion 3 is the latest and largest image Stable Diffusion model. It promises to outperform previous models like Stable Cascade and Stable Diffusion XL in text generation and prompt following.

In this post, I will compare the Stable Diffusion 3 model with the Stable Cascade and XL model from a user’s perspective. The following areas are tested:

- Text rendering

- Prompt following

- Rendering Faces

- Rendering Hands

- Styles

Table of Contents

Using Stable Diffusion 3

Stable Diffusion 3 is currently available through Stability’s developer API. You can find instructions to use it in this post.

Read this post for an overview of the Stable Diffusion 3 model.

Text rendering

Generating legible text on images has long been challenging for AI image generators. Stable Diffusion 1.5 sucks at it. Stable Diffusion XL is an improvement. Stable Cascade is a quantum leap.

The good news is Stable Diffusion 3’s text generation is at the next level.

Let’s test the three models with the following prompt, which intends to generate a challenging text.

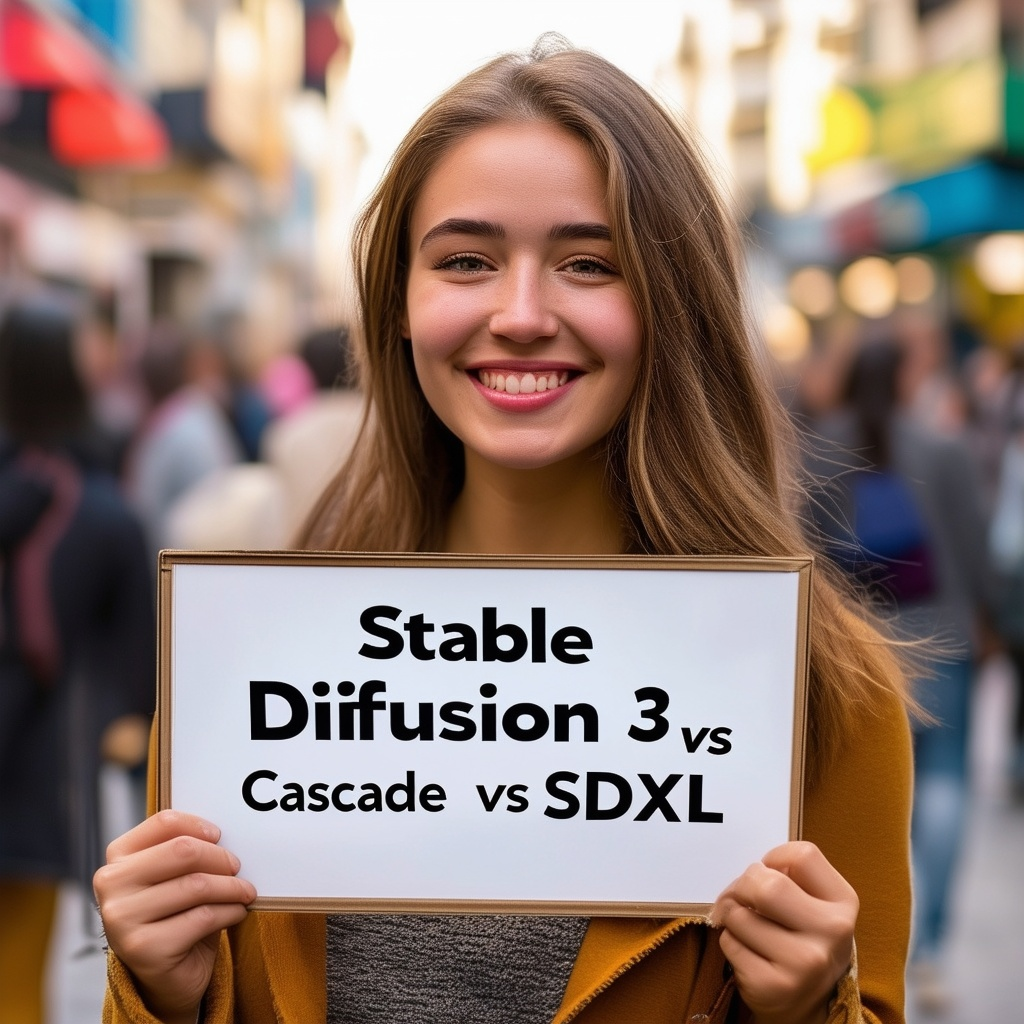

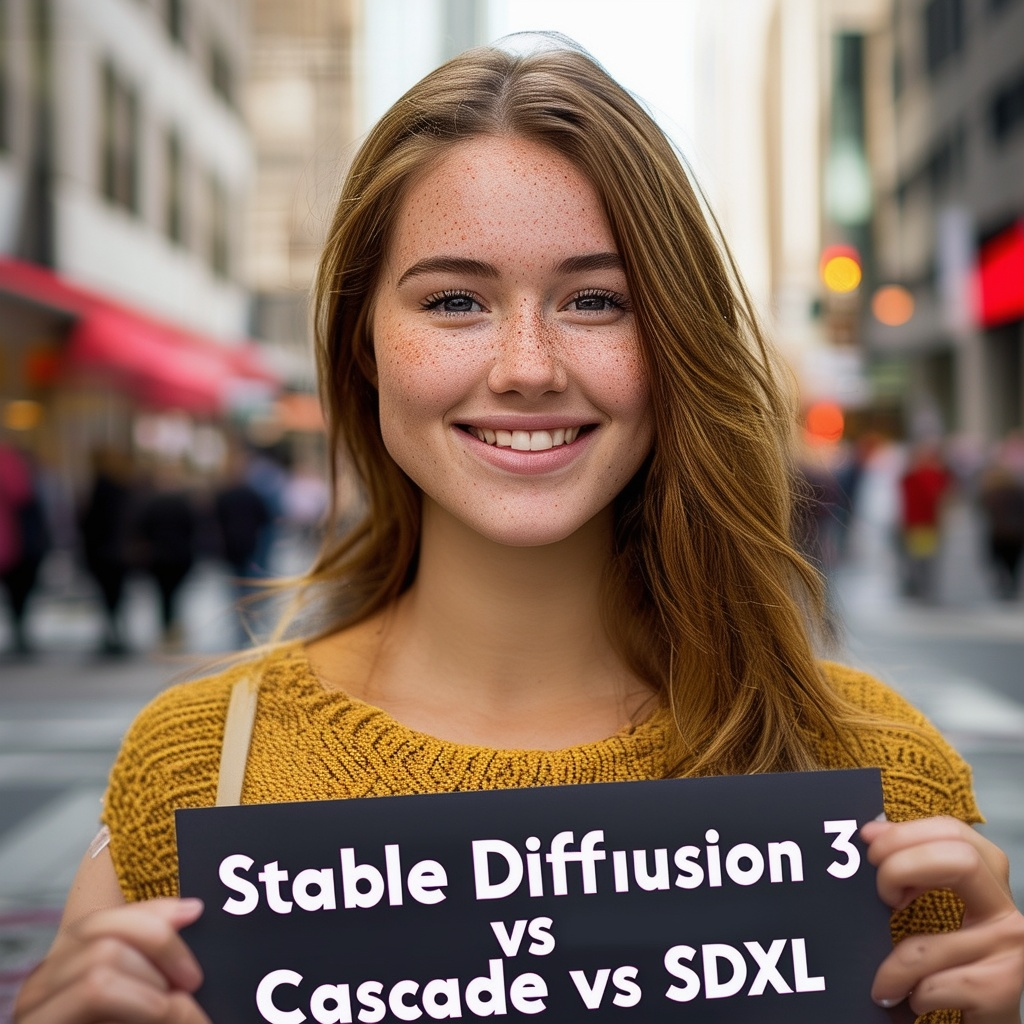

a portrait photo of a 25-year old beautiful woman, busy street street, smiling, holding a sign “Stable Diffusion 3 vs Cascade vs SDXL”

Here are images from the SDXL model. You can see the text generation is far from being correct.

The Stable Cascade generates clearer text but the result is far from satisfactory.

Finally, Stable Diffusion 3 performs the best in text generation, representing the best text generation model in the Stable Diffusion series so far.

Ideas for text generation

What can you do with this new capability? You can now experiment with funny signs.

City street, a sign on a property that says “No trespassing. We are tired of hiding the bodies”

We need ControlNet to generate text effect images in Stable Diffusion 1.5 and XL. Not anymore in SD3!

The word “Fire” made of fire and lava, high temperature

Or now you can play with longer texts.

The sentence “Let’s snow somewhere else” made of snow, mountain

Prompt-following

Prompt-following is touted as a major improvement.

I will challenge the prompt-following ability of the three Stable Diffusion models in the following:

- Controlling poses

- Object compositions

Controlling poses

Prompt:

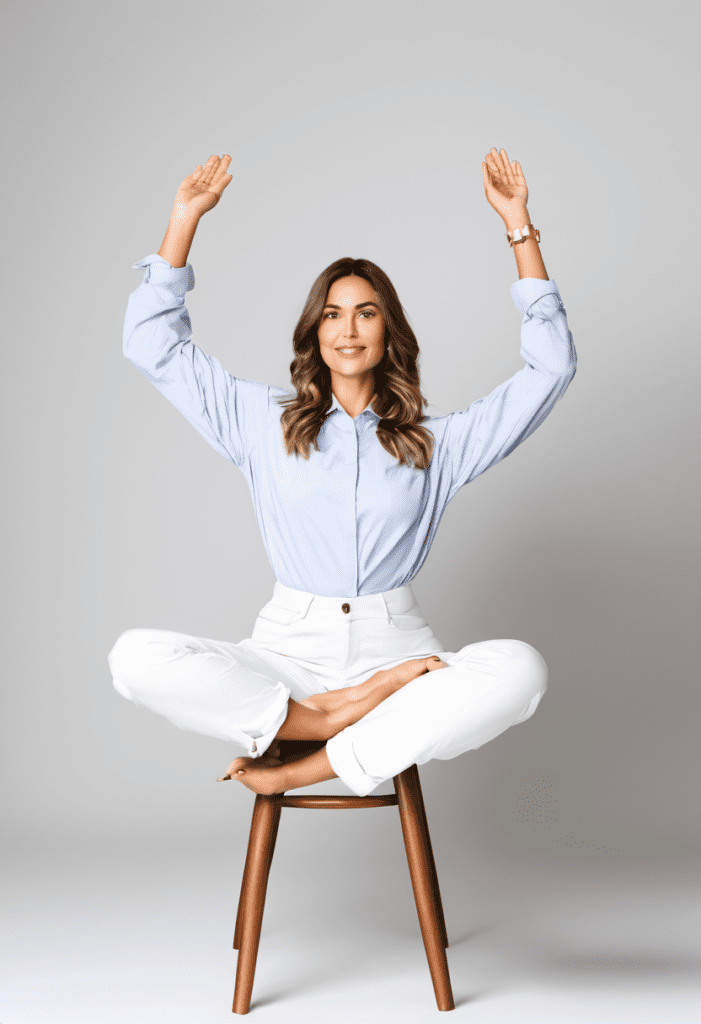

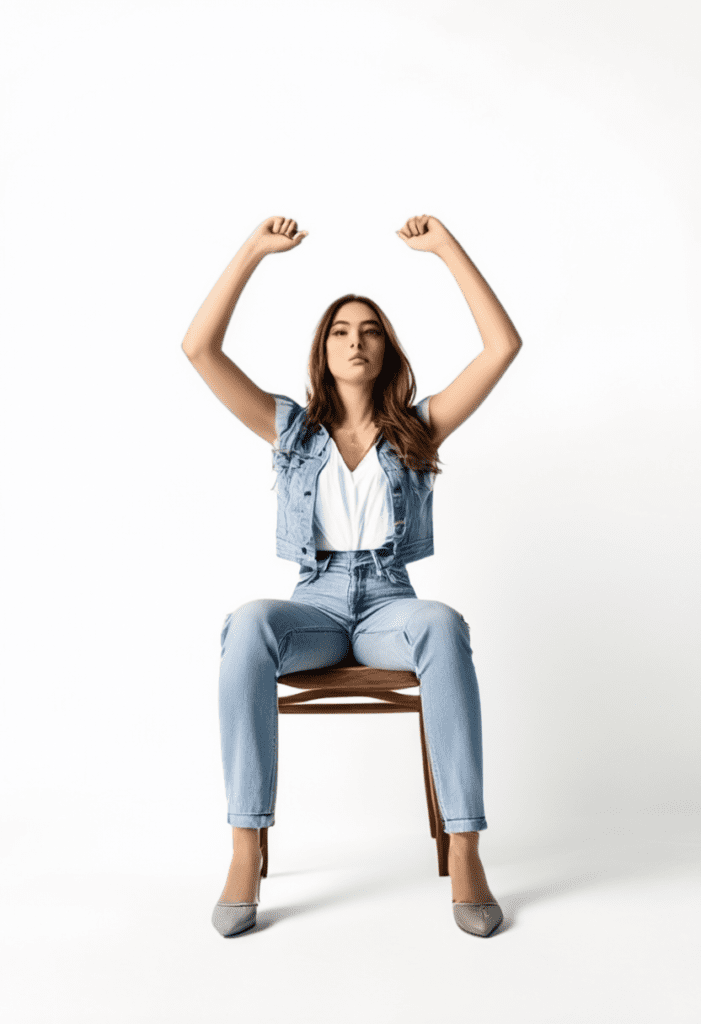

Photo of a woman sitting on a chair with both hands above her head, white background

Negative prompt:

disfigured, deformed, ugly, detailed face

Below are the images from the SDXL model.

Below are the images from the Stable Cascade model.

And finally, the Stable Diffusion 3 model.

All three models do pretty well with this human pose. Perhaps it is not challenging enough? Here are some observations.

- It takes less cherry-picking for SD3 to get good images. (It better be because I am paying for each image generation through the API!)

- The images from SD3 are strangely uniform – All in an all-black outfit. Perhaps the model is a bit overcooked? I’m sure a fine-tuned model can fix that.

- To SD3’s credit, its images

Maybe this pose is not challenging enough? Let’s try another one:

Photo of a boy looking up and raise one arm, stand on one foot, white background

SDXL couldn’t generate that exact pose we asked for, but its half correct.

Stable Cascade is more correct. There is a higher chance that the kid is raising one hand.

Stable Diffusion 3 gets the hand part correct most of the time, although it is still not standing on one foot.

Conclusion: In many cases, the accuracy of human poses of Stable Diffusion 3 is similar to SDXL and Cascade. For challenging poses, Stable Diffusion 3 has an edge over the other two.

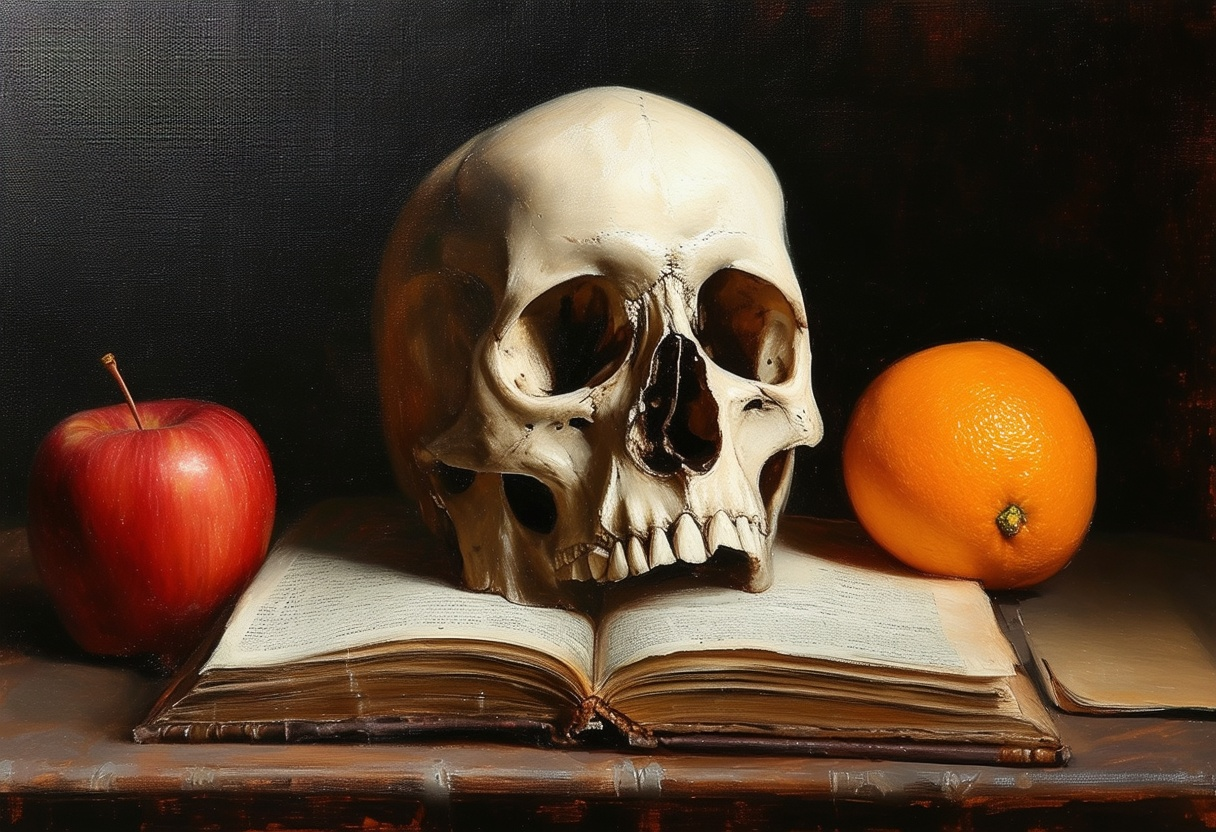

Object composition

The next test of objection composition: How well does the model follow the object placement.

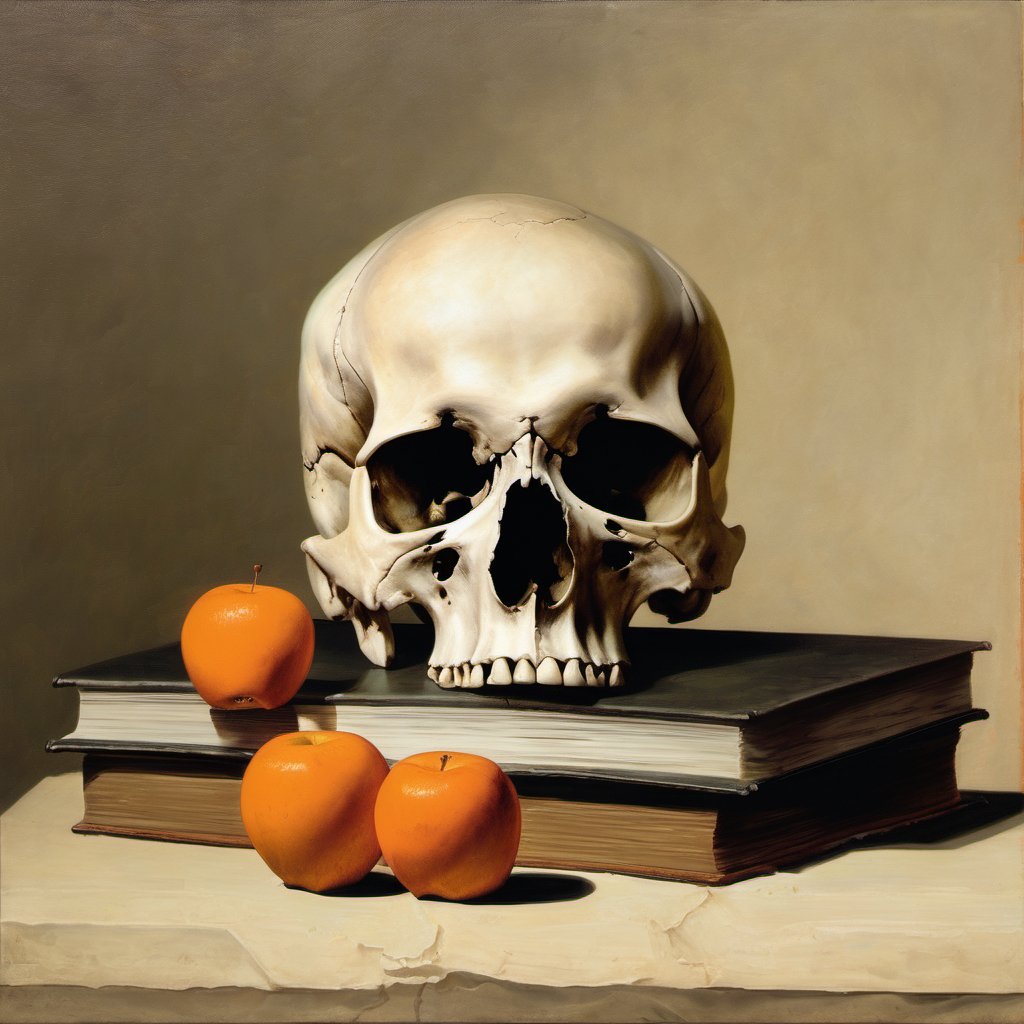

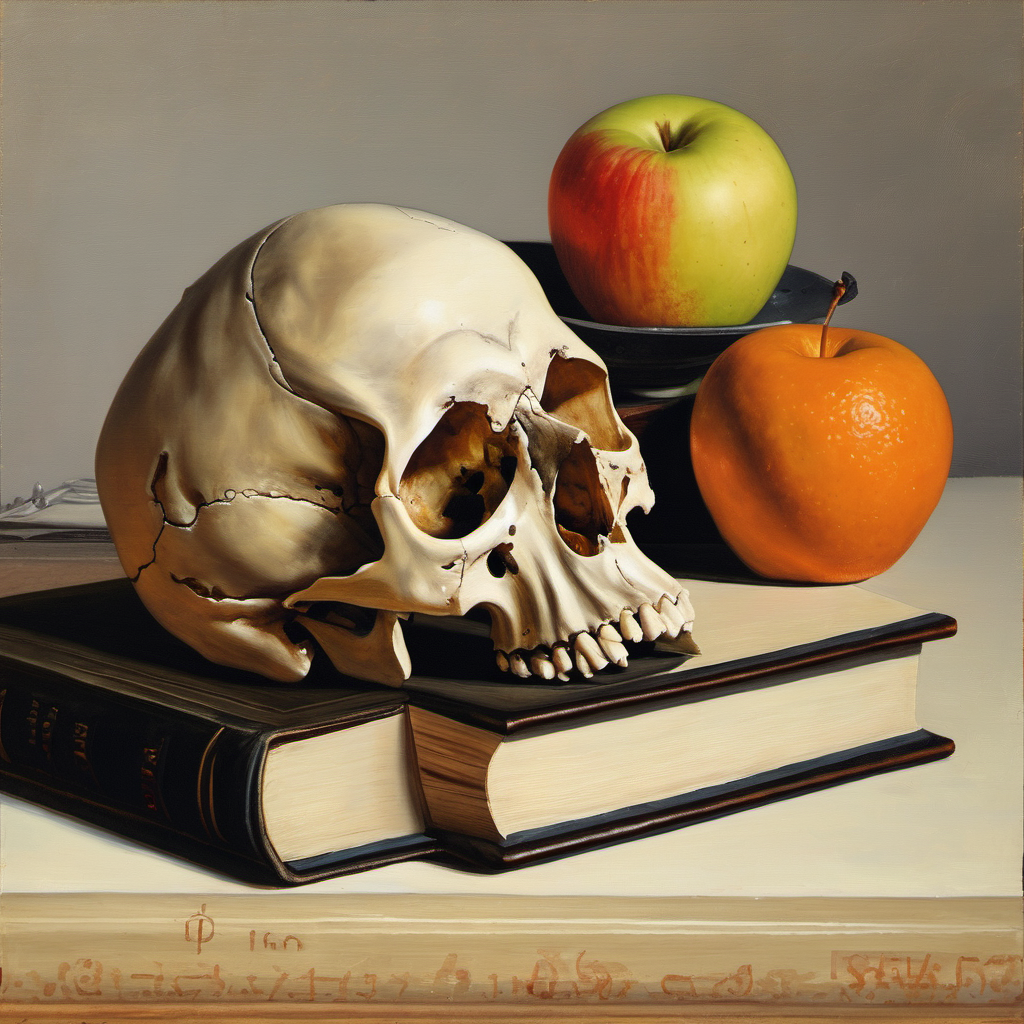

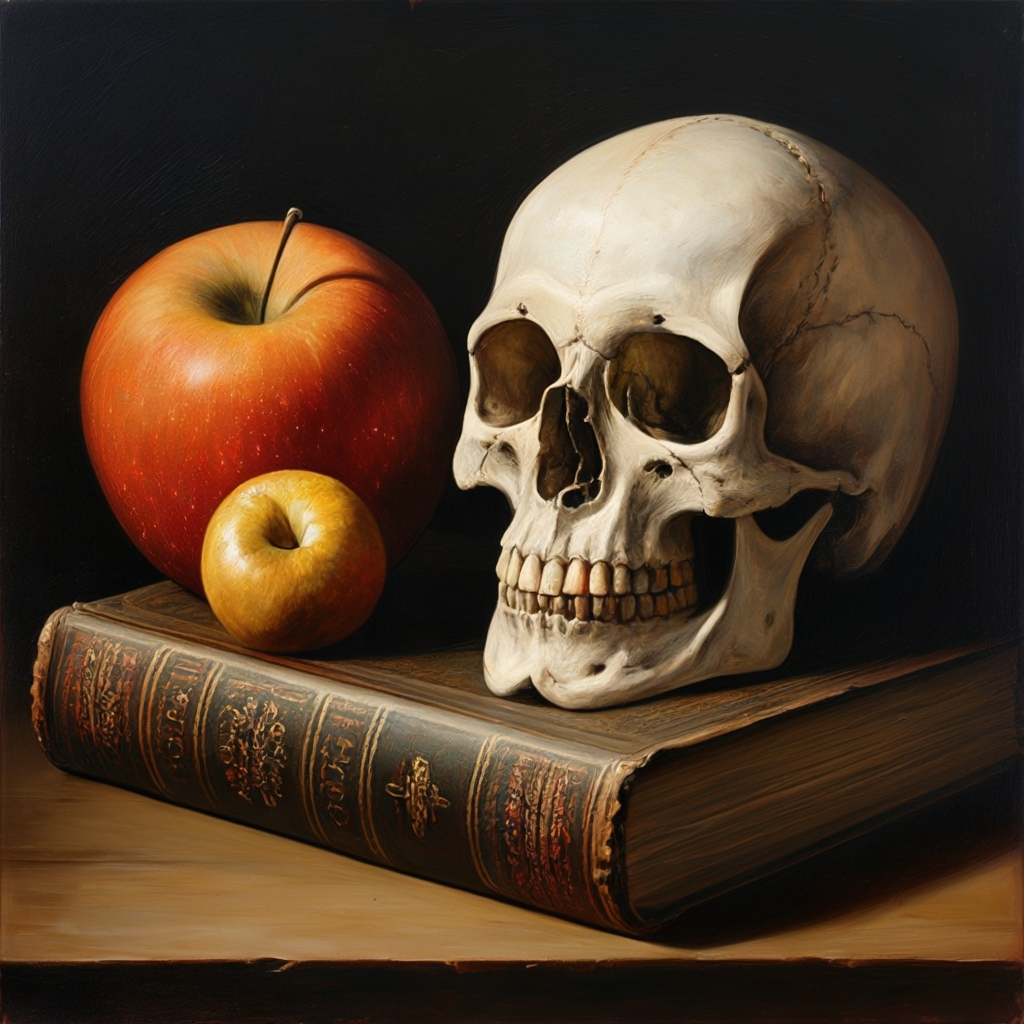

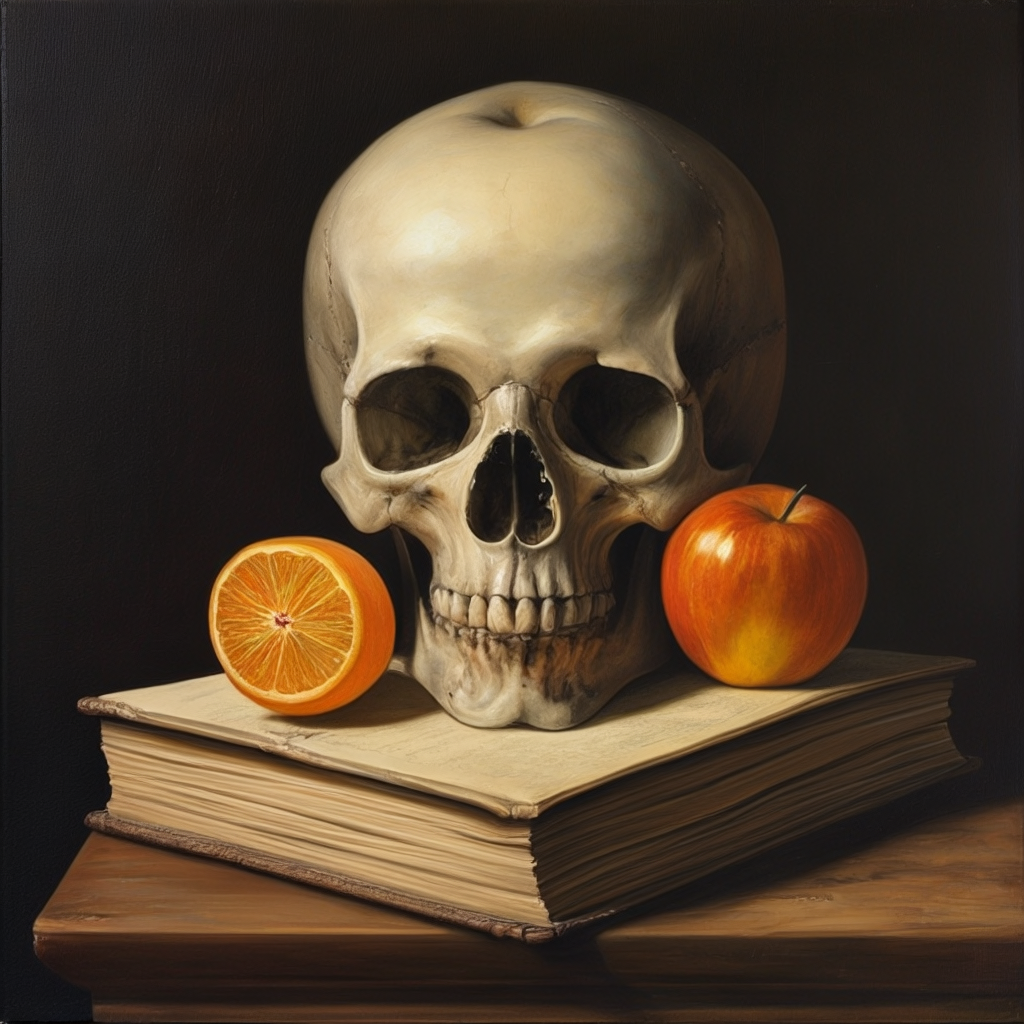

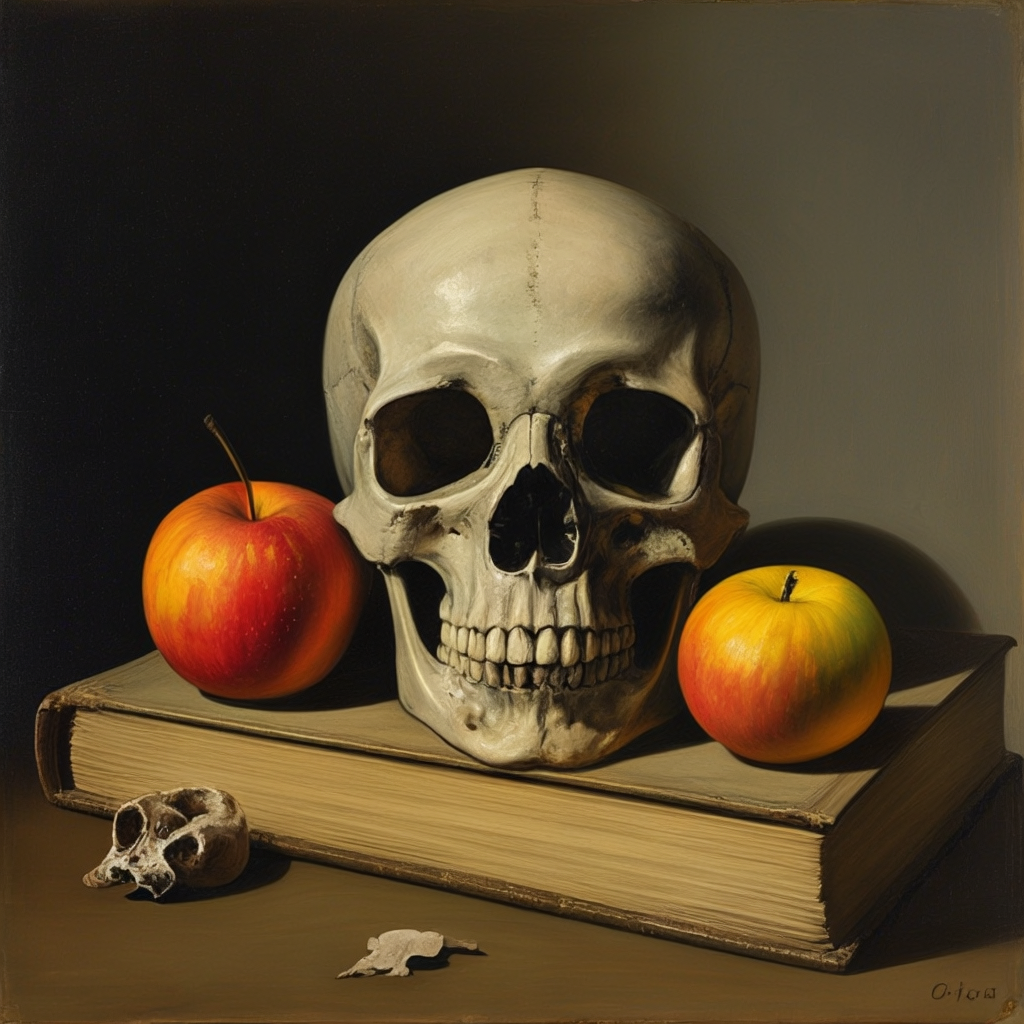

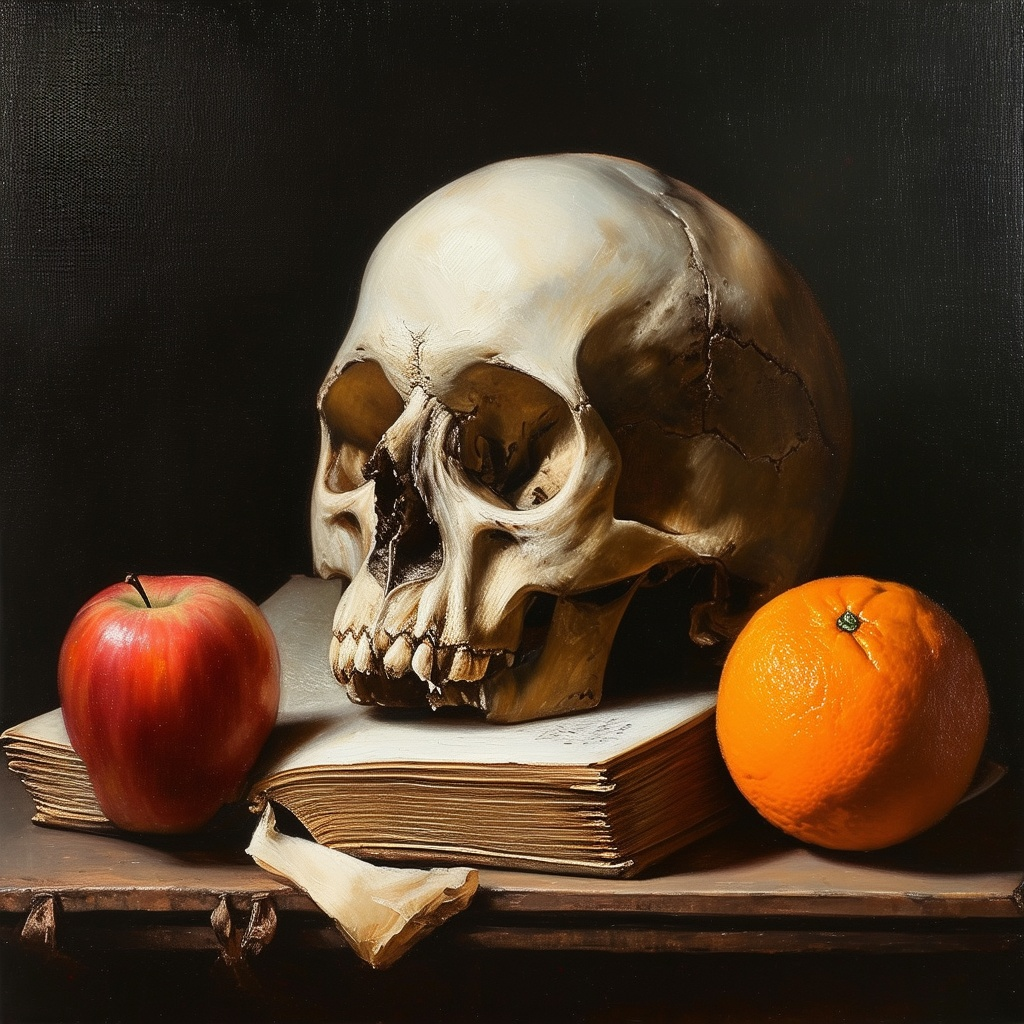

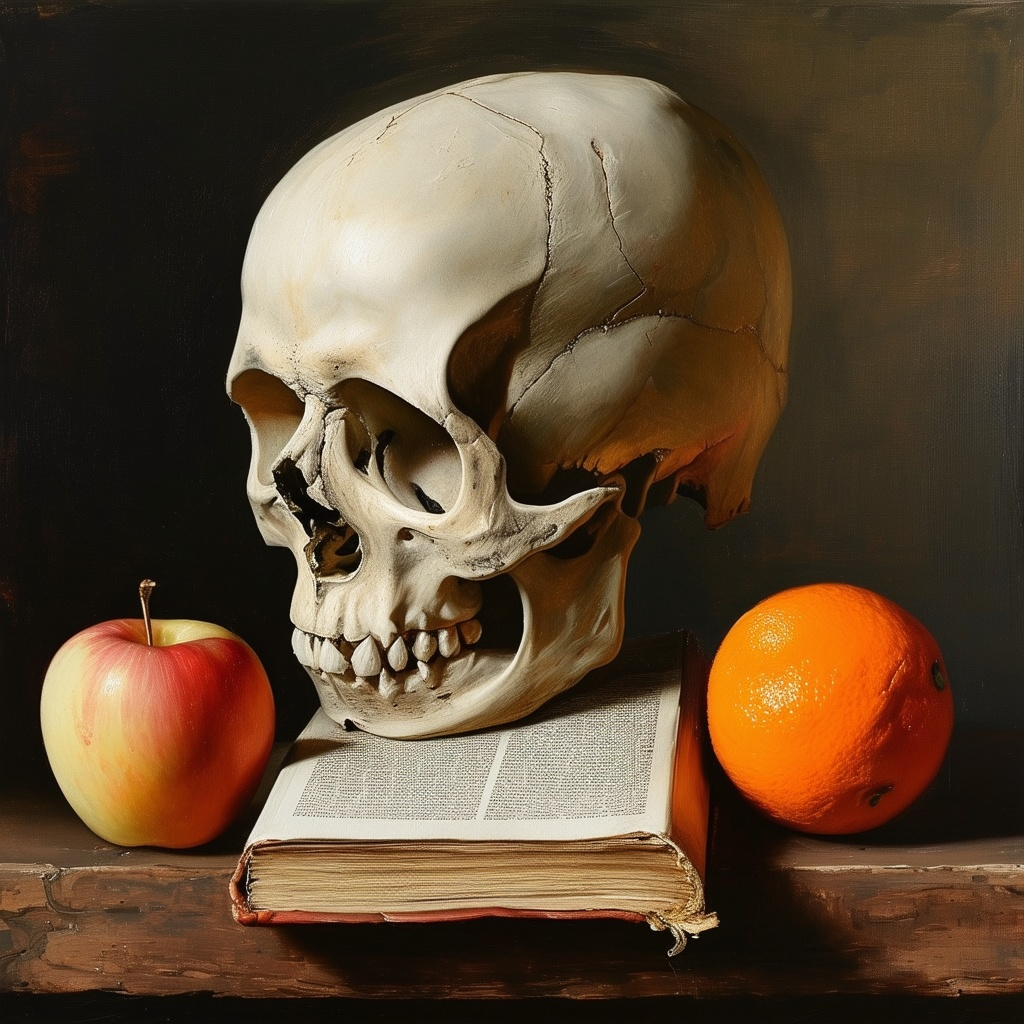

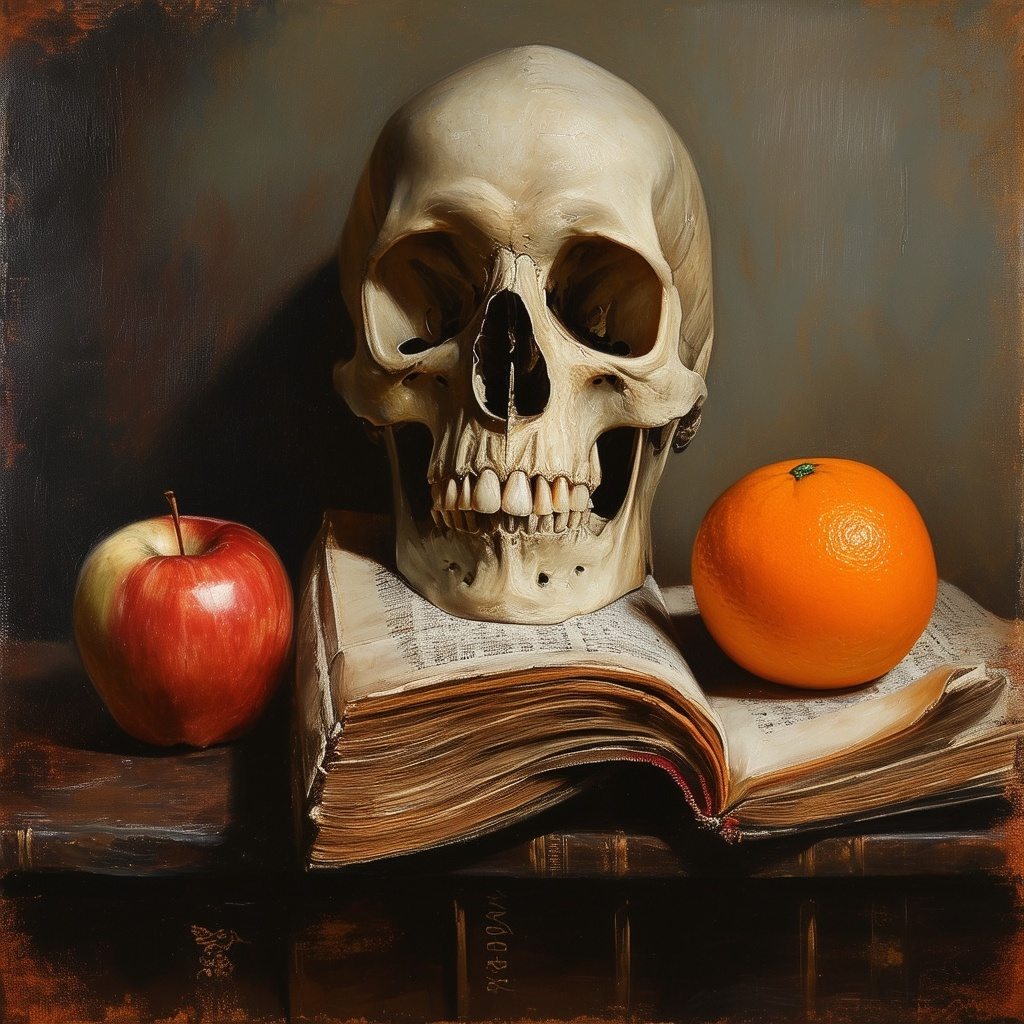

Prompt:

Still life painting of a skull above a book, with an orange on the right and an apple on the left

SD3 is the clear winner in this test. The composition is correct in every image I generated.

SDXL: (None is correct)

Stable Cascade: (1 out of 3 is correct)

Stable Diffusion 3: (All are correct)

This is an expected improvement as newer models like DALLE3 have used highly accurate captions in training to significantly improve prompt-following. From SD3’s user testing, the prompt-following of SD3 is on par with DALLE3.

How can you take advantage of it? In addition to just using it, you can generate a template image to be used with ControlNet with SD 1.5 and SDXL models.

Hands

Here’s the prompt I used:

photo of open palms, detailed fingers, city street

I don’t see any clear improvement in generating hands. I hope to see a focused effort from Stability to add high-quality photos of hands to the training data.

SDXL:

Stable Cascade:

SD3:

Faces

Generating faces is perhaps the most popular application of AI image generators. Let’s test with the following prompt.

photo of a 20 year old korean k-pop star, beautiful woman, detailed face, eyes, lips, nose, hair, realistic skin tone

I added keywords “3D” and “cartoon” to the negative prompt to enhance the realistic style:

disfigured, deformed, ugly, 3d, cartoon

SDXL:

Stable Cascade:

SD3:

All models performed pretty well with no serious defects. They have different default styles and exposure levels. You should be able to customize it with more specific prompt and negative prompt.

Styles

AI models can generate a lot more styles than I can test. I will pick a few prompts from the SDXL style reference.

Expressionist

Prompt

expressionist woman. raw, emotional, dynamic, distortion for emotional effect, vibrant, use of unusual colors, detailed

Negative prompt

anime, photorealistic, 35mm film, deformed, glitch, low contrast, noisy

SD3 appropriately uses bold colors for this prompt. I would rate its generation more faithful to the prompt.

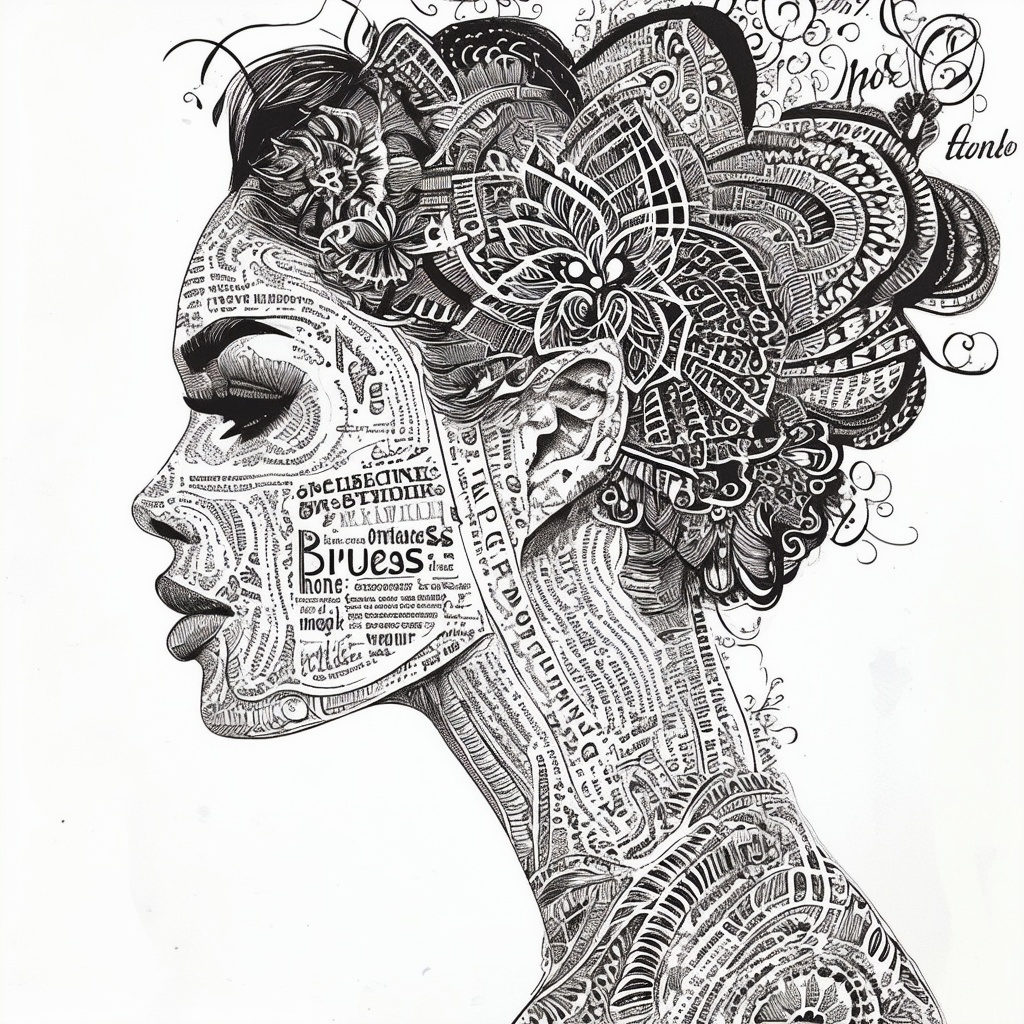

Typography art

Prompt:

typographic art woman . stylized, intricate, detailed, artistic, text-based

Negative prompt:

ugly, deformed, noisy, blurry, low contrast, realism, photorealistic

I’m pleasantly surprised by how well SD3 blends the text and image with pleasing details.

RPG Game

Prompt:

role-playing game (RPG) style fantasy city . detailed, vibrant, immersive, reminiscent of high fantasy RPG games

Negative prompt:

sci-fi, modern, urban, futuristic, low detailed

All models generate reasonable video game images. Stable Cascade’s default style is a newer 3D game style, while SDXL and SD3 create a style of older video games.

Overall, I really like SD3’s ability to render styles accurately. There are real opportunities to mix and match different styles to generate something new!

Conclusions

Stable Diffusion 3 delivers noted improvements in rendering text and generating images that closely follow the prompt. While SD3 is still imperfect in directing human poses with prompts, it has improved over the previous most competent models.

Face generations are generally on par with previous models, which have already been excellent.

Unfortunately, SD3 still has problems in generating hands. It didn’t seem to have improved over the previous models. Hopefully, there will be some more focused effort in this area.

Generating new styles is an excellent opportunity for SD3. With its enhanced capability in text rendering and prompt-following, we can more accurately dial in the styles we want.

I’d be curious to see the comparison to the “medium” version which is able to be run on PCs, I believe the large model is what they have in their API and I’d expect performance on many tasks to be different because it’s a much bigger model in their API.

I’ve tried both Stable Cascade and SD3 on my computer and while they may follow prompts a little better in some ways, I don’t feel like they’re a good replacement yet for sdxl and 1.5 and have lots of weird peculiarities. Maybe that will change with time. I’d love control nets that work well and custom optimized models LoRAs and other stuff from users and those didn’t exist much yet. Maybe they will come.

It’s a shame there hasn’t been much community interest in Stable Cascade since the resources for training it are supposed to be significantly reduced, so it seems like a model where the community could make it lots better very quickly and release specialized models but it has been overshadowed by stable diffusion 3 and I think the license also scared some people off.

Maybe the weirdnesses are because of the things i typically prompt (not generally young, attractive women which is what I see most photos and prompts for on sites like civitai). It sort of feels like these models are great at rendering people who look like young YouTube makeup influencers with eye glitter, but less so for some other body and face types.

I find that Stable Cascade tries to make every photo I generate look like either a horror movie (very dimly lit with most everything in heavy shadow) or like a super contrasty portrait with heavy shadowing on one side of a face and in all eye sockets and light from one side. I can’t get lighting prompts to work well. It seems to avoid drawing eye details and makes lots of people with eyes closed or completely shadowed so you can’t see their irises. It is also much worse at creating facial detail for portraits of people from the waist up at 1024×1024 than sdxl variants. Their faces come out with globby details (it does ok if the portrait is a head shot and takes more of the frame). I think rendering at something like 1532×1024 instead, also helps. I like the compositions it makes more than many sdxl ones, but it also doesn’t vary them as much if you want some variety. Putting ”horror” into the negative prompts helps somewhat in getting photos that don’t look like they were taken from a Netflix horror movie in obnoxiously dark HDR with extreme shadowing on everything.

I’ve had a lot of problems with SD3 medium making lots of horribly deformed people, especially if you try to get these people to interact in any way (shaking hands, hug, even standing side by side). It tends to create Siamese twins, merge limbs, miss limbs and other things more than the sdxl models I use do only at 1024 pixels or so). I’ve also found it drawing body proportions in very off ways more than sdxl, too.

For any NSFW generation, the newer models also don’t seem like much of an option at all yet, if that matters to people who are thinking about using them.

While these models have potential, I’m not finding them as flexible or useful as sdxl models yet.

People haven’t invested in Cascade because Stability has announced SD3 and made it available through API. Cascade is a bit better than SDXL but nothing compared to SD3. Turned out that Stability didn’t release the SD3 model in API, but the far inferior SD3 medium model. Since then we are in an inpasse.

Getting a bit out of topic, what is your take about the SD family future? I’ve heard rumours that StabilityAI will cease and SD3 will be its last, and not so good as initially it was expected.

Stability has released strong AI models. I would be surprised if they just cease to exist. Stable Diffusion is the de facto foundation model for AI images. Someone would capture these values. Their tech is good but the business model is weak.

I think they will either get bought up by another company, or get new financing with a more believable business plan. Either way, I don’t think they can afford to release all models to public like what they did.

There are so much they can do to capitalize the models and brand recognition they already have.E.g. Build out a more respectable image generation service, B2B API etc. That brings in recurring revenue and provide faster feedback to the models they are training.

SD3 has a visual acuity that other renderers lack. Yet often when I run Pixart-alpha against SD3 there is very little difference. SD3 has the advantage of legible text (at last); but is still very much a WIP. Which to say, when MidJourney solves the text problem, there will be much clear light between SD3 and MJ. Hands/fingers/limbs/faces – still needs a Harry Potter or an Indiana Jones kind of fix – no matter what generator is in use!!! SD3 promises to release the weights soon™ for desktop (“free”) use!

Will SD3 be available for download in the future for people to train in detail?

That was the promise.

Is SD3 subscriber based?

It’s pay as you use

Will SD3 be available for PC again or only via API?

I would like to have my data only on my computer and not on some other server.

It’s fascinating what SD3 can do now and many thanks for your review Andrew

Have a nice time

Chris

They said they will release it. Probably with an non-commercial license. But who knows after their CEO left.

Thanks for this! Wonderful observation and really helpful to understand what the model can be used for. I have already been using it and I am amazed.