Cognvideo is a state-of-the-art AI video generator similar to Kling, except you can generate the video locally on your PC. In this article, you will learn how to use Cogvideo in ComfyUI.

Table of Contents

Software

We will use ComfyUI, a free AI image and video generator. You can use it on Windows, Mac, or Google Colab.

Think Diffusion provides an online ComfyUI service. They offer an extra 20% credit to our readers.

Read the ComfyUI beginner’s guide if you are new to ComfyUI. See the Quick Start Guide if you are new to AI images and videos.

Take the ComfyUI course to learn how to use ComfyUI step by step.

What is CogvideoX?

CogVideoX is a significant advancement in text-to-video generation. Building upon the success of text-to-image models like Stable Diffusion, CogVideo is specifically designed to generate coherent and high-quality videos from text prompts.

Model architecture

Here are some notable model design features.

- CogVideo uses the large T5 text encoder to convert the text prompt into embeddings, similar to Stable Diffusion 3 and Flux AI.

- In Stable Diffusion, an VAE compresses an image to and from the latent space. CogVideo generalizes this idea and uses a 3D casual VAE to compress a video into the latent space.

Models available

CogVideo models with 2B and 5B parameters are available. For higher-quality videos, we will use the 5B version in this tutorial.

How to use CogVideo in ComfyUI

This workflow is tested with an RTX4090 GPU card. It takes about 15 minutes to generate a video with a maximum VRAM usage of 16GB.

Step 1: Load the CogVideo workflow

Download the workflow JSON file below. Drop it to ComfyUI.

Step 2: Install missing nodes

You will need the ComfyUI Manager for this step. Follow the link for instructions to install ComfyUI Manager.

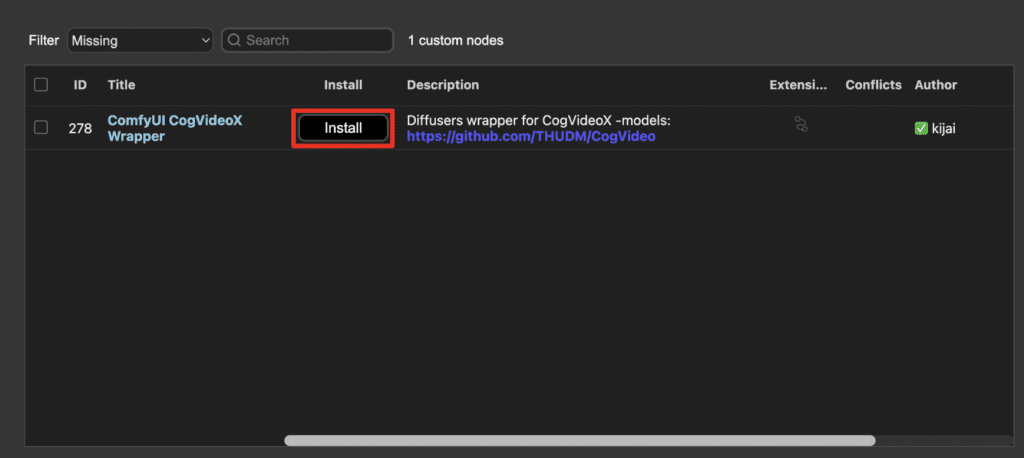

Click Manager on the sidebar. Click Install missing custom nodes.

Install the ComfyUI CogVideoX Wrapper.

Restart ComfyUI.

Refresh the ComfyUI page.

Step 3: Download the T5 text encoder

Download the T5 text encoder using the link below. Put it in ComfyUI > models > clip.

Step 4: Generate a video.

Press Queue Prompt to generate a video.

It will automatically download the 5B CogVideo model the first time you run it. It will take a while as if nothing is happening. But you can tell by the size of the folder models > CogVideos getting larger.

After the download is complete, it will start the video generation.

Hi Andrew,

Thanks for this tutorial. Can you use CognVideo to generate a video longer than 5 seconds. Thanks.

Zaffer

(member)

Yes technically but it is outside of what this model is trained to do.

Thanks for getting back to me Andrew. Is there a model that is free and will run locally and generate longer videos?

does this work offline,?

yes

Does it work in CPU with 16GB RAM?

No.

Any idea what this error means?

Failed to validate prompt for output 33:

* CogVideoDecode 11:

– Exception when validating inner node: tuple index out of range

Output will be ignored

invalid prompt: {‘type’: ‘prompt_outputs_failed_validation’, ‘message’: ‘Prompt outputs failed validation’, ‘details’: ”, ‘extra_info’: {}}

Same error for me, looking for a fix.

same, please help

Fixed.

same, please help

It should be working. make sure the nodes and comfyui are all up-to-date. The json file was updated a few days again. version v3.

Hi there, got the following error, any idea please? Thank you!!!

Prompt outputs failed validation

DownloadAndLoadCogVideoModel:

– Value not in list: fp8_transformer: ‘False’ not in [‘disabled’, ‘enabled’, ‘fastmode’]

CogVideoSampler:

– Value 49.0 bigger than max of 1.0: denoise_strength

– Value not in list: scheduler: ‘DPM’ not in [‘DPM++’, ‘Euler’, ‘Euler A’, ‘PNDM’, ‘DDIM’, ‘CogVideoXDDIM’, ‘CogVideoXDPMScheduler’, ‘SASolverScheduler’, ‘UniPCMultistepScheduler’, ‘HeunDiscreteScheduler’, ‘DEISMultistepScheduler’, ‘LCMScheduler’]

Fixed.

I have tried downloading CogVideo in ComfyUI but I’m unable to complete it because of the following error:

Prompt outputs failed validation

CheckpointLoaderSimple:

– Value not in list: ckpt_name: ‘v1-5-pruned-emaonly.ckpt’ not in []

Any suggestion on how to fix this problem?

Download here: https://huggingface.co/stable-diffusion-v1-5/stable-diffusion-v1-5/blob/main/v1-5-pruned-emaonly.safetensors

Put it in comfyui > models > checkpoints.

Thank you for the input, Andrew. Now I’m getting a new error message. Here it is:

CogVideoSampler

Expected query, key, and value to have the same dtype, but got query.dtype: float key.dtype: float and value.dtype: c10::Half instead.

Any idea on how to fix this problem?

Thanks again.

Not quite sure for this one. You can try deleting the cogvideo5B folder in models>cogvideo and rerun.

Andrew, you can try to use DualClipLoader(Flux) node instead of ClipLoader node(SD3).

Thanks!

Highly dependant on prompt and skill – and *every* video generator out there is a hit or miss as far as what works.

I’ve used every text-to-vid, img-to-vid and vid-to-vid tool out there that I can find on Github and otherwise and I get mostly crap from all of them, with a couple good ones here and there once you stumble on something that works well.

CogVideoX is actually pretty phenomenal, even when compared to the more well-known of the bunch like Kling and Runway.

Not saying it’s better, it isn’t – but is capable of giving you stuff that is nearly on-par – and given the fact we have other AI tools to clean things up, it’s pretty amazing really.

And don’t forget – this is the worst it’s going to get.

Yeah I think it is pretty good for a *local* generator.

Hi Andrew,

“max of 16GB VRAM” or it’s requirement as 16Mb and above?

It means the usage is below 16GB VRAM. So a 16GB card would work.

“””””””””””high quality””””””””””” videos. Right…