A VAE is a partial update to Stable Diffusion 1.4 or 1.5 models that improves how eyes are rendered. I will explain what a VAE is, what you can expect, where you can get it, and how to install and use it.


What is VAE?

VAE stands for variational autoencoder. It is part of the neural network model that encodes and decodes the images to and from the smaller latent space, so that computation can be faster.
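To give a sense of how much smaller the latent space is, here is the arithmetic for one 512×512 RGB image. It assumes the usual Stable Diffusion v1 VAE configuration of 8× downsampling in each spatial dimension and 4 latent channels:

```latex
\underbrace{512 \times 512 \times 3}_{\text{pixel values}} = 786{,}432
\quad\longrightarrow\quad
\underbrace{\tfrac{512}{8} \times \tfrac{512}{8} \times 4}_{\text{latent values}} = 64 \times 64 \times 4 = 16{,}384,
\qquad
\frac{786{,}432}{16{,}384} = 48 .
```

In text-to-image generation, all the denoising steps work on this 48× smaller latent, and only at the end does the VAE decoder turn it back into pixels. That final step is where a better decoder can recover fine details.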

Do I need a VAE?

You don’t need to install a VAE file to run Stable Diffusion: any model you use, whether v1, v2 or custom, already has a default VAE.

When people talk about downloading and using a VAE, they mean using an improved version of it. This happens when the model trainer further fine-tunes the VAE part of the model with additional data. Instead of releasing a whole new model, which is a big file, they release only the small part that has been updated.

What is the effect of using VAE?

Usually, the effect is small. An improved VAE decodes the image from the latent space more faithfully, so fine details are better recovered. This helps with eyes and text, where fine details matter most.

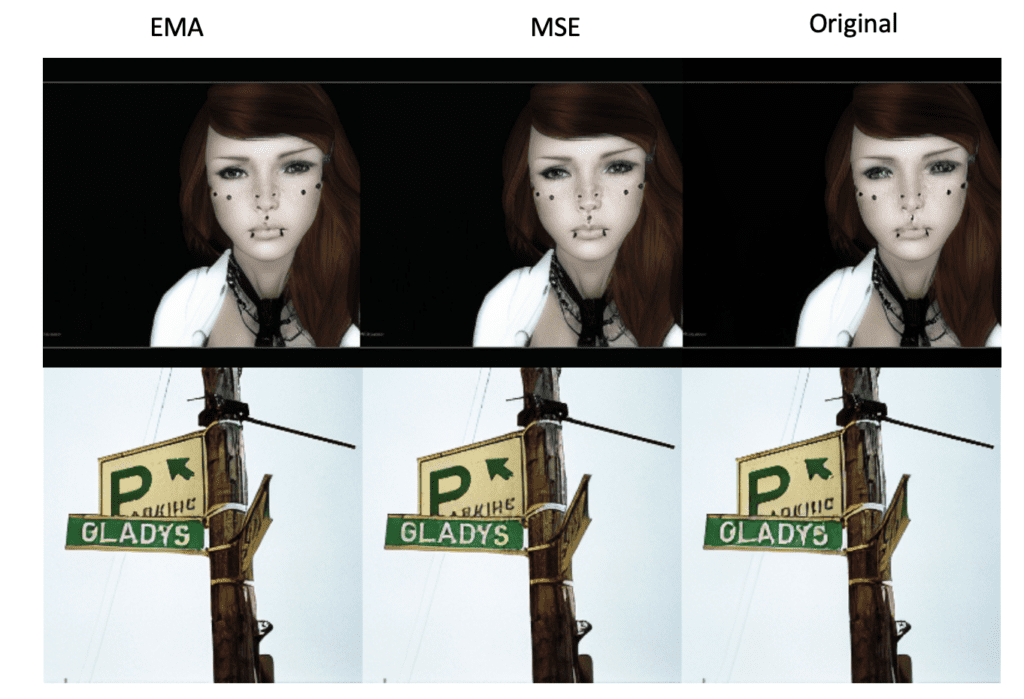

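Stability AI released two variants of fine-tuned VAE decoders, EMA and MSE. The names describe how they were trained, not how good they are: EMA (Exponential Moving Average) refers to the averaged model weights kept during training, and MSE (Mean Squared Error) refers to the reconstruction loss that the second variant was fine-tuned to emphasize.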
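For reference, here are the two terms in their standard textbook form. θ denotes the model weights at a training step, θ̄ their running average with a decay β close to 1, x an input image with N pixel values, and x̂ the decoder’s reconstruction. The exact decay and loss weighting Stability used are not given here, so treat this as the general definition only:

```latex
\bar{\theta} \leftarrow \beta\,\bar{\theta} + (1 - \beta)\,\theta ,
\qquad
\mathrm{MSE}(x, \hat{x}) = \frac{1}{N} \sum_{i=1}^{N} \left( x_i - \hat{x}_i \right)^2 .
```

Because squared error punishes large pixel deviations heavily but tolerates many small ones, a decoder tuned toward it tends to average out fine texture, which is consistent with the smoother MSE results described below.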

See their comparison reproduced below.

Which one should you use? Stability’s assessment with 256×256 images is that EMA produces sharper images while MSE’s images are smoother. (That matches my own testing.)

In my own testing of Stable Diffusion v1.4 and v1.5 with 512×512 images, I saw good improvements in rendering eyes in some images, especially when the faces are small. I didn’t see any improvement in rendering text, but I don’t think many people use Stable Diffusion for that anyway.

In no case did the new VAE perform worse: it either did better or made no difference.

Below is a comparison of the original, EMA, and MSE VAEs using the Stable Diffusion v1.5 model (the prompt can be found here). Enlarge the images to compare the differences.

Improvements to text generation are less clear (I added “holding a sign said Stable Diffusion” to the prompt):

You can also use these VAEs with a custom model. I tested them with some anime models but didn’t see any improvements. I encourage you to run your own tests.

As a final note, the EMA and MSE VAEs are also compatible with Stable Diffusion v2.0. You can use them, but the effect is minimal because v2.0 already renders eyes very well. Perhaps Stability has already incorporated the improvement into the model.

Should I use a VAE?

You don’t need to use a VAE if you are happy with the results you are getting. For example, you may already be using a face restoration tool like CodeFormer to fix eyes.

You should use a VAE if you are in the camp of taking all the little improvements you can get. You only need to go through the trouble of setting it up once. After that, the art creation workflow stays the same.

How to use VAE?

VAEs are ready to use in the Colab Notebook included in the Quick Start Guide.

Download

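Currently, Stability has released two improved versions of the VAE. Below are direct download links.

- EMA: https://huggingface.co/stabilityai/sd-vae-ft-ema-original/resolve/main/vae-ft-ema-560000-ema-pruned.ckpt

- MSE: https://huggingface.co/stabilityai/sd-vae-ft-mse-original/resolve/main/vae-ft-mse-840000-ema-pruned.ckpt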
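If you would rather script the download so it can be re-run safely, here is a minimal POSIX shell sketch. It assumes wget is installed and that you run it from the stable-diffusion-webui directory; `fetch_vaes` is a hypothetical helper name, not part of AUTOMATIC1111. It saves both files to models/VAE (the folder described under Installation below) and skips any file that is already there.

```shell
#!/bin/sh
# Sketch: fetch Stability's two fine-tuned VAE checkpoints, skipping files
# that already exist. fetch_vaes is a hypothetical helper; the optional first
# argument overrides the target folder (default: models/VAE).
fetch_vaes() {
  vae_dir=${1:-models/VAE}
  mkdir -p "$vae_dir" || return 1
  for url in \
    https://huggingface.co/stabilityai/sd-vae-ft-ema-original/resolve/main/vae-ft-ema-560000-ema-pruned.ckpt \
    https://huggingface.co/stabilityai/sd-vae-ft-mse-original/resolve/main/vae-ft-mse-840000-ema-pruned.ckpt
  do
    # Keep the file name from the URL, e.g. vae-ft-ema-560000-ema-pruned.ckpt
    dest="$vae_dir/$(basename "$url")"
    if [ -s "$dest" ]; then
      # A non-empty file is assumed to be a finished download.
      echo "skip: $dest already present"
      continue
    fi
    # Download under a temporary name, then rename, so an interrupted
    # transfer never leaves a truncated .ckpt under the final name.
    if wget "$url" -O "$dest.part"; then
      mv "$dest.part" "$dest"
      echo "saved: $dest"
    else
      rm -f "$dest.part"
      return 1
    fi
  done
}
```

Save it to a file, load it with `. ./fetch_vaes.sh` (the file name is arbitrary), and run `fetch_vaes`, or pass a different target folder as the first argument.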

Installation

These installation instructions apply to the AUTOMATIC1111 GUI. Place the downloaded VAE files in the following directory:

stable-diffusion-webui/models/VAE

For Linux and Mac OS

For convenience, run the commands below from the stable-diffusion-webui directory on Linux or Mac OS to download and install the VAE files.

```shell
wget https://huggingface.co/stabilityai/sd-vae-ft-ema-original/resolve/main/vae-ft-ema-560000-ema-pruned.ckpt -O models/VAE/vae-ft-ema-560000-ema-pruned.ckpt

wget https://huggingface.co/stabilityai/sd-vae-ft-mse-original/resolve/main/vae-ft-mse-840000-ema-pruned.ckpt -O models/VAE/vae-ft-mse-840000-ema-pruned.ckpt
```

Use

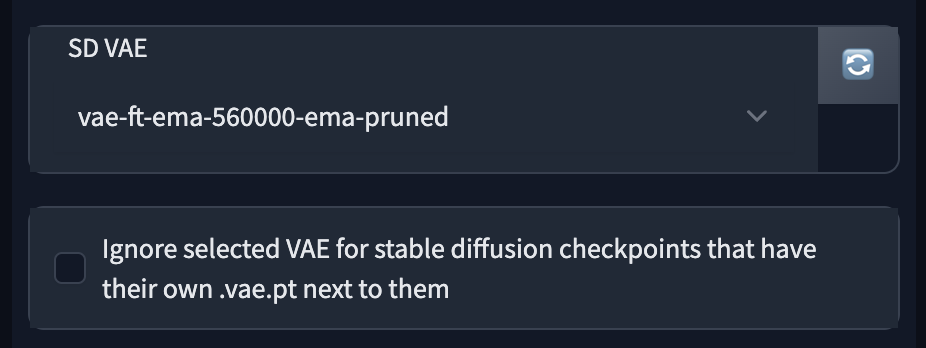

To use a VAE in the AUTOMATIC1111 GUI, click the Settings tab, then click the VAE section on the left.

In the SD VAE dropdown menu, select the VAE file you want to use.

Press the big red Apply Settings button at the top. You should see the message

Settings: sd_vae applied

in the Settings tab when the VAE has loaded successfully.

Other options in the dropdown menu are:

- None: Use the original VAE that comes with the model.

- Auto: see this post for how it behaves. I don’t recommend Auto for beginners, since it is easy to lose track of which VAE is being used.
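If you are unsure which VAE is currently selected, you can also check the settings file directly. The sketch below assumes AUTOMATIC1111 keeps its settings in config.json in the stable-diffusion-webui directory and saves this dropdown under the key sd_vae (the name shown in the “Settings: sd_vae applied” message); `current_vae` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: print the VAE saved in the webui settings file.
# Assumptions: settings live in config.json (default path below) and the
# dropdown is stored under the key "sd_vae", one key per line.
current_vae() {
  config=${1:-config.json}
  if [ ! -f "$config" ]; then
    echo "no $config found" >&2
    return 1
  fi
  # Match the exact key "sd_vae" (not sd_vae_* keys) and print its string value.
  sed -n 's/.*"sd_vae"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$config"
}
```

Run `current_vae` from the webui directory, or pass the path to config.json as the first argument. It only reports what is saved in the settings; with Auto selected, the VAE actually loaded still depends on the rules described in the post linked above.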

Pro tip: If you cannot find a setting, click Show All Pages on the left. All settings will be shown on a single page. Use Ctrl-F to find the setting.

Summary

We have gone through how to use the two improved VAE decoders released by Stability AI. They provide small but noticeable improvements to rendering eyes. You can decide whether you want to use them.

I am using it because I haven’t seen any case where it harms my images. I hope this article helps!

It appears the setting has moved into its own category, “VAE”, in the settings, below “Stable Diffusion” and “Stable Diffusion XL”. At least that’s where I found my EMA checkpoint in the dropdown menu (in the “Stable Diffusion” category, the dropdown menu is “SD Unet” and only offers “Automatic” and “None”).

Thanks! corrected.

Hello, just a curious question: is there a way to train (or fine-tune) my own VAE?

I’m not trying to achieve anything in particular; I just want to play with it and see the results.

You can download a model that already includes a VAE.

How do I know whether a model includes a VAE?

Unfortunately, it isn’t there for me. I have no VAE dropdown box.

Here are the settings I have under Settings > Stable Diffusion:

(scroll bar) Checkpoints to cache in RAM

(scroll bar) VAE Checkpoints to cache in RAM

(checkbox) Ignore selected VAE for stable diffusion checkpoints that have their own .vae.pt next to them

(scroll bar) Inpainting conditioning mask strength

(scroll bar) Noise multiplier for img2img

(checkbox) Apply color correction to img2img results to match original colors.

(checkbox) With img2img, do exactly the amount of steps the slider specifies (normally you’d do less with less denoising).

(color choice) With img2img, fill image’s transparent parts with this color.

(checkbox) Enable quantization in K samplers for sharper and cleaner results. This may change existing seeds. Requires restart to apply.

(checkbox) Emphasis: use (text) to make model pay more attention to text and [text] to make it pay less attention

(checkbox) Make K-diffusion samplers produce same images in a batch as when making a single image

(scroll bar) Increase coherency by padding from the last comma within n tokens when using more than 75 tokens

(scroll bar) Clip skip

(checkbox) Upcast cross attention layer to float32

It is supposed to be the 3rd item. I suggest re-cloning the webui and starting without copying your config files.

I do not see the SD VAE drop down in the settings. I have also searched in the All Pages settings and have not found that drop down. Have they removed it? Or done something else with it?

Hi, it’s still there in settings -> Stable Diffusion

Hi, I was wondering whether VAEs also work with InvokeAI. I’ve noticed that in the model manager, the details for each model include a VAE box where, I’m guessing, you can drop in the VAE files from Stability. At the moment the box is empty.

Thanks

Sorry I haven’t used InvokeAI.

I misread. I take back what I sent.

It is now just titled “Stable Diffusion” in the settings.

A person can click around, but keep in mind that readers may feel lost when they don’t see exactly what the instructions describe.

Not sure if the UI changed but I had a hard time finding SD VAE in the settings tab. As luck would have it, I started clicking on the items in the left menu and when I clicked on “Stable Diffusion” I happened to find the needle in the haystack. You might want to update this blog.

Thanks for pointing it out. Post updated.