ControlNet is an indispensable tool for precisely controlling the composition of AI images. In Stable Diffusion, you can use it to specify human poses and compositions. ControlNet is now also available for the Flux.1 dev model.

In this article, I will guide you through setting up ControlNet for Flux AI models locally in ComfyUI.

Software

We will use ComfyUI, a free AI image and video generator. You can use it on Windows, Mac, or Google Colab.

Think Diffusion provides an online ComfyUI service. They offer an extra 20% credit to our readers.

Read the ComfyUI beginner’s guide if you are new to ComfyUI. See the Quick Start Guide if you are new to AI images and videos.

Take the ComfyUI course to learn how to use ComfyUI step by step.

Follow the Flux.1 dev model installation guide if you have not installed the Flux model on ComfyUI.

Install ControlNet for Flux.1 dev model

XLabs AI has developed custom nodes and ControlNet models for Flux on ComfyUI. To run ControlNet, you need both the x-flux-comfyui custom nodes and the corresponding ControlNet models.

Step 1: Update ComfyUI

Update ComfyUI to the latest version. This can be done using the ComfyUI Manager.

In ComfyUI, click Manager > Update ComfyUI.

Step 2: Load ControlNet workflow

Download the workflow JSON file below.

Drop it in ComfyUI.
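If you want to know what a workflow expects before loading it, you can inspect the JSON file first. The sketch below assumes the export has a top-level "nodes" list whose entries carry "type" and, optionally, "widgets_values", which is the shape of the workflow a reader posted in the comments. The function name and the sample data are made up for illustration.

```python
import json


def summarize_workflow(workflow):
    """Return (sorted node types, sorted model file names) found in a workflow dict."""
    node_types = set()
    model_files = set()
    for node in workflow.get("nodes", []):
        node_types.add(node["type"])
        # widgets_values is optional; nodes such as PreviewImage do not have it.
        for value in node.get("widgets_values") or []:
            if isinstance(value, str) and value.endswith(".safetensors"):
                model_files.add(value)
    return sorted(node_types), sorted(model_files)


# Tiny inline sample in the same shape as a ComfyUI workflow export.
sample = {
    "nodes": [
        {"id": 32, "type": "UNETLoader",
         "widgets_values": ["flux1-dev-fp8.safetensors", "fp8_e4m3fn"]},
        {"id": 13, "type": "LoadFluxControlNet",
         "widgets_values": ["flux-dev-fp8", "flux-canny-controlnet-v3.safetensors"]},
        {"id": 39, "type": "PreviewImage"},
    ]
}

node_types, model_files = summarize_workflow(sample)
print(node_types)
print(model_files)

# To inspect a real file (the path is a placeholder):
# with open("workflow.json", encoding="utf-8") as f:
#     print(summarize_workflow(json.load(f)))
```

Node types you do not recognize are likely the ones that will show up as missing in the next step, and the file names tell you which models to download.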

Step 3: Install missing nodes

If the workflow uses custom nodes that you have not installed, they appear as red nodes.

Click Manager > Install missing custom nodes.

Install the missing nodes and restart ComfyUI.

Refresh the ComfyUI page.

Step 4: Download the Flux.1 dev model

If you have used Flux on ComfyUI, you may have these files already.

Download the flux1-dev-fp8.safetensors model and put it in ComfyUI > models > unet.

Download the two CLIP models used by the workflow (clip_l.safetensors and t5xxl_fp8_e4m3fn.safetensors) and put them in ComfyUI > models > clip.

Download the Flux VAE model file and put it in ComfyUI > models > vae.

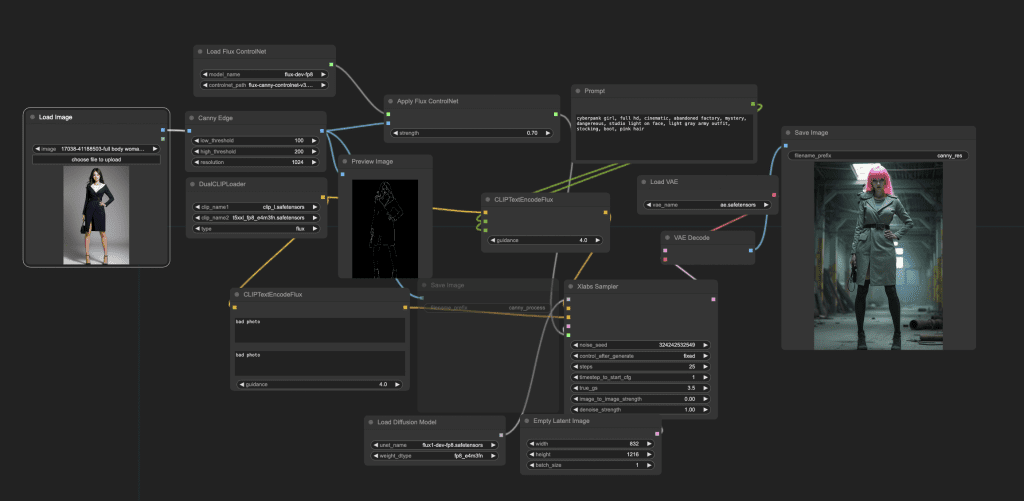

Step 5: Download the Canny ControlNet model

Download the Canny ControlNet model flux-canny-controlnet-v3.safetensors. Put it in ComfyUI > models > xlabs > controlnets.
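Misplaced files are the most common reason the workflow fails to load, so a quick check can save time. Here is a minimal sketch that tests whether each file is where Steps 4 and 5 put it. The CLIP and VAE file names are assumptions taken from a workflow a reader posted in the comments, and the "ComfyUI" root path is a placeholder, so adjust both to your setup.

```python
from pathlib import Path

# Locations relative to the ComfyUI folder, following Steps 4 and 5.
# The CLIP and VAE file names are assumptions; change them to match your downloads.
EXPECTED_FILES = [
    "models/unet/flux1-dev-fp8.safetensors",
    "models/clip/clip_l.safetensors",
    "models/clip/t5xxl_fp8_e4m3fn.safetensors",
    "models/vae/ae.safetensors",
    "models/xlabs/controlnets/flux-canny-controlnet-v3.safetensors",
]


def find_missing(comfyui_root, expected=EXPECTED_FILES):
    """Return the expected relative paths that do not exist under comfyui_root."""
    root = Path(comfyui_root)
    return [rel for rel in expected if not (root / rel).is_file()]


if __name__ == "__main__":
    missing = find_missing("ComfyUI")  # placeholder path to your ComfyUI folder
    if missing:
        print("Missing files:")
        for rel in missing:
            print("  " + rel)
    else:
        print("All expected model files are in place.")
```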

Step 6: Run the workflow

Your workflow should be ready to run.

Upload a reference image to the Load Image node. You can also use the image below for testing.

Click Queue Prompt to generate an image.

Tips for using ControlNet for Flux

Do not set the strength value in the Apply Flux ControlNet node too high. If you see artifacts in the generated image, lower it.

Adjust the low_threshold and high_threshold of the Canny Edge node to control how much detail to copy from the reference image.
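To build some intuition for the two thresholds, here is a simplified sketch of Canny-style double thresholding on a single row of gradient magnitudes. It assumes the Canny Edge node follows the standard algorithm, which I have not verified against its source. The function name and the sample values are made up, and 100 and 200 are only example thresholds.

```python
def hysteresis_1d(magnitudes, low_threshold, high_threshold):
    """Canny-style double thresholding on a 1D row of gradient magnitudes.

    A pixel is kept if it is at least high_threshold (strong), or if it is
    at least low_threshold (weak) and linked to a strong pixel through
    neighbours that are all at least weak.
    """
    n = len(magnitudes)
    keep = [m >= high_threshold for m in magnitudes]
    # Grow outward from the strong pixels through weak neighbours.
    stack = [i for i, kept in enumerate(keep) if kept]
    while stack:
        i = stack.pop()
        for j in (i - 1, i + 1):
            if 0 <= j < n and not keep[j] and magnitudes[j] >= low_threshold:
                keep[j] = True
                stack.append(j)
    return keep


row = [30, 120, 210, 150, 60, 130, 90]
print(hysteresis_1d(row, 100, 200))  # only the edge around the strong pixel
print(hysteresis_1d(row, 50, 200))   # a lower low_threshold keeps fainter detail
print(hysteresis_1d(row, 100, 220))  # a higher high_threshold drops the edge
```

Lowering low_threshold lets faint edges attach to strong ones, so more detail is copied. Raising high_threshold removes edges that have no strong pixel to anchor them.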

Change the image size in the Empty Latent Image node. Use a reference image with the same aspect ratio for the best results.
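If your reference image is not the size you want to generate, you can compute a width and height with the same aspect ratio. This is a minimal sketch: the pixel budget of about one megapixel and the rounding to multiples of 16 are my assumptions, not requirements stated by the nodes, and the function name is made up.

```python
import math


def match_aspect(ref_width, ref_height, target_pixels=1024 * 1024, multiple=16):
    """Pick a width and height close to target_pixels that keep the reference
    aspect ratio, each rounded to the nearest multiple of `multiple`."""
    aspect = ref_width / ref_height
    height = math.sqrt(target_pixels / aspect)
    width = height * aspect

    def snap(x):
        # Round to the nearest multiple, but never below one multiple.
        return max(multiple, round(x / multiple) * multiple)

    return snap(width), snap(height)


print(match_aspect(1920, 1080))  # landscape reference
print(match_aspect(1664, 2432))  # portrait reference
```

Enter the two numbers as the width and height of the Empty Latent Image node. Rounding changes the ratio slightly, which is usually small enough not to matter.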

Additional ControlNets for Flux

If you have followed the installation steps above, you can easily add additional ControlNets to ComfyUI.

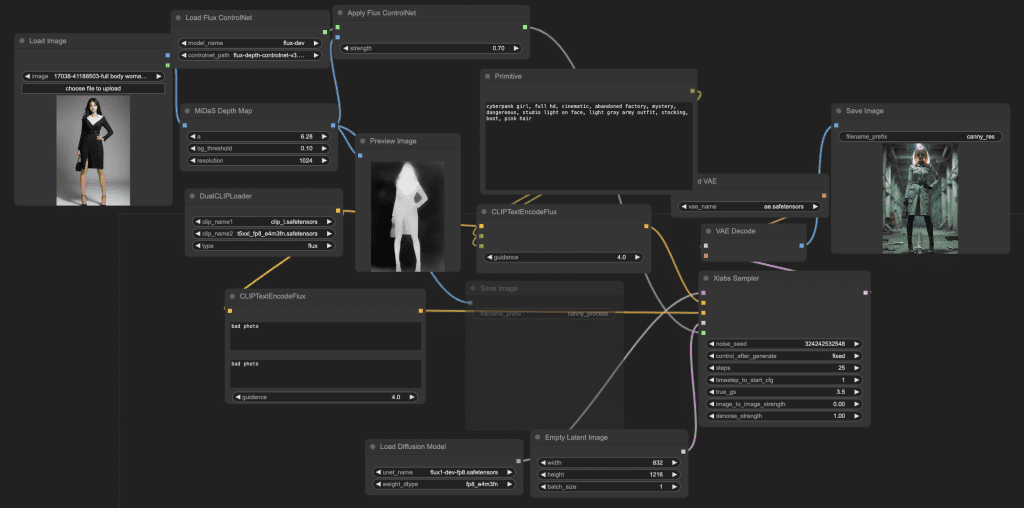

Depth ControlNet for Flux

The depth ControlNet copies the depth information from a reference image. It is useful for copying the perceived 3D composition from the reference image.

Wire the ComfyUI workflow as shown below.

If you are a member of the site, you can download the JSON workflow here or below.

Drop it in ComfyUI.

Download the Depth ControlNet model flux-depth-controlnet-v3.safetensors. Put it in ComfyUI > models > xlabs > controlnets.

Upload a reference image to the Load Image node.

Click Queue Prompt to run.

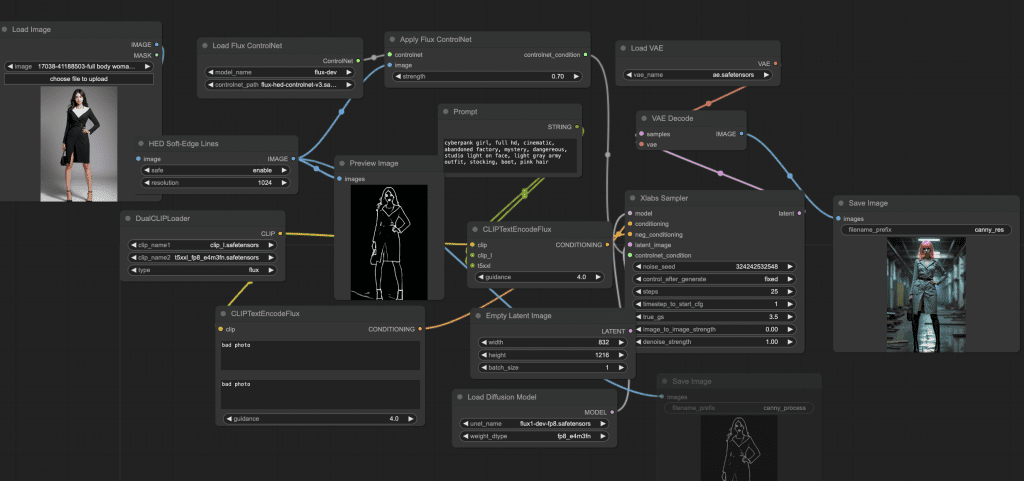

HED ControlNet for Flux

The HED ControlNet copies the rough outline from a reference image. It is useful for copying the general shape but not fine details from the reference image.

Follow the steps below to install the HED ControlNet for Flux.

Wire the ComfyUI workflow as shown below.

If you are a member of the site, you can download the JSON workflow here or below.

Drop it in ComfyUI.

Download the HED ControlNet model flux-hed-controlnet-v3.safetensors. Put it in ComfyUI > models > xlabs > controlnets.

Upload a reference image to the Load Image node.

Click Queue Prompt to run.

Hi Andrew, this doesn’t seem to work on Colab because some of the models aren’t in the right place. Flux1-dev-fp8 is in the AI_PICS/models/Stable-diffusion folder rather than models/unet (I didn’t think this checkpoint model was a unet?), and the node doesn’t find the ControlNet models I installed in models/ControlNet.

Can I do anything about this and/or can you fix it?

Thanks

The XLabs ControlNet models should be in models > xlabs > controlnets, not in models > ControlNet.

This workflow loads the unet and clip separately, so it needs the flux1-dev-fp8 model to be in the unet folder. You can copy the file there or use a symlink to save disk space.

Thanks, Andrew. Please could you explain how I would use symlink.

It’s a command line thing and is out of scope of what I can teach.

No problem, I think I’ve worked it out
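For readers who want to try the symlink approach mentioned above, here is a minimal sketch using Python's standard library. The function name is made up and the paths in the usage comment are placeholders based on the folders named in this thread, so replace them with your own. On Windows, creating symlinks may require administrator rights or Developer Mode.

```python
import os
from pathlib import Path


def link_model(source, link_dir):
    """Create a symlink in link_dir that points at an existing model file."""
    source = Path(source).resolve()
    if not source.is_file():
        raise FileNotFoundError(source)
    link_dir = Path(link_dir)
    link_dir.mkdir(parents=True, exist_ok=True)
    link = link_dir / source.name
    # Leave any existing file or link with the same name untouched.
    if not link.exists() and not link.is_symlink():
        os.symlink(source, link)
    return link


# Example usage (placeholder paths):
# link_model(
#     "AI_PICS/models/Stable-diffusion/flux1-dev-fp8.safetensors",
#     "ComfyUI/models/unet",
# )
```

The link takes almost no disk space, and ComfyUI reads it as if the model file were in models/unet.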

At first I thought this didn’t really work. But it is just VERY dependent on the aspect ratio. If it’s not the same as the source image it just does whatever it likes 😉

Therefore I added a “Get Image Size” node and connected it to the “Empty latent Image” node.

Now if only I knew how to use a LORA so the output can be a consistent person… Still so much to learn 😉

That makes sense. In A1111, the ControlNet extension takes care of resizing the control image. Perhaps these nodes are a bit primitive.

Using the depth controlnet, I found that I could successfully change the aspect ratio, within limits, if I resized the depth image. I did that by putting an Enhance And Resize Hint Images node in between the MiDaS Depth Map and Apply Flux ControlNet nodes. That method worked better than resizing the reference image itself. With that approach, it wasn’t easy to control the cropping and filling to get something that was then useful for MiDaS.

Hi Andrew, Canny worked fine, but with Depth and HED I’m getting these messages (I did all my checks to make sure I hadn’t missed anything):

HEDPreprocessor

[Errno 2] No such file or directory: ‘C:\\ComfyUI_windows_portable_nvidia_cu118_or_cpu\\ComfyUI_windows_portable\\ComfyUI\\custom_nodes\\comfyui_controlnet_aux\\ckpts\\lllyasviel\\Annotators\\.cache\\huggingface\\download\\ControlNetHED.pth.5ca93762ffd68a29fee1af9d495bf6aab80ae86f08905fb35472a083a4c7a8fa.incomplete’

MiDaS-DepthMapPreprocessor

[Errno 2] No such file or directory: ‘C:\\ComfyUI_windows_portable_nvidia_cu118_or_cpu\\ComfyUI_windows_portable\\ComfyUI\\custom_nodes\\comfyui_controlnet_aux\\ckpts\\lllyasviel\\Annotators\\.cache\\huggingface\\download\\dpt_hybrid-midas-501f0c75.pt.501f0c75b3bca7daec6b3682c5054c09b366765aef6fa3a09d03a5cb4b230853.incomplete’

It seems the download was incomplete. You can try removing those folders.
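As a rough sketch of that cleanup, the snippet below removes the .cache folder that sits next to the preprocessor checkpoints, so the files can be downloaded again on the next run. The function name is made up, the path in the usage comment is shortened from the error message above, and I cannot promise that this resolves the error in every case. It defaults to a dry run so that nothing is deleted by accident.

```python
import shutil
from pathlib import Path


def clear_download_cache(annotator_dir, dry_run=True):
    """Remove the .cache folder left behind by an interrupted download.

    Returns the cache path if one was found, otherwise None.
    With dry_run=True the folder is only reported, not deleted.
    """
    cache = Path(annotator_dir) / ".cache"
    if not cache.is_dir():
        return None
    if not dry_run:
        shutil.rmtree(cache)
    return cache


# Example usage (placeholder path, adjust to your installation):
# clear_download_cache(
#     r"ComfyUI\custom_nodes\comfyui_controlnet_aux\ckpts\lllyasviel\Annotators",
#     dry_run=False,
# )
```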

Hi Andrew, in general, how much GPU do we need to train the flux.1 with ControlNet?

I can’t get mine to work on Forge. I’m getting an error :/

Do these work with the dev model only, or also with schnell?

When I wrote this, Forge didn’t support ControlNet for Flux. This tutorial is for ComfyUI.

To answer your question, this is using the dev model.

no problem and thanks. hope it gets supported soon

Got an error. Looks like I don’t have enough juice. This Flux thing seems to be useless. It only does text-to-image, and the quality is not so much better that it should need this much hardware. Please explain why this should be used.

==================================

# ComfyUI Error Report

## Error Details

- **Node Type:** XlabsSampler

- **Exception Type:** torch.cuda.OutOfMemoryError

- **Exception Message:** Allocation on device 0 would exceed allowed memory. (out of memory)

Currently allocated : 11.15 GiB

Requested : 36.00 MiB

Device limit : 11.99 GiB

Free (according to CUDA): 0 bytes

PyTorch limit (set by user-supplied memory fraction) : 17179869184.00 GiB

## Stack Trace

```
  File "C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\execution.py", line 323, in execute
    output_data, output_ui, has_subgraph = get_output_data(obj, input_data_all, execution_block_cb=execution_block_cb, pre_execute_cb=pre_execute_cb)
    ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\execution.py", line 198, in get_output_data
    return_values = _map_node_over_list(obj, input_data_all, obj.FUNCTION, allow_interrupt=True, execution_block_cb=execution_block_cb, pre_execute_cb=pre_execute_cb)
    ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\execution.py", line 169, in _map_node_over_list
    process_inputs(input_dict, i)
  File "C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\execution.py", line 158, in process_inputs
    results.append(getattr(obj, func)(**inputs))
    ^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\x-flux-comfyui\nodes.py", line 397, in sampling
    inmodel.diffusion_model.to(device)
  File "C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\python_embeded\Lib\site-packages\torch\nn\modules\module.py", line 1152, in to
    return self._apply(convert)
    ^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\python_embeded\Lib\site-packages\torch\nn\modules\module.py", line 802, in _apply
    module._apply(fn)
  File "C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\python_embeded\Lib\site-packages\torch\nn\modules\module.py", line 802, in _apply
    module._apply(fn)
  File "C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\python_embeded\Lib\site-packages\torch\nn\modules\module.py", line 802, in _apply
    module._apply(fn)
  [Previous line repeated 1 more time]
  File "C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\python_embeded\Lib\site-packages\torch\nn\modules\module.py", line 825, in _apply
    param_applied = fn(param)
    ^^^^^^^^^
  File "C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\python_embeded\Lib\site-packages\torch\nn\modules\module.py", line 1150, in convert
    return t.to(device, dtype if t.is_floating_point() or t.is_complex() else None, non_blocking)
    ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
```

## System Information

- **ComfyUI Version:** v0.2.2-37-gcf80d28

- **Arguments:** ComfyUI\main.py --windows-standalone-build

- **OS:** nt

- **Python Version:** 3.11.6 (tags/v3.11.6:8b6ee5b, Oct 2 2023, 14:57:12) [MSC v.1935 64 bit (AMD64)]

- **Embedded Python:** true

- **PyTorch Version:** 2.2.1+cu121

## Devices

- **Name:** cuda:0 NVIDIA GeForce RTX 4070 Ti : cudaMallocAsync

- **Type:** cuda

- **VRAM Total:** 12878086144

- **VRAM Free:** 8516115264

- **Torch VRAM Total:** 3053453312

- **Torch VRAM Free:** 35232576

## Logs

```
2024-09-14 06:48:50,930 - root - INFO - Total VRAM 12282 MB, total RAM 65405 MB
2024-09-14 06:48:50,931 - root - INFO - pytorch version: 2.2.1+cu121
2024-09-14 06:48:50,931 - root - INFO - Set vram state to: NORMAL_VRAM
2024-09-14 06:48:50,931 - root - INFO - Device: cuda:0 NVIDIA GeForce RTX 4070 Ti : cudaMallocAsync
2024-09-14 06:48:52,124 - root - INFO - Using pytorch cross attention
2024-09-14 06:48:52,868 - root - INFO - [Prompt Server] web root: C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\web
2024-09-14 06:48:52,868 - root - INFO - Adding extra search path checkpoints C:\Users\satis\stable-diffusion-webui\models/Stable-diffusion
2024-09-14 06:48:52,868 - root - INFO - Adding extra search path configs C:\Users\satis\stable-diffusion-webui\models/Stable-diffusion
2024-09-14 06:48:52,868 - root - INFO - Adding extra search path vae C:\Users\satis\stable-diffusion-webui\models/VAE
2024-09-14 06:48:52,868 - root - INFO - Adding extra search path loras C:\Users\satis\stable-diffusion-webui\models/Lora
2024-09-14 06:48:52,868 - root - INFO - Adding extra search path loras C:\Users\satis\stable-diffusion-webui\models/LyCORIS
2024-09-14 06:48:52,868 - root - INFO - Adding extra search path upscale_models C:\Users\satis\stable-diffusion-webui\models/ESRGAN
2024-09-14 06:48:52,868 - root - INFO - Adding extra search path upscale_models C:\Users\satis\stable-diffusion-webui\models/RealESRGAN
2024-09-14 06:48:52,868 - root - INFO - Adding extra search path upscale_models C:\Users\satis\stable-diffusion-webui\models/SwinIR
2024-09-14 06:48:52,868 - root - INFO - Adding extra search path embeddings C:\Users\satis\stable-diffusion-webui\embeddings
2024-09-14 06:48:52,868 - root - INFO - Adding extra search path hypernetworks C:\Users\satis\stable-diffusion-webui\models/hypernetworks
2024-09-14 06:48:52,868 - root - INFO - Adding extra search path controlnet C:\Users\satis\stable-diffusion-webui\models/ControlNet
2024-09-14 06:48:54,055 - root - WARNING - Traceback (most recent call last):
  File "C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\nodes.py", line 1994, in load_custom_node
    module_spec.loader.exec_module(module)
  File "", line 940, in exec_module
  File "", line 241, in _call_with_frames_removed
  File "C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\ComfyUI-Inference-Core-Nodes\__init__.py", line 1, in
    from inference_core_nodes import NODE_CLASS_MAPPINGS, NODE_DISPLAY_NAME_MAPPINGS
ModuleNotFoundError: No module named 'inference_core_nodes'
2024-09-14 06:48:54,071 - root - WARNING - Cannot import C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\ComfyUI-Inference-Core-Nodes module for custom nodes: No module named 'inference_core_nodes'
2024-09-14 06:48:55,836 - albumentations.check_version - INFO - A new version of Albumentations is available: 1.4.15 (you have 1.4.7). Upgrade using: pip install --upgrade albumentations
2024-09-14 06:48:56,607 - root - INFO -
Import times for custom nodes:
2024-09-14 06:48:56,607 - root - INFO - 0.0 seconds: C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\websocket_image_save.py
2024-09-14 06:48:56,607 - root - INFO - 0.0 seconds: C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\image-resize-comfyui
2024-09-14 06:48:56,607 - root - INFO - 0.0 seconds: C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\ComfyUI_Nimbus-Pack
2024-09-14 06:48:56,608 - root - INFO - 0.0 seconds (IMPORT FAILED): C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\ComfyUI-Inference-Core-Nodes
2024-09-14 06:48:56,608 - root - INFO - 0.0 seconds: C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\ComfyUI-SAI_API
2024-09-14 06:48:56,608 - root - INFO - 0.0 seconds: C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\ComfyUI_IPAdapter_plus
2024-09-14 06:48:56,608 - root - INFO - 0.0 seconds: C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\ComfyUI_FizzNodes
2024-09-14 06:48:56,608 - root - INFO - 0.0 seconds: C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\comfyui-animatediff
2024-09-14 06:48:56,608 - root - INFO - 0.0 seconds: C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\ComfyUI-Advanced-ControlNet
2024-09-14 06:48:56,608 - root - INFO - 0.0 seconds: C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\x-flux-comfyui
2024-09-14 06:48:56,608 - root - INFO - 0.0 seconds: C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\ComfyUI_essentials
2024-09-14 06:48:56,608 - root - INFO - 0.0 seconds: C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\ComfyUI-AnimateDiff-Evolved
2024-09-14 06:48:56,608 - root - INFO - 0.0 seconds: C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\comfyui_controlnet_aux
2024-09-14 06:48:56,608 - root - INFO - 0.1 seconds: C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\ComfyUI_tinyterraNodes
2024-09-14 06:48:56,608 - root - INFO - 0.1 seconds: C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\ComfyUI-VideoHelperSuite
2024-09-14 06:48:56,608 - root - INFO - 0.2 seconds: C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\ComfyUI-Manager
2024-09-14 06:48:56,608 - root - INFO - 0.3 seconds: C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\ComfyUI-layerdiffuse
2024-09-14 06:48:56,608 - root - INFO - 0.3 seconds: C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\rembg-comfyui-node
2024-09-14 06:48:56,609 - root - INFO - 0.3 seconds: C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\comfy_PoP
2024-09-14 06:48:56,609 - root - INFO - 0.4 seconds: C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\ComfyUI-seam-carving
2024-09-14 06:48:56,609 - root - INFO - 0.7 seconds: C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\ComfyUI_InstantID
2024-09-14 06:48:56,609 - root - INFO - 0.8 seconds: C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\ComfyUI-Impact-Pack
2024-09-14 06:48:56,609 - root - INFO -
2024-09-14 06:48:56,614 - root - INFO - Starting server
2024-09-14 06:48:56,614 - root - INFO - To see the GUI go to: http://127.0.0.1:8188
2024-09-14 06:53:48,104 - root - INFO - got prompt
2024-09-14 06:53:48,545 - root - INFO - Using pytorch attention in VAE
2024-09-14 06:53:48,547 - root - INFO - Using pytorch attention in VAE
2024-09-14 06:53:50,277 - root - WARNING - clip missing: ['text_projection.weight']
2024-09-14 06:53:51,510 - root - INFO - Requested to load FluxClipModel_
2024-09-14 06:53:51,510 - root - INFO - Loading 1 new model
2024-09-14 06:53:52,392 - root - INFO - loaded completely 0.0 4777.53759765625 True
2024-09-14 06:53:53,338 - root - INFO - model weight dtype torch.float8_e4m3fn, manual cast: torch.bfloat16
2024-09-14 06:53:53,338 - root - INFO - model_type FLUX
2024-09-14 06:53:56,497 - root - INFO - Requested to load Flux
2024-09-14 06:53:56,498 - root - INFO - Loading 1 new model
2024-09-14 06:54:00,640 - root - INFO - loaded partially 6702.400402832031 6701.871154785156 0
2024-09-14 06:54:01,373 - root - ERROR - !!! Exception during processing !!! Allocation on device 0 would exceed allowed memory. (out of memory)
Currently allocated : 11.15 GiB
Requested : 36.00 MiB
Device limit : 11.99 GiB
Free (according to CUDA): 0 bytes
PyTorch limit (set by user-supplied memory fraction) : 17179869184.00 GiB
2024-09-14 06:54:01,375 - root - ERROR - Traceback (most recent call last):
  File "C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\execution.py", line 323, in execute
    output_data, output_ui, has_subgraph = get_output_data(obj, input_data_all, execution_block_cb=execution_block_cb, pre_execute_cb=pre_execute_cb)
    ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\execution.py", line 198, in get_output_data
    return_values = _map_node_over_list(obj, input_data_all, obj.FUNCTION, allow_interrupt=True, execution_block_cb=execution_block_cb, pre_execute_cb=pre_execute_cb)
    ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\execution.py", line 169, in _map_node_over_list
    process_inputs(input_dict, i)
  File "C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\execution.py", line 158, in process_inputs
    results.append(getattr(obj, func)(**inputs))
    ^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\ComfyUI\custom_nodes\x-flux-comfyui\nodes.py", line 397, in sampling
    inmodel.diffusion_model.to(device)
  File "C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\python_embeded\Lib\site-packages\torch\nn\modules\module.py", line 1152, in to
    return self._apply(convert)
    ^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\python_embeded\Lib\site-packages\torch\nn\modules\module.py", line 802, in _apply
    module._apply(fn)
  File "C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\python_embeded\Lib\site-packages\torch\nn\modules\module.py", line 802, in _apply
    module._apply(fn)
  File "C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\python_embeded\Lib\site-packages\torch\nn\modules\module.py", line 802, in _apply
    module._apply(fn)
  [Previous line repeated 1 more time]
  File "C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\python_embeded\Lib\site-packages\torch\nn\modules\module.py", line 825, in _apply
    param_applied = fn(param)
    ^^^^^^^^^
  File "C:\Users\satis\OneDrive\Documents\ComfyUI_windows_portable\python_embeded\Lib\site-packages\torch\nn\modules\module.py", line 1150, in convert
    return t.to(device, dtype if t.is_floating_point() or t.is_complex() else None, non_blocking)
    ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
torch.cuda.OutOfMemoryError: Allocation on device 0 would exceed allowed memory. (out of memory)
Currently allocated : 11.15 GiB
Requested : 36.00 MiB
Device limit : 11.99 GiB
Free (according to CUDA): 0 bytes
PyTorch limit (set by user-supplied memory fraction) : 17179869184.00 GiB
2024-09-14 06:54:01,376 - root - ERROR - Got an OOM, unloading all loaded models.
2024-09-14 06:54:03,221 - root - INFO - Prompt executed in 14.90 seconds
```

## Attached Workflow

Please make sure that workflow does not contain any sensitive information such as API keys or passwords.

```
{"last_node_id":49,"last_link_id":117,"nodes":[{"id":39,"type":"PreviewImage","pos":{"0":444,"1":-130},"size":{"0":210,"1":246},"flags":{"collapsed":false},"order":10,"mode":0,"inputs":[{"name":"images","type":"IMAGE","link":113}],"outputs":[],"properties":{"Node name for S&R":"PreviewImage"}},{"id":48,"type":"CannyEdgePreprocessor","pos":{"0":102,"1":-227},"size":{"0":315,"1":106},"flags":{},"order":9,"mode":0,"inputs":[{"name":"image","type":"IMAGE","link":112}],"outputs":[{"name":"IMAGE","type":"IMAGE","links":[113,114,115],"slot_index":0,"shape":3}],"properties":{"Node name for S&R":"CannyEdgePreprocessor"},"widgets_values":[100,200,1024]},{"id":19,"type":"CLIPTextEncodeFlux","pos":{"0":203,"1":167},"size":{"0":400,"1":200},"flags":{},"order":8,"mode":0,"inputs":[{"name":"clip","type":"CLIP","link":27,"slot_index":0}],"outputs":[{"name":"CONDITIONING","type":"CONDITIONING","links":[26],"slot_index":0,"shape":3}],"properties":{"Node name for S&R":"CLIPTextEncodeFlux"},"widgets_values":["bad photo","bad photo",4]},{"id":7,"type":"VAEDecode","pos":{"0":1164,"1":40},"size":{"0":210,"1":46},"flags":{},"order":14,"mode":0,"inputs":[{"name":"samples","type":"LATENT","link":6,"slot_index":0},{"name":"vae","type":"VAE","link":7}],"outputs":[{"name":"IMAGE","type":"IMAGE","links":[101],"slot_index":0,"shape":3}],"properties":{"Node name for S&R":"VAEDecode"}},{"id":8,"type":"VAELoader","pos":{"0":1111,"1":-84},"size":{"0":315,"1":58},"flags":{},"order":0,"mode":0,"inputs":[],"outputs":[{"name":"VAE","type":"VAE","links":[7],"slot_index":0,"shape":3}],"properties":{"Node name for S&R":"VAELoader"},"widgets_values":["ae.safetensors"]},{"id":46,"type":"SaveImage","pos":{"0":621,"1":146},"size":{"0":315,"1":270},"flags":{},"order":12,"mode":2,"inputs":[{"name":"images","type":"IMAGE","link":115}],"outputs":[],"properties":{},"widgets_values":["canny_process"]},{"id":13,"type":"LoadFluxControlNet","pos":{"0":121,"1":-375},"size":{"0":316.83343505859375,"1":86.47058868408203},"flags":{},"order":1,"mode":0,"inputs":[],"outputs":[{"name":"ControlNet","type":"FluxControlNet","links":[44],"slot_index":0,"shape":3}],"properties":{"Node name for S&R":"LoadFluxControlNet"},"widgets_values":["flux-dev-fp8","flux-canny-controlnet-v3.safetensors"]},{"id":3,"type":"XlabsSampler","pos":{"0":948,"1":149},"size":{"0":342.5999755859375,"1":282},"flags":{},"order":13,"mode":0,"inputs":[{"name":"model","type":"MODEL","link":58,"slot_index":0},{"name":"conditioning","type":"CONDITIONING","link":18},{"name":"neg_conditioning","type":"CONDITIONING","link":26},{"name":"latent_image","type":"LATENT","link":66},{"name":"controlnet_condition","type":"ControlNetCondition","link":28}],"outputs":[{"name":"latent","type":"LATENT","links":[6],"shape":3}],"properties":{"Node name for S&R":"XlabsSampler"},"widgets_values":[324242532549,"fixed",25,1,3.5,0,1]},{"id":6,"type":"EmptyLatentImage","pos":{"0":850,"1":449},"size":{"0":315,"1":106},"flags":{},"order":2,"mode":0,"inputs":[],"outputs":[{"name":"LATENT","type":"LATENT","links":[66],"slot_index":0,"shape":3}],"properties":{"Node name for S&R":"EmptyLatentImage"},"widgets_values":[832,1216,1]},{"id":23,"type":"SaveImage","pos":{"0":1433,"1":-194},"size":{"0":433.7451171875,"1":469.8033447265625},"flags":{},"order":15,"mode":0,"inputs":[{"name":"images","type":"IMAGE","link":101}],"outputs":[],"properties":{},"widgets_values":["canny_res"]},{"id":14,"type":"ApplyFluxControlNet","pos":{"0":546,"1":-264},"size":{"0":393,"1":98},"flags":{},"order":11,"mode":0,"inputs":[{"name":"controlnet","type":"FluxControlNet","link":44},{"name":"image","type":"IMAGE","link":114,"slot_index":1},{"name":"controlnet_condition","type":"ControlNetCondition","link":null}],"outputs":[{"name":"controlnet_condition","type":"ControlNetCondition","links":[28],"slot_index":0,"shape":3}],"properties":{"Node name for S&R":"ApplyFluxControlNet"},"widgets_values":[0.7000000000000001]},{"id":32,"type":"UNETLoader","pos":{"0":502,"1":452},"size":{"0":315,"1":82},"flags":{},"order":3,"mode":0,"inputs":[],"outputs":[{"name":"MODEL","type":"MODEL","links":[58],"slot_index":0,"shape":3}],"properties":{"Node name for S&R":"UNETLoader"},"widgets_values":["flux1-dev-fp8.safetensors","fp8_e4m3fn"]},{"id":5,"type":"CLIPTextEncodeFlux","pos":{"0":763,"1":-45},"size":{"0":286.951416015625,"1":103.62841796875},"flags":{},"order":7,"mode":0,"inputs":[{"name":"clip","type":"CLIP","link":2,"slot_index":0},{"name":"clip_l","type":"STRING","link":116,"widget":{"name":"clip_l"}},{"name":"t5xxl","type":"STRING","link":117,"widget":{"name":"t5xxl"}}],"outputs":[{"name":"CONDITIONING","type":"CONDITIONING","links":[18],"slot_index":0,"shape":3}],"properties":{"Node name for S&R":"CLIPTextEncodeFlux"},"widgets_values":["cyberpank girl, full hd, cinematic, abandoned factory, mystery, dangereous, studio light on face, light gray army outfit, stocking, boot, pink hair","cyberpank girl, full hd, cinematic, abandoned factory, mystery, dangereous, studio light on face, light gray army outfit, stocking, boot, pink hair",4]},{"id":49,"type":"PrimitiveNode","pos":{"0":964,"1":-287},"size":{"0":414.8598937988281,"1":147.0726776123047},"flags":{},"order":4,"mode":0,"inputs":[],"outputs":[{"name":"STRING","type":"STRING","links":[116,117],"slot_index":0,"widget":{"name":"clip_l"}}],"title":"Prompt","properties":{"Run widget replace on values":"on"},"widgets_values":["cyberpank girl, full hd, cinematic, abandoned factory, mystery, dangereous, studio light on face, light gray army outfit, stocking, boot, pink hair"]},{"id":4,"type":"DualCLIPLoader","pos":{"0":104,"1":-79},"size":{"0":315,"1":106},"flags":{},"order":5,"mode":0,"inputs":[],"outputs":[{"name":"CLIP","type":"CLIP","links":[2,27],"slot_index":0,"shape":3}],"properties":{"Node name for S&R":"DualCLIPLoader"},"widgets_values":["clip_l.safetensors","t5xxl_fp8_e4m3fn.safetensors","flux"]},{"id":16,"type":"LoadImage","pos":{"0":-253,"1":-229},"size":{"0":315,"1":314},"flags":{},"order":6,"mode":0,"inputs":[],"outputs":[{"name":"IMAGE","type":"IMAGE","links":[112],"slot_index":0,"shape":3},{"name":"MASK","type":"MASK","links":null,"shape":3}],"properties":{"Node name for S&R":"LoadImage"},"widgets_values":["17038-41188503-full-body-woman.png","image"]}],"links":[[2,4,0,5,0,"CLIP"],[6,3,0,7,0,"LATENT"],[7,8,0,7,1,"VAE"],[18,5,0,3,1,"CONDITIONING"],[26,19,0,3,2,"CONDITIONING"],[27,4,0,19,0,"CLIP"],[28,14,0,3,4,"ControlNetCondition"],[44,13,0,14,0,"FluxControlNet"],[58,32,0,3,0,"MODEL"],[66,6,0,3,3,"LATENT"],[101,7,0,23,0,"IMAGE"],[112,16,0,48,0,"IMAGE"],[113,48,0,39,0,"IMAGE"],[114,48,0,14,1,"IMAGE"],[115,48,0,46,0,"IMAGE"],[116,49,0,5,1,"STRING"],[117,49,0,5,2,"STRING"]],"groups":[],"config":{},"extra":{"ds":{"scale":1.2100000000000006,"offset":[566.6160305853425,338.6646835803564]}},"version":0.4}
```

## Additional Context

(Please add any additional context or steps to reproduce the error here)

Try Forge if you have low VRAM. Flux’s image quality and prompt adherence are better than SDXL’s.

1) I mean you can just say you’re running out of memory, no need to paste 10 pages of Python logs too in your message…

2) You can absolutely run Flux with 12 GB VRAM, I do with my 3080 and 10 GB. You just have to use a quantized version, there’s multiple alternatives (GGUF, nf4, fp8) that allow running Flux already at 8 GB.

3) Describing Flux as “useless” and “not enough high quality” because you’re failing to run it (while you can) is a bit laughable. Flux has the absolutely best quality compared to any other image generating model currently, doubly so when you take into account its prompt understanding. It’s the next step in the evolution of image generation, the new “Stable Diffusion”.
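To see why quantization helps, here is a back-of-the-envelope estimate of the memory needed for the model weights alone at different precisions. The figure of about 12 billion parameters for Flux.1 dev is an assumption not stated in this thread. Real usage is higher because of activations, the text encoders, the VAE, and quantization metadata, so treat the numbers as rough lower bounds.

```python
def weight_size_gib(num_params, bits_per_param):
    """Approximate size of the model weights in GiB."""
    return num_params * bits_per_param / 8 / 2**30


PARAMS = 12e9  # assumption: roughly 12 billion parameters

for name, bits in [("fp16/bf16", 16), ("fp8", 8), ("4-bit (e.g. nf4)", 4)]:
    print(f"{name}: about {weight_size_gib(PARAMS, bits):.1f} GiB")
```

Halving the bits per parameter halves the weight footprint, which is how a 4-bit model can fit on cards that cannot hold the fp8 or fp16 weights.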

Is the Flux model text-to-image only? The checkpoint does not work with IPAdapter or InstantID.

It also supports image-to-image and inpainting: https://stable-diffusion-art.com/flux-img2img-inpainting/

Really appreciate you publishing these. Comfy looks more complicated than Auto; currently using Forge through Pinokio but I’ll try your tut through Pinokio’s Comfy UI as a trial. I have a lower end laptop gpu (6 gb 4050), but I’m making art so amazing I’m printing some for my place. So, thanks again for all the help! It’s bleeding edge tutorials and I’m here for it.

You are welcome!

What about using union control with flux? That could be a good addition to this article.

Not trying to discourage you from trying Comfy, but be prepared for a long adjustment period before you reach the level you have at Forge now.

It’s a completely different system with only a few similarities (both interface- and server-wise).

I’m in the same process, decided to give Comfy a decent try coming from Forge. It’s been a week now and I’m barely starting to adjust to Comfy’s nodal nature. I don’t mean understanding how nodes work, more like thinking artistically in node “architecture” mode, how to translate my ideas to a node-based format.

I mean sure you can do simple stuff like txt2img and img2img simple workflows, but those you can already do in Forge much more easy and uncomplicated. But as I said, after a week or so of constant use, I’ve barely scratched the surface, but done so enough to start understanding why Comfy is worth the effort put in learning it.

Do you think that SwarmUI with the integrated Comfy could be an easier way to start learning all this?

This site has a ComfyUI course if both of you want to learn 🙂 https://stable-diffusion-art.com/stable-diffusion-courses/