Flux AI is the best open-source AI image generator you can run locally on your PC (as of August 2024). However, the 12-billion-parameter model needs a lot of VRAM to run. Don’t have a beefy GPU? Don’t worry. You can now run Flux AI on a GPU with as little as 6 GB of VRAM.

You will need to use SD Forge WebUI.


What is Forge?

SD Forge is a fork of the popular AUTOMATIC1111 Stable Diffusion WebUI. The backend was rewritten to optimize speed and GPU VRAM consumption. If you are familiar with A1111, it is easy to switch to using Forge.

You can use Forge on Windows, Mac, or Google Colab.

If you are new to Stable Diffusion, check out the Quick Start Guide.

Take the Stable Diffusion course to build solid skills and understanding.

What is the low VRAM NF4 Flux model?

The 4-bit NormalFloat (NF4) Flux model uses 4-bit quantization to achieve a smaller memory footprint and faster speed. It is based on the quantization scheme introduced with QLoRA, a method developed for fine-tuning large language models. NF4 is a data type designed to be information-theoretically optimal for normally distributed weights.

The speed-up is more significant on low-VRAM machines.
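To make the idea of a 4-bit data type for normally distributed weights more concrete, here is a minimal sketch in plain Python. It is a simplification and not the actual NF4 implementation: the 16 levels are taken from evenly spaced quantiles of a standard normal distribution, whereas the real NF4 table is built differently (for example, it includes an exact zero). The function names are made up for this illustration.

```python
from statistics import NormalDist


def build_normal_codebook(bits=4):
    """Build 2**bits levels from quantiles of N(0, 1), scaled to [-1, 1].

    Simplified stand-in for the NF4 table, for illustration only.
    """
    n = 2 ** bits
    # Mid-point probabilities avoid asking for the 0% or 100% quantile.
    probs = [(i + 0.5) / n for i in range(n)]
    levels = [NormalDist().inv_cdf(p) for p in probs]
    peak = max(abs(v) for v in levels)
    return [v / peak for v in levels]


def quantize_block(weights, codebook):
    """Map each weight to the index of its nearest level (absmax scaling)."""
    scale = max(abs(w) for w in weights) or 1.0
    indices = [
        min(range(len(codebook)), key=lambda i: abs(codebook[i] - w / scale))
        for w in weights
    ]
    return indices, scale


def dequantize_block(indices, scale, codebook):
    """Recover approximate weights from 4-bit indices and the block scale."""
    return [codebook[i] * scale for i in indices]


codebook = build_normal_codebook()
block = [0.02, -0.5, 0.31, 0.0, 0.75, -0.12]
indices, scale = quantize_block(block, codebook)
restored = dequantize_block(indices, scale, codebook)
print(indices)
print([round(v, 3) for v in restored])
```

Each weight is stored as a 4-bit index plus one shared scale per block, which is where the memory saving comes from. The levels are packed more densely near zero, where most normally distributed weights sit.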

Use Flux AI NF4 model on Forge

Step 1: Update Forge

The support for Flux is relatively new. You will need to update Forge before using it.

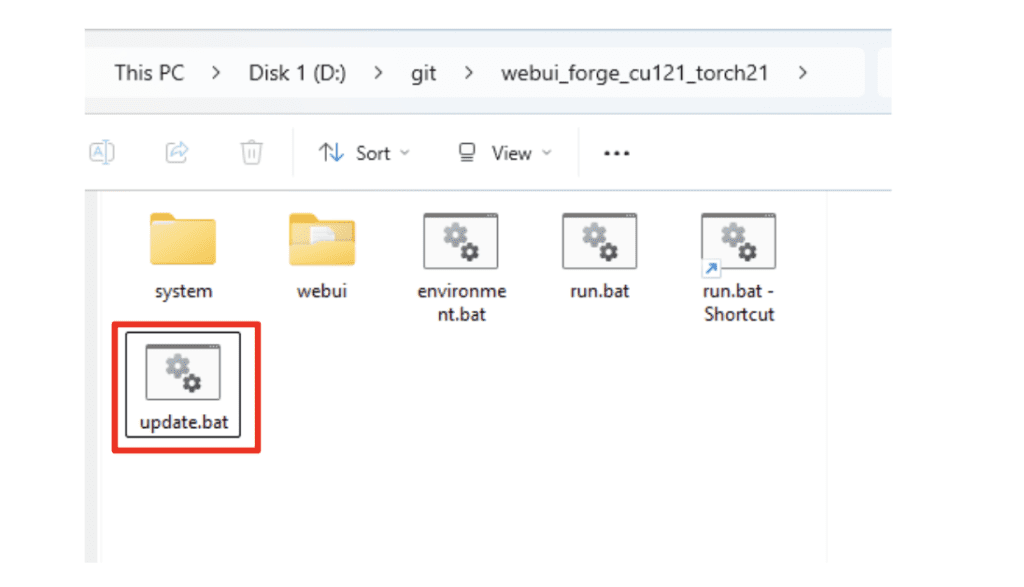

If you use the standalone installation package, double-click the file update.bat in the Forge installation folder webui_forge_cuXXX_torchXXX.

Step 2: Download the Flux AI model

There are two download options:

- Flux1 dev FP8 – This checkpoint file is the same as the one for ComfyUI.

- Flux1 dev NF4 – This version is smaller and faster if you have a low-VRAM machine (6 GB, 8 GB, or 12 GB).

Download one of them and put it in the folder webui_forge_cuXXX_torchXXX > webui > models > Stable-diffusion.
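If you want to double-check that the file landed in the right place, a small script like the one below can list the checkpoints Forge should see. This is only a convenience sketch: `list_checkpoints` is a hypothetical helper, and `forge_root` stands for wherever you extracted the webui_forge_cuXXX_torchXXX folder.

```python
from pathlib import Path


def list_checkpoints(forge_root):
    """Return the names of .safetensors files in Forge's checkpoint folder."""
    model_dir = Path(forge_root) / "webui" / "models" / "Stable-diffusion"
    if not model_dir.is_dir():
        return []  # Folder not found: the root path is probably wrong.
    return sorted(p.name for p in model_dir.glob("*.safetensors"))


# Example (replace the path with your own installation folder):
# print(list_checkpoints(r"C:\webui_forge_cuXXX_torchXXX"))
```

An empty list means either the installation path is wrong or the model file is not in the Stable-diffusion folder.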

Tip: If you have already downloaded the FP8 model for ComfyUI and are happy with the VRAM usage, you don’t need to download the NF4 model.
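A back-of-the-envelope calculation shows why the precision matters so much for a 12-billion-parameter model. The sketch below only counts the main model weights. It is an estimate, not a measurement: actual checkpoint files can be larger because they may also bundle the text encoders and the VAE, and quantized formats store some extra scaling data.

```python
def weight_size_gb(num_params, bits_per_param):
    """Approximate size of the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


FLUX_PARAMS = 12e9  # the "12-billion parameter model" mentioned above

for name, bits in [("FP16", 16), ("FP8", 8), ("NF4", 4)]:
    print(f"{name}: ~{weight_size_gb(FLUX_PARAMS, bits):.0f} GB")
```

This prints roughly 24 GB for FP16, 12 GB for FP8, and 6 GB for NF4. Forge can also move parts of the model between VRAM and system RAM, so these numbers are a guide to relative size rather than a hard VRAM requirement.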

Step 3: Generate an image

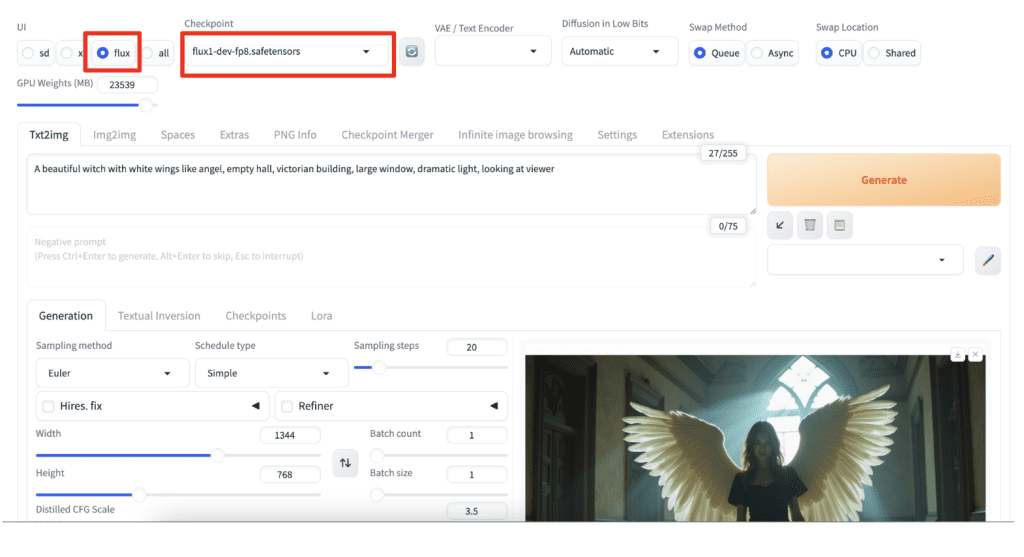

In SD Forge WebUI, select Flux under UI. Then select a Flux model in the Checkpoint dropdown menu.

Enter a prompt, e.g.

A beautiful witch with white wings like angel, empty hall, victorian building, large window, dramatic light, looking at viewer

Set the image size:

- 1024 x 1024 (Square)

- 1216 x 832 (3:2)

- 1344 x 768 (16:9)

Click Generate to generate an image.

Note: Negative prompts are not supported in the Flux model. Set the CFG scale to 1.
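If you prefer scripting to clicking, the same settings can be expressed as a request to the WebUI's HTTP API. Treat this as a sketch under assumptions: it assumes Forge was started with the `--api` flag and exposes the same `/sdapi/v1/txt2img` endpoint as A1111, that the Flux checkpoint is already selected in the UI, and that the server runs at the default local address. The step count of 20 is an arbitrary placeholder, not a recommendation from this guide.

```python
import json
import urllib.request


def build_txt2img_payload(prompt, width=1024, height=1024, steps=20):
    """Build a request body that mirrors the settings described above."""
    return {
        "prompt": prompt,
        "negative_prompt": "",  # negative prompts are not supported by Flux
        "width": width,
        "height": height,
        "steps": steps,         # assumption: pick whatever you use in the UI
        "cfg_scale": 1,         # CFG scale should be 1 for Flux
    }


def generate(payload, base_url="http://127.0.0.1:7860"):
    """Send the payload to a locally running WebUI and return the JSON reply."""
    request = urllib.request.Request(
        f"{base_url}/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


payload = build_txt2img_payload(
    "A beautiful witch with white wings like angel, empty hall, "
    "victorian building, large window, dramatic light, looking at viewer",
    width=1216,
    height=832,
)
print(json.dumps(payload, indent=2))
# result = generate(payload)  # uncomment when the WebUI is running with --api
```

In A1111, the reply contains the generated images as base64-encoded strings. Whether Forge behaves identically for Flux is something to verify on your own installation.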

Tips

You can reduce the VRAM usage even more by generating smaller, SD 1.5-sized images:

- 512 x 512 (1:1)

- 512 x 768 (2:3)
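To get a feel for how much smaller these sizes are, the short sketch below compares their pixel counts with 1024 x 1024. Pixel count is only a rough proxy for the working memory needed during generation. The model weights take the same space regardless of image size, so total VRAM usage will not drop by the same factor.

```python
def relative_pixels(width, height, ref=(1024, 1024)):
    """Pixel count of an image relative to a reference size."""
    return (width * height) / (ref[0] * ref[1])


for w, h in [(1024, 1024), (512, 768), (512, 512)]:
    print(f"{w} x {h}: {relative_pixels(w, h):.3f}x the pixels of 1024 x 1024")
```

A 512 x 512 image has a quarter of the pixels of a 1024 x 1024 image, and 512 x 768 has three eighths.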

Useful resources

Flux: Loading T5, CLIP + new VAE UI in SD Forge

[Major Update] BitsandBytes Guidelines and Flux

NF4 Checkpoint: flux1-dev-bnb-nf4-v2.safetensors

FP8 Checkpoint: flux1-dev-fp8.safetensors

This is fantastic! It’s going slow on my 8GB GTX 1080 and 64GB system RAM, but the results are STUNNING!

nf4 works on my Laptop RTX 4070 with 8GB – Great!

(Even with Hires. fix … very slow but working)

RuntimeError: Error(s) in loading state_dict for Flux:

size mismatch for img_in.weight: copying a param with shape torch.Size([98304, 1]) from checkpoint, the shape in current model is torch.Size([3072, 0]).

size mismatch for time_in.in_layer.weight: copying a param with shape torch.Size([393216, 1]) from checkpoint, the shape in current model is torch.Size([3072, 256]).

size mismatch for time_in.out_layer.weight: copying a param with shape torch.Size([4718592, 1]) from checkpoint, the shape in current model is torch.Size([3072, 3072]).

size mismatch for vector_in.in_layer.weight: copying a param with shape torch.Size([1179648, 1]) from checkpoint, the shape in current model is torch.Size([3072, 768]).

size mismatch for vector_in.out_layer.weight: copying a param with shape torch.Size([4718592, 1]) from checkpoint, the shape in current model is torch.Size([3072, 3072]).

The error message is like this and very long, and at the end it says:

“Stable diffusion model failed to load”

But the models are in the correct folders.

Do you know how I can configure these values correctly or where they are stored?

Am I doing something wrong?

It seems the model file is incorrect. Try redownloading it.
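As a rough sanity check on the log above, you can compare the element counts of the shapes in the checkpoint with the shapes the model expects. The sketch below copies four of the reported mismatches (the img_in line is left out because its expected shape reads [3072, 0] in the log). If every ratio comes out as exactly 2, that is consistent with two 4-bit values being packed into each stored byte. This is an observation about the numbers, not a confirmed diagnosis of the error.

```python
# (name, shape found in the checkpoint, shape expected by the model)
MISMATCHES = [
    ("time_in.in_layer.weight", (393216, 1), (3072, 256)),
    ("time_in.out_layer.weight", (4718592, 1), (3072, 3072)),
    ("vector_in.in_layer.weight", (1179648, 1), (3072, 768)),
    ("vector_in.out_layer.weight", (4718592, 1), (3072, 3072)),
]


def numel(shape):
    """Total number of elements in a tensor of the given shape."""
    total = 1
    for dim in shape:
        total *= dim
    return total


def packing_ratio(stored, expected):
    """How many expected elements correspond to one stored element."""
    return numel(expected) / numel(stored)


for name, stored, expected in MISMATCHES:
    print(f"{name}: {packing_ratio(stored, expected)}")
```

All four ratios are 2.0, which would fit an NF4 checkpoint being read by code that expects unquantized weights. If redownloading does not help, updating Forge as described in Step 1 may be worth trying.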

Python 3.10.6 (tags/v3.10.6:9c7b4bd, Aug 1 2022, 21:53:49) [MSC v.1932 64 bit (AMD64)]

Version: f2.0.1v1.10.1-previous-624-gc9850c84

Commit hash: c9850c84362cee0acc4ceb6546f73de59537d167

Traceback (most recent call last):

File "E:\Generative Ai Models\webui_forge_cu121_torch231\webui\launch.py", line 54, in

main()

File "E:\Generative Ai Models\webui_forge_cu121_torch231\webui\launch.py", line 42, in main

prepare_environment()

File "E:\Generative Ai Models\webui_forge_cu121_torch231\webui\modules\launch_utils.py", line 436, in prepare_environment

raise RuntimeError(

RuntimeError: Your device does not support the current version of Torch/CUDA! Consider download another version:

https://github.com/lllyasviel/stable-diffusion-webui-forge/releases/tag/latest

I am having this problem. I was looking for a CUDA version compatible with my GPU, and then I found out I have an AMD RX 5600 XT GPU and CUDA only supports Nvidia GPUs. Is there any way to run this on an AMD GPU? Or is it some other problem?

You will need a special setup to use an AMD GPU. If you don’t want to spend time monkeying with the setup, I would suggest using Google Colab or an online service.

When I run it, I get a black screen as the generated image. Any fix for it?

Fixed, no problem now.

Well, I’ve tried hard: a 1060 with 6 GB VRAM through Forge WebUI using the NF4 model.

Results:

Any checkpoint based on SD 1.5: a 512×512 image at 40 steps takes 50 secs to run.

Flux NF4: 512×512, 10 steps, 9 minutes to run.

In Flux mode, my GPU power is barely used, by the way. The VRAM is taken, but only 16% of the GPU power is used.

A similar thing happened long ago when XL came out and people started pointing out that it could run on low VRAM. I tried the low-VRAM XL method, and it took ages to run and the result was always a terrible image.

Hi Andrew,

I’m using Stable Diffusion via Fooocus 2.5.5 and 6GB Nvidia VRAM. Any chance to benefit from Flux within this environment?

BR, Bernd

I have seen people who are able to.

Thanks, Andrew. I hope it can be used with 6 GB VRAM (slow processing time would not be a problem), but how about using it with Fooocus instead of ComfyUI, SD Forge or Automatic1111… and if it can be used, how could I integrate it into the Fooocus environment of my Windows PC?

I don’t think Fooocus and A1111 support Flux. Forge is very similar to A1111 and you can configure it to share models with A1111 to save space. There’s little reason not to install it.

Thanks, Andrew – I’ll give it a try with Forge & Flux…

Hi, when I try to generate with both models, it shows this error message: TypeError: Trying to convert Float8_e4m3fn to the MPS backend but it does not have support for that dtype.

May I know how to fix this? Thank you.

Also, when I try to generate an image using a 1.5 or XL model, it generates a black image.

And may I know how to update the Forge UI on Mac? I cannot find the update.bat file.

You seem to be using a Mac. You can try this: https://stable-diffusion-art.com/flux-mac/

Hi Andrew,

Thanks for your article as always. I have a couple of questions:

1. Do we need to install any VAE for this model? I tried to read some of the links that you have shared, but they don’t seem to really indicate the usage of a VAE. Some said the VAE is already baked in as part of the Flux1 dev NF4 model, but I can’t seem to find a source that confirms this.

2. Is Flux compatible with a PC with 4 GB VRAM? I have tried, and it seems to be running fine. In fact, it was able to generate the image in less than 5 minutes, which is surprising to me, since I would have expected the image generation to be much slower.

1. The VAE is already baked into the Flux1 dev NF4 model. I confirmed this by opening the safetensors file in Python.

2. If you can run it then it works! The author of Forge has done a great job in managing and reducing the memory footprint.
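For readers who want to repeat the check in point 1, here is one way to inspect a safetensors file in Python without loading any weights. A safetensors file starts with an 8-byte little-endian length, followed by a JSON header that lists every tensor name. The helper names below are hypothetical, and the `"vae."` prefix is an assumption about how the VAE tensors are named, so print the keys first and look for the actual prefix in your file.

```python
import json
import struct


def read_safetensors_keys(path):
    """Return the tensor names stored in a .safetensors file header."""
    with open(path, "rb") as f:
        (header_len,) = struct.unpack("<Q", f.read(8))  # little-endian uint64
        header = json.loads(f.read(header_len))
    # "__metadata__" is an optional free-form entry, not a tensor.
    return sorted(k for k in header if k != "__metadata__")


def has_prefix(keys, prefix):
    """True if any tensor name starts with the given prefix."""
    return any(k.startswith(prefix) for k in keys)


# Example (adjust the path to where you saved the checkpoint):
# keys = read_safetensors_keys("flux1-dev-bnb-nf4-v2.safetensors")
# print(len(keys), keys[:10])
# print(has_prefix(keys, "vae."))  # assumed prefix, check against the output
```

Only the header is read, so this runs quickly even on a multi-gigabyte checkpoint.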

Thanks for this Andrew, it got me curious to see whether Flux would run on Forge with no GPU at all. Long story short: it didn’t (with my Ubuntu desktop). I just got a stack trace with “mat1 and mat2 shapes cannot be multiplied (1024×64 and 1×98304)”. I thought this strange as I was expecting ferocious core dumps rather than this familiar hiccup. Forge carried on gracefully nonetheless; with other non-Flux models.

(I subsequently tried out Flux at huggingface. I’m still reeling at its ability to produce realistic images that accord so well with my intentions.)

Yeah, the NF4 checkpoint is only for GPUs. You may be able to run the FP8 or full checkpoint on CPU, but it is going to be very slow…

Thanks kindly for the suggestion Andrew, just had to give it a go but, sadly, not so graceful with FP8; pulled the server over in short order. No stack trace even. Not even with “Never OOM” enabled. Rude! 🙂

Update: at 512×512 FP8 runs fine; ~20mins/img + extra for correcting any faces!

Wow, running flux on cpu is quite an achievement!

Hi Andrew,

I have been running Forge for some time. A short while ago, lllyasviel began doing some experimental work and many of us encountered huge issues, so much so that he set up a link to a previous version that was fine and said folks should use that and simply not update. This is the version in question: https://github.com/lllyasviel/stable-diffusion-webui-forge/releases/download/previous/webui_forge_cu121_torch21_f0017.7z And so I did that. Is it safe to update to the latest version now? I would hate to do so and end up with the same problem again.

I’m using version f2.0.1v1.10.1-previous-317-g4bb56139. It seems to work fine.