This is a step-by-step guide for using the Google Colab notebook in the Quick Start Guide to run AUTOMATIC1111. This is one of the easiest ways to use AUTOMATIC1111 because you don’t need to deal with the installation.

See installation instructions on Windows PC and Mac if you prefer to run locally.

This notebook is designed to share models in Google Drive with the following notebooks.

Google has blocked usage of Stable Diffusion with a free Colab account. You need a paid plan to use this notebook.

Table of Contents

- What is AUTOMATIC1111?

- What is Google Colab?

- Alternatives

- Step-by-step instructions to run the Colab notebook

- ngrok (Optional)

- When you are done

- Computing resources and compute units

- Models available

- Other models

- Installing models

- Installing extensions from URL

- Extra arguments to webui

- Version

- Secrets

- Extensions

- Frequently asked questions

- Next Step

What is AUTOMATIC1111?

Stable Diffusion is a machine-learning model. It is not very user-friendly by itself. You need to write codes to use it. Most users use a GUI (Graphical User Interface). Instead of writing codes, we write prompts in a text box and click buttons to generate images.

AUTOMATIC1111 is one of the first Stable Diffusion GUIs developed. It supports standard AI functions like text-to-image, image-to-image, upscaling, ControlNet, and even training models (although I won’t recommend it).

What is Google Colab?

Google Colab (Google Colaboratory) is an interactive computing service offered by Google. It is a Jupyter Notebook environment that allows you to execute code.

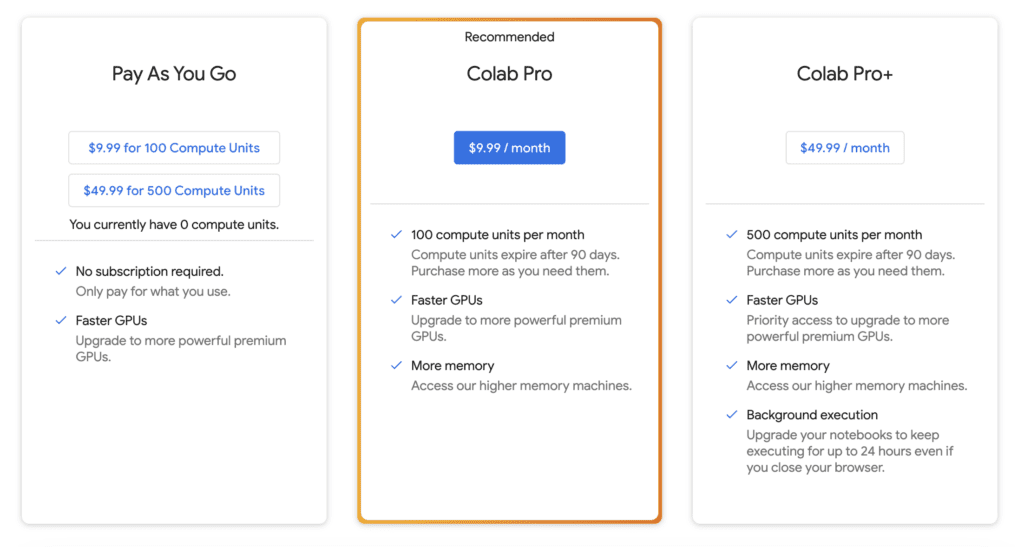

They have three paid plans – Pay As You Go, Colab Pro, and Colab Pro+. You need the Pro or Pro+ Plan to use all the models. I recommend using the Colab Pro plan. It gives you 100 compute units per month, which is about 50 hours per standard GPU. (It’s a steal)

With a paid plan, you have the option to use Premium GPU. It is an A100 processor. That comes in handy when you need to train Dreambooth models fast.

When you use Colab for AUTOMATIC1111, be sure to disconnect and shut down the notebook when you are done. It will consume compute units when the notebook is kept open.

You will need to sign up with one of the plans to use the Stable Diffusion Colab notebook. They have blocked the free usage of AUTOMATIC1111.

Alternatives

Think Diffusion provides fully managed AUTOMATIC1111/Forge/ComfyUI web service. They cost a bit more than Colab but save you from the trouble of installing models and extensions and faster startup time. They offer 20% extra credit to our readers. (Affiliate link)

Step-by-step instructions to run the Colab notebook

Step 0. Sign up for a Colab Pro or a Pro+ plans. (I use Colab Pro.)

Step 1. Open the Colab notebook in Quick Start Guide.

Access the notebook below if you are a member of this site.

You should see the notebook with the second cell below.

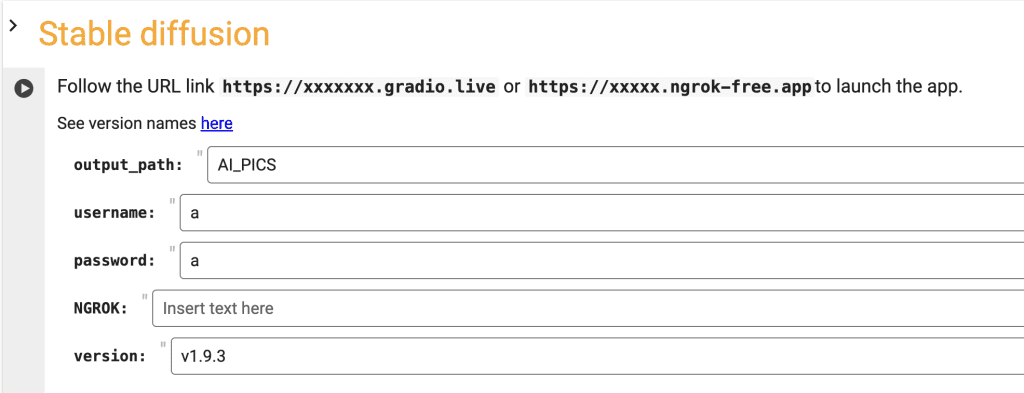

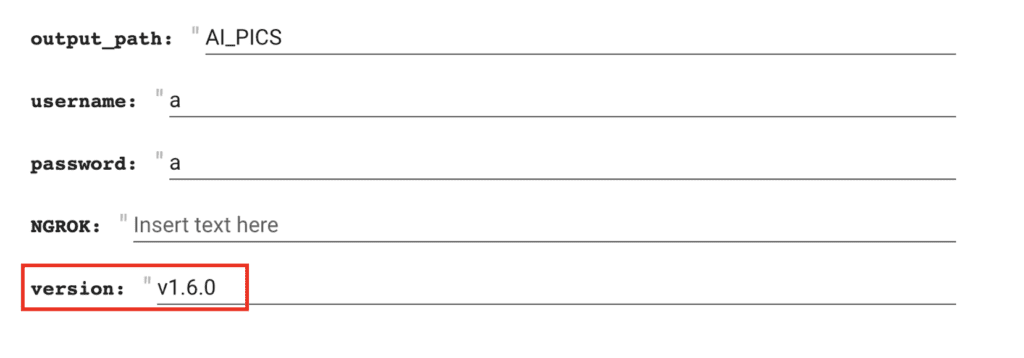

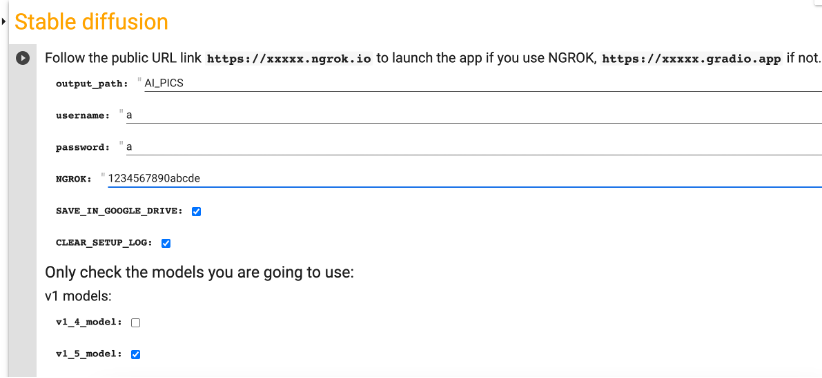

Step 2. Set the username and password. You will need to enter them before using AUTOMATIC11111.

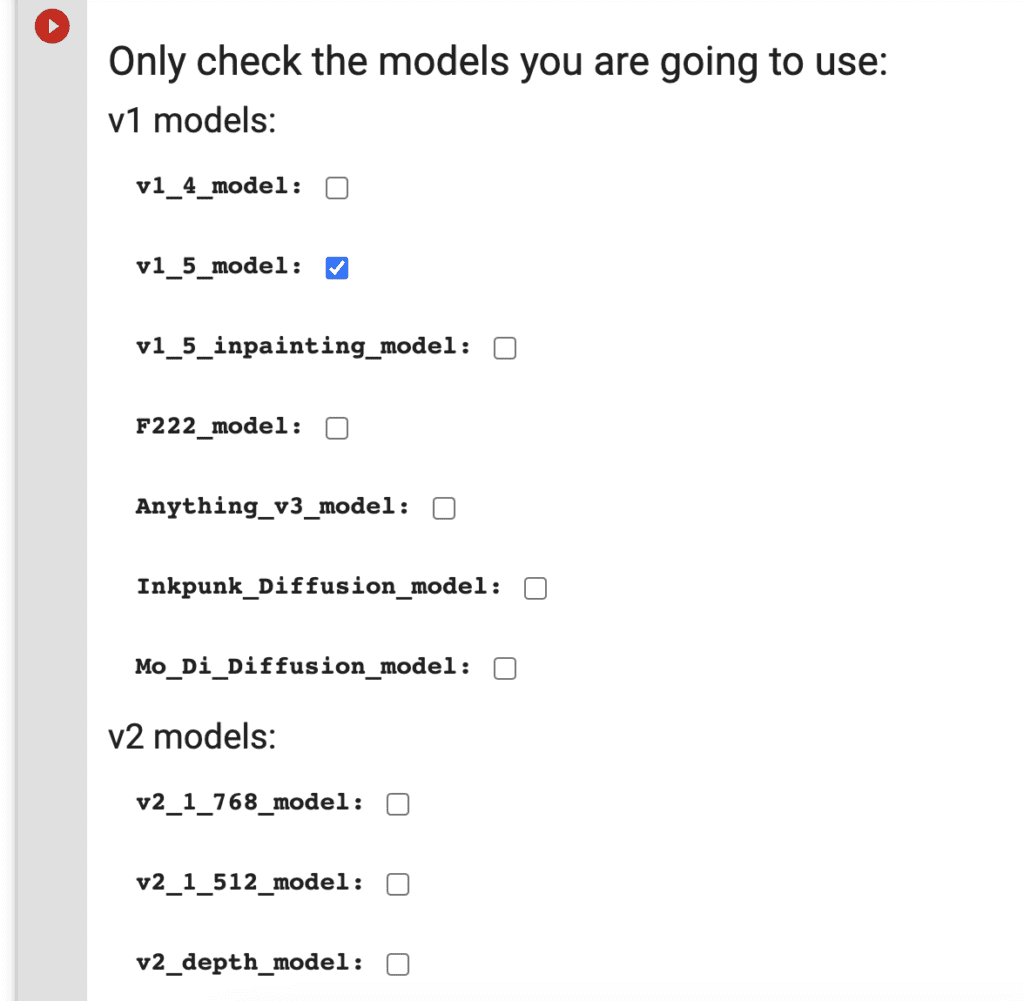

Step 3. Check the models you want to load. If you are a first-time user, you can use the default settings.

Step 4. Click the Play button on the left of the cell to start.

Step 5. It will install A1111 and models in the the Colab envirnoment.

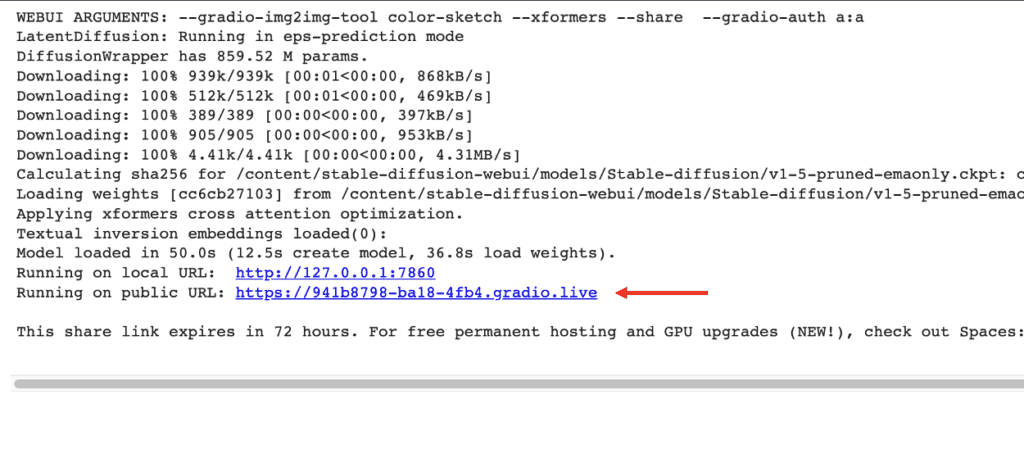

Step 6. Follow the gradio.live link to start AUTOMATIC1111.

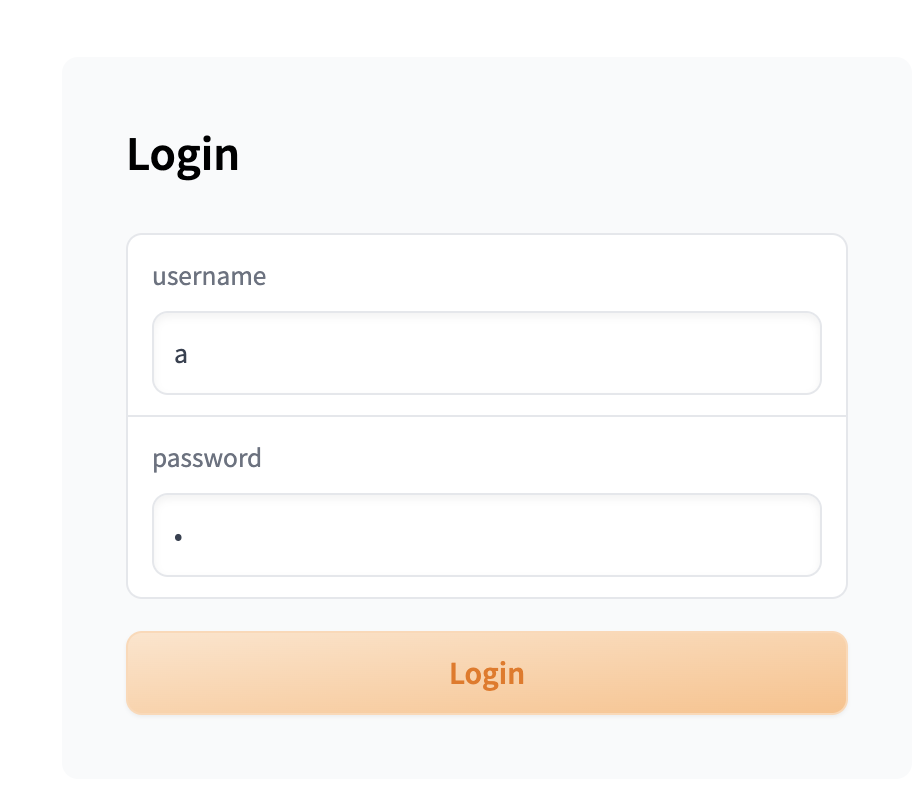

Step 7. Enter the username and password you specified in the notebook.

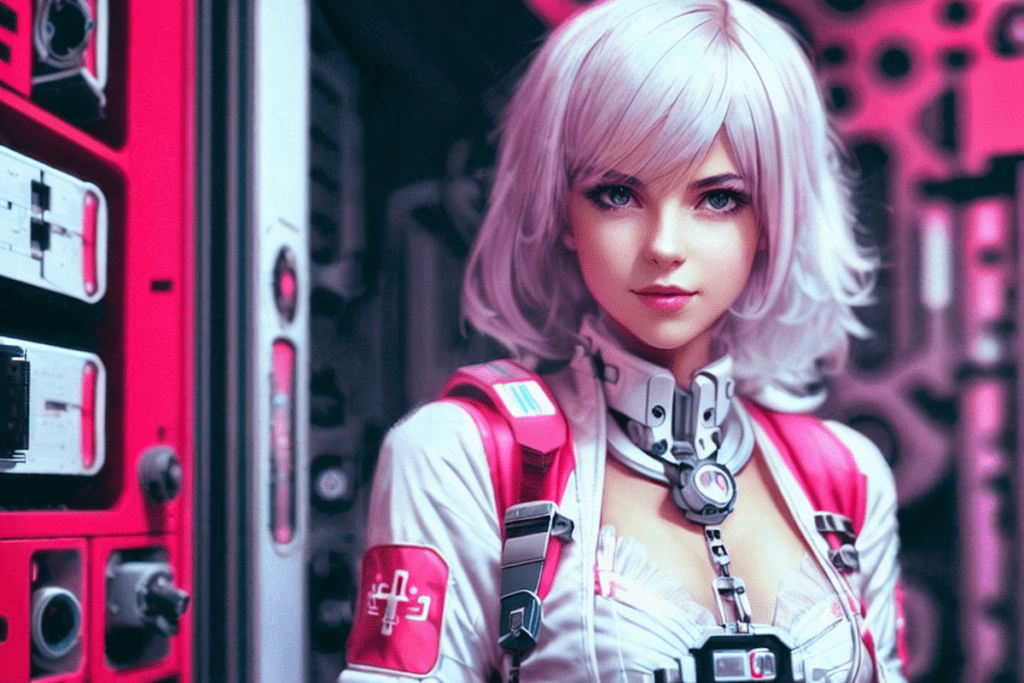

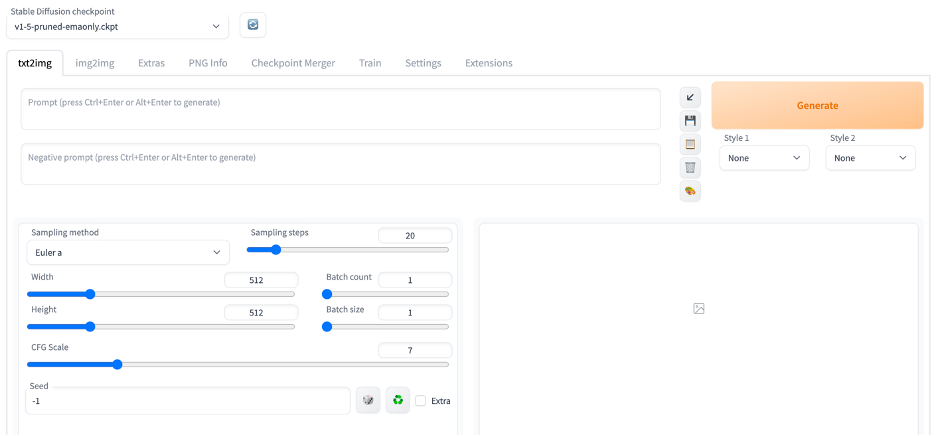

Step 8. You should see the AUTOMATIC1111 GUI after you log in.

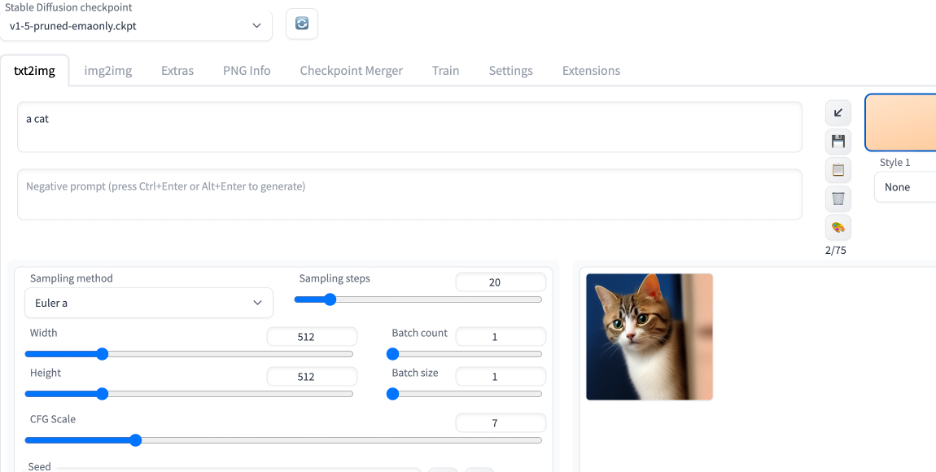

Put in “a cat” in the prompt text box and press Generate to test using Stable Diffusion. You should see it generates an image of a cat.

ngrok (Optional)

If you run into display issues with the GUI, you can try using ngrok instead of Gradio to establish the public connection. It is a more stable alternative to the default gradio connection.

You will need to set up a free account and get an authoken.

- Go to https://ngrok.com/

- Create an account

- Verify email

- Copy the authoken from https://dashboard.ngrok.com/get-started/your-authtoken and paste in the ngrok field in the notebook.

The Stable Diffusion cell in the notebook should look like below after you put in your ngrok authtoken.

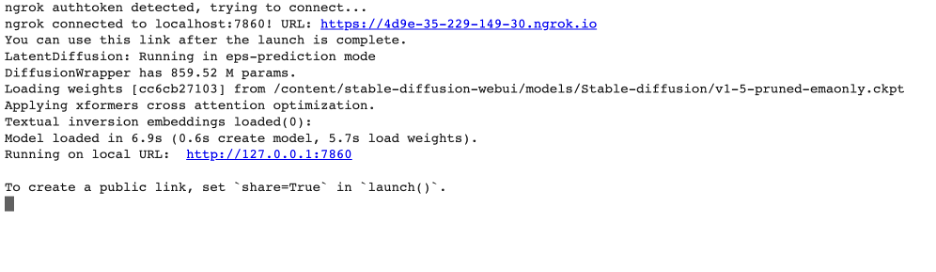

Click the play button on the left to start running. When it is done loading, you will see a link to ngrok.io in the output under the cell. Click the ngrok.io link to start AUTOMATIC1111. The first link in the example output below is the ngrok.io link.

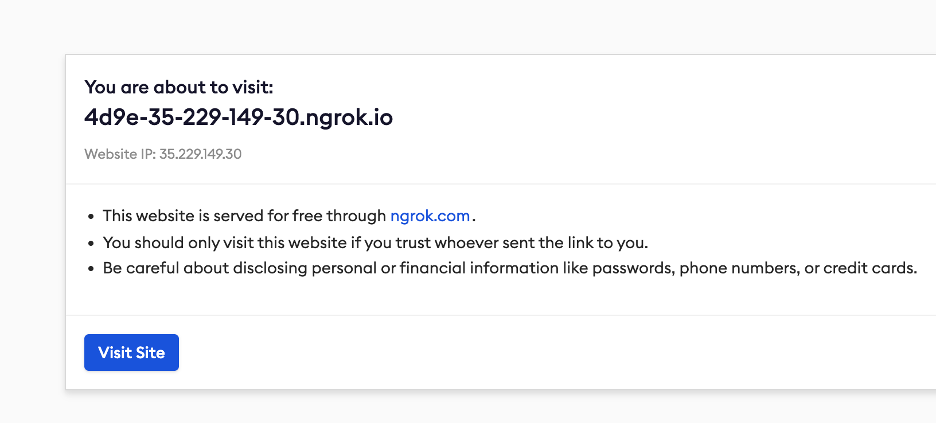

When you visit the ngrok link, it should show a message like below

Click on Visit Site to Start AUOTMATIC1111 GUI. Occasionally, you will see a warning message that the site is unsafe to visit. It is likely because someone used the same ngrok link to put up something malicious. Since you are the one who created this link, you can ignore the safety warning and proceed.

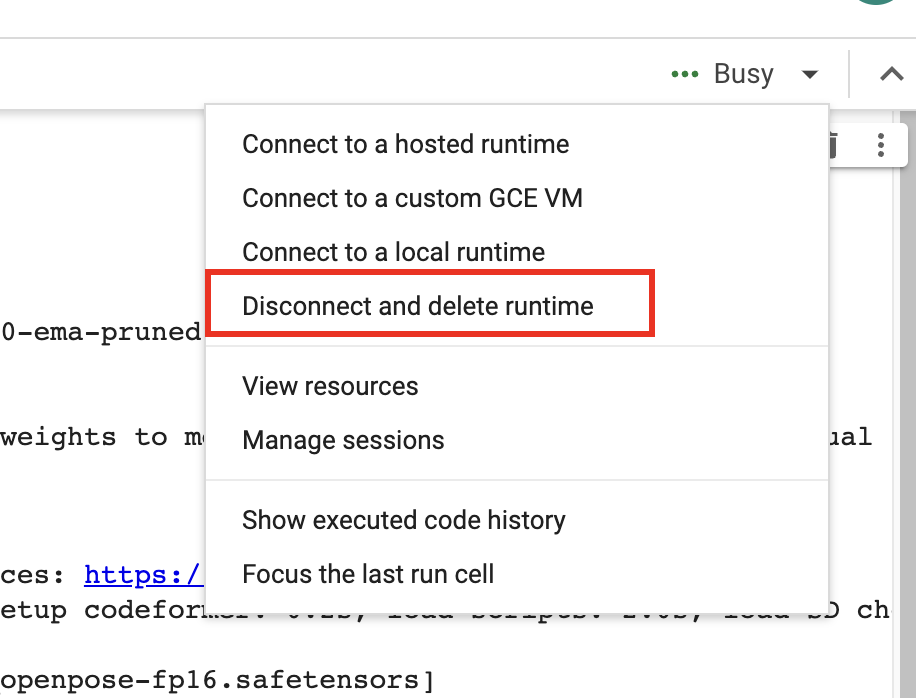

When you are done

When you finish using the notebook, don’t forget to click “Disconnect and delete runtime” in the top right drop-down menu. Otherwise, you will continue to consume compute credits.

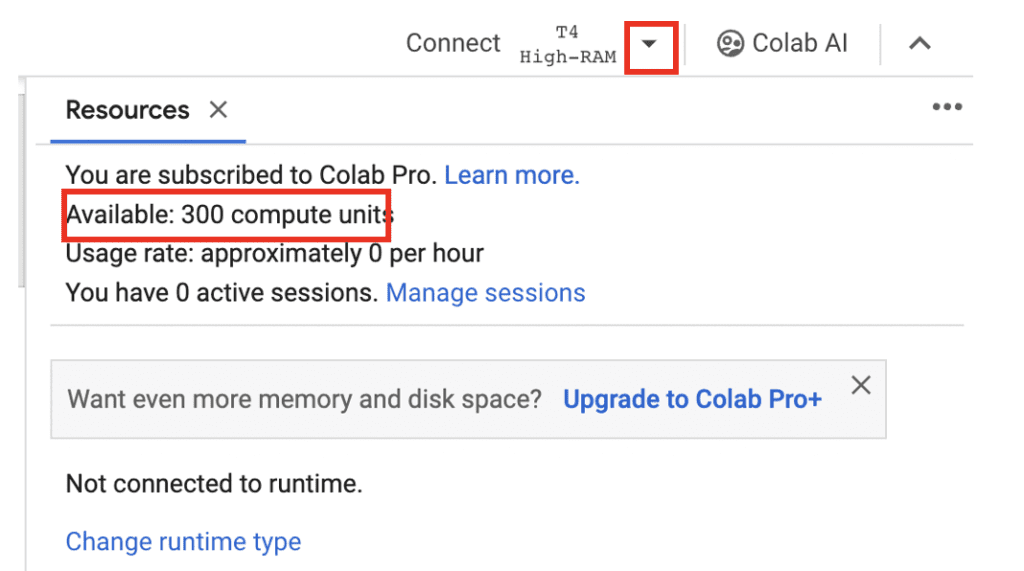

Computing resources and compute units

To view computing resources and credits, click the downward caret next to the runtime type (E.g. T4, High RAM) on the top right. You will see the remaining compute units and usage rate.

Models available

For your convenience, the notebook has options to load some popular models. You will find a brief description of them in this section.

v1.5 models

v1.5 model

v1.5 model is released after 1.4. It is the last v1 model. Images from this model is very similar to v1.4. You can treat the v1.5 model as the default v1 base model.

v1.5 inpainting model

The official v1.5 model trained for inpainting.

Realistic Vision

Realistic Vision v2 is good for generating anything realistic, whether they are people, objects, or scenes.

F222

F222 is good at generating photo-realistic images. It is good at generating females with correct anatomy.

Caution: F222 is prone to generating explicit images. Suppress explicit images with a prompt “dress” or a negative prompt “nude”.

Dreamshaper

Dreamshaper is easy to use and good at generating a popular photorealistic illustration style. It is an easy way to “cheat” and get good images without a good prompt!

Open Journey Model

Open Journey is a model fine-tuned with images generated by Mid Journey v4. It has a different aesthetic and is a good general-purpose model.

Triggering keyword: mdjrny-v4 style

Anything v3

Anything V3 is a special-purpose model trained to produce high-quality anime-style images. You can use danbooru tags (like 1girl, white hair) in the text prompt.

It’s useful for casting celebrities to amine style, which can then be blended seamlessly with illustrative elements.

Inkpunk Diffusion

Inkpunk Diffusion is a Dreambooth-trained model with a very distinct illustration style.

Use keyword: nvinkpunk

v2 models

v2 models are the newest base models released by Stability AI. It is generally harder to use and is not recommended for beginners.

v2.1 768 model

The v2.1-768 model is the latest high-resolution v2 model. The native resolution is 768×768 pixels. Make sure to set at least one side of the image to 768 pixels. It is imperative to use negative prompts in v2 models.

You will need Colab Pro to use this model because it needs a high RAM instance.

v2 depth model

v2 depth model extracts depth information from an input image and uses it to guide image generation. See the tutorial on depth-to-image.

SDXL model

This Coalb notebook supports SDXL 1.0 base and refiner models.

Select SDXL_1 to load the SDXL 1.0 model.

Important: Don’t use VAE from v1 models. Go to Settings > Stable Diffusion. Set SD VAE to AUTOMATIC or None.

Check out some SDXL prompts to get started.

Other models

Here are some models that you may be interested in.

See more realistic models here.

Dreamlike Photoreal

Dreamlike Photoreal Model Page

Model download URL

https://huggingface.co/dreamlike-art/dreamlike-photoreal-2.0/resolve/main/dreamlike-photoreal-2.0.safetensorsDreamlike Photoreal model is good at generating beautiful females with correct anatomy. It is similar to F222.

triggering keyword: photo

Caution: This model is prone to generating explicit photos. Suppress explicit images with a prompt “dress” or a negative prompt “nude”.

Lyriel

Lyriel excels in artistic style and is good at rendering a variety of subjects, ranging from portraits to objects.

Model download URL:

https://civitai.com/api/download/models/50127Deliberate v2

Deliberate v2 is a well-trained model capable of generating photorealistic illustrations, anime, and more.

Installing models

There are two ways to install models that are not on the model selection list.

- Use the

Checkpoint_models_from_URLandLora_models_from_URLfields. - Put model files in your Google Drive.

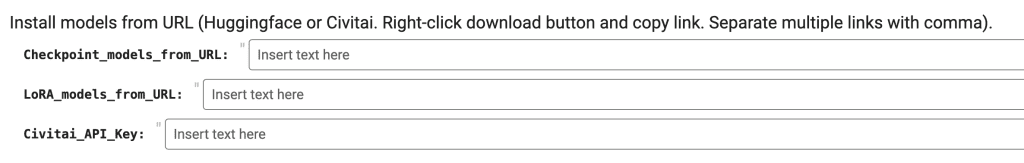

Install models using URLs

You can only install checkpoint or LoRA models using this method.

Put in the download URL links in the field. The link you initiate the file download when you visit it in your browser.

Checkpoint_models_from_URL: Use this field for checkpoint models.Lora_models_from_URL: Use this field for LoRA models.

Some models on CivitAi needs an API key to download. Go to the account page on CivitAI to create a key and put it in Civitai_API_Key.

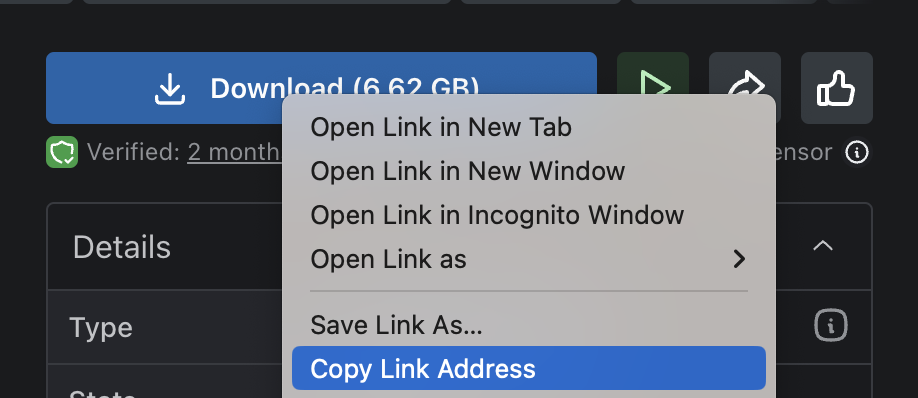

Below is an example of getting the download link on CivitAI.

Remove everything after the first question mark (?).

For example, change https://civitai.com/api/download/models/993999?type=Model&format=SafeTensor to https://civitai.com/api/download/models/993999.

Put it in the Model_from_URL field.

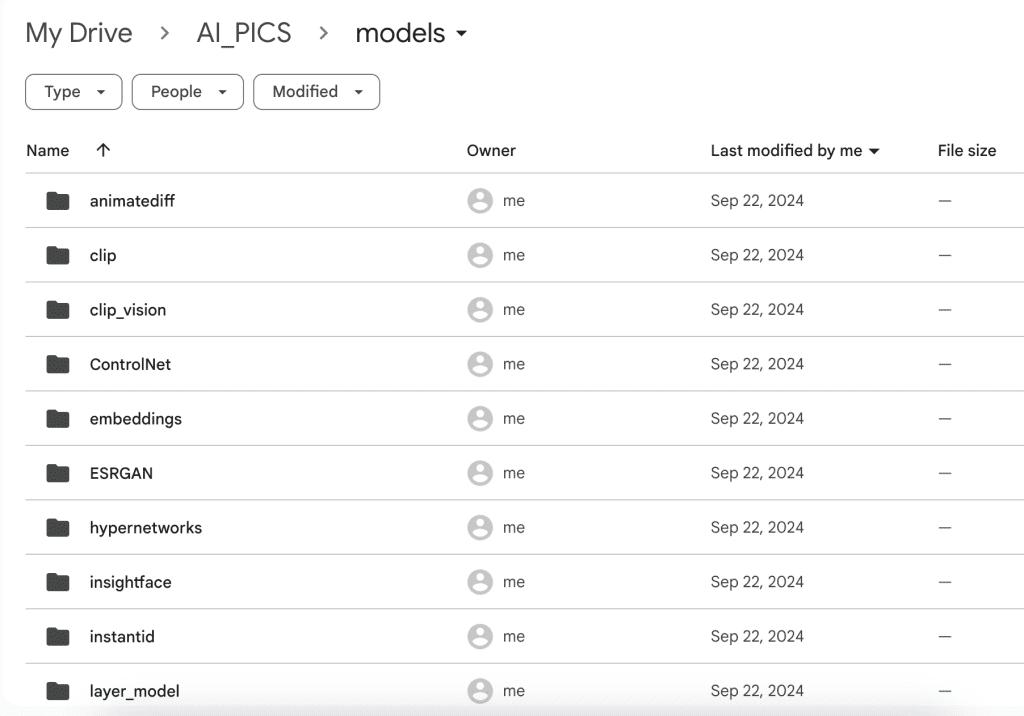

Installing models in Google Drive

After running the notebook for the first time, you should see the folder AI_PICS > models created in your Google Drive. The folder structure inside this folder mirror AUTOMATIC1111‘s and is designed to share models with other notebooks from this site.

Put your model files in the corresponding folder. For example,

- Put checkpoint model files in AI_PICS > models > Stable-diffusion.

- Put LoRA model files in AI_PICS > models > Lora.

You will need to restart the notebook to see the new models.

Installing extensions from URL

This field can be used to install any number of extensions. To do so, you will need the URL of the extension’s Github page.

For example, put in the following if you want to install the Civitai model extension.

https://github.com/civitai/sd_civitai_extensionYou can also install multiple extensions. The URLs need to be separated with commas. For example, the following URLs install the Civitai and the multi-diffusion extensions.

https://github.com/civitai/sd_civitai_extension,https://github.com/pkuliyi2015/multidiffusion-upscaler-for-automatic1111Extra arguments to webui

You can add extra arguments to the Web-UI by using the Extra_arguments field.

For example, if you use the lycoris extension, it is handy to use the extra webui argument --lyco-dir to specify a custom lycoris model directory in your Google Drive.

Other useful arguments are

--api. Allow API access. Useful for some applications, e.g. the PhotoShop Automatic1111 plugin.

Version

Now you can specify the version of Stable Diffusion WebUI you want to load. Use this at your own risk, as I only test the version saved.

Notes on some versions

v1.6.0: You need to add--disable-model-loading-ram-optimizationin the Extra_arguments field.

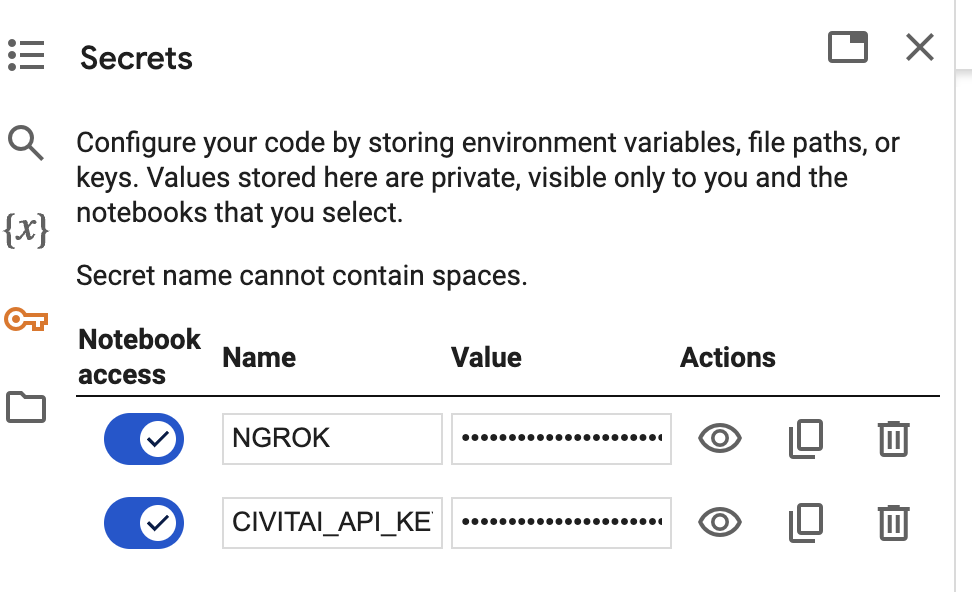

Secrets

This notebook supports storing API keys in addition to Secrets. If the keys were defined in secrets, the notebook would always use them. The notebook currently supports these two API keys (All upper cases):

NGROK: Ngrok API key.CIVITAI_API_KEY: API key for CivitAI.

You will need to enable Notebook access for each key like above.

Extensions

ControlNet

ControlNet is a Stable Diffusion extension that can copy the composition and pose of the input image and more. ControlNet has taken the Stable Diffusion community by storm because there is so much you can do with it. Here are some examples

This notebook supports ControlNet. See the tutorial article.

You can put your custom ControlNet models in AI_PICS/ControlNet folder.

Deforum – Making Videos using Stable Diffusion

You can make videos with text prompts using the Deforum extension. See this tutorial for a walkthrough.

Regional Prompter

Regional prompter lets you use different prompts for different regions of the image. It is a valuable extension for controlling the composition and placement of objects.

After Detailer

After Detailer (!adetailer) extension fixes faces and hands automatically when you generate images.

Openpose editor

Openpose editor is an extension that lets you edit the openpose control image. It is useful for manipulating the pose of an image generation with ControlNet. It is used with ControlNet.

AnimateDiff

AnimateDiff lets you create short videos from a text prompt. You can use any Stable Diffusion model and LoRA. Follow this tutorial to learn how to use it.

text2video

Text2video lets you create short videos from a text prompt using a model called Modelscope. Follow this tutorial to learn how to use it.

Infinite Image Browser

The Infinite Image Browser extension lets you manage your generations right in the A1111 interface. The secret key is SDA.

Frequently asked questions

Do I need a paid Colab account to use the notebook?

Yes, you need a Colab Pro or Pro+ account to use this notebook. Google has blocked the free usage of Stable Diffusion.

Is there any alternative to Google Colab?

Yes, Think Diffusion provides fully managed AUTOMATIC1111/Forge/ WebUI online as a web service. They offer 20% extra credit to our readers. (Affiliate link)

Do I need to use ngrok?

You don’t need to use ngrok to use the Colab notebook. In my experience, ngrok provides a more stable connection between your browser and the GUI. If you experience issues like buttons not responding, you should try ngrok.

What is the password for the Infinite Image Browser?

SDAWhy do I keep getting disconnected?

Two possible reasons:

- There’s a human verification shortly after starting each Colab notebook session. You will get disconnected if you do not respond to it. Make sure to switch back to the Colab notebook and check for verification.

- You are using a free account. Google has blocked A1111 in Colab. Get Colab Pro.

Can I use the dreambooth models I trained?

Yes, put the model file in the corresponding folder in Google Drive.

- Checkpoint models: AI_PICS > models > Stable-diffusion.

- LoRA models: AI_PICS > models > Lora.

How to enable API?

You can use AUMATIC1111 as an API server. Add the following to Extra Web-UI arguments.

--apiThe server’s URL is the same as the one you access the Web-UI. (i.e. the gradio or ngrok link)

Why do my SDXL images look garbled?

Check to make sure you are not using a VAE from v1 models. Check Settings > Stable Diffusion > SD VAE. Set it to None or Automatic.

Next Step

If you are new to Stable Diffusion, check out the Absolute beginner’s guide.

Thank you so much for this workbook.

It seems, however, that the problems with ReActor are still there. I’ve tried reinstalling everything from scratch, but to no avail.

Haven’t changed any settings, perhaps I am missing something?

Many thanks.

the original repo is not available anymore. updated to a new link.

Hello. There’s a problem in generating images. This error will appear:

NansException: A tensor with all NaNs was produced in Unet. This could be either because there’s not enough precision to represent the picture, or because your video card does not support half type. Try setting the “Upcast cross attention layer to float32” option in Settings > Stable Diffusion or using the –no-half commandline argument to fix this. Use –disable-nan-check commandline argument to disable this check.

It happens to some models and LoRAs. You can try adding those suggested arguments to the extra_arguments field in the notebook. Other than that, there’s not much you can do about it.

Hello! I’m having a problem with openpose editor. I can’t find it in the UI even after I checked it in the checkbox. How to fix this? Thank you

Hello Mr Andrew,

I’ve been using your A1111 Colab notebook for almost 1 year now and I’m very satisfied, so thank you for the good work! However, I’m writing now to report an issue that began occurring today (yesterday it all worked fine and I didn’t touch a thing): when I try to launch it, I get this error.

[…]

Apply lowram patch

/content/stable-diffusion-webui

WEBUI ARGUMENTS: –gradio-img2img-tool color-sketch –enable-insecure-extension-access –gradio-queue –ckpt-dir /content/drive/MyDrive/AI_PICS/models –share –gradio-auth “a”:”a” –disable-model-loading-ram-optimization –opt-sdp-attention

Python 3.10.12 (main, Nov 6 2024, 20:22:13) [GCC 11.4.0]

Version: v1.9.3

Commit hash: 1c0a0c4c26f78c32095ebc7f8af82f5c04fca8c0

Launching Web UI with arguments: –gradio-img2img-tool color-sketch –enable-insecure-extension-access –gradio-queue –ckpt-dir /content/drive/MyDrive/AI_PICS/models –share –gradio-auth a:a –disable-model-loading-ram-optimization –opt-sdp-attention

Traceback (most recent call last):

File “/content//stable-diffusion-webui/launch.py”, line 48, in

main()

File “/content//stable-diffusion-webui/launch.py”, line 44, in main

start()

File “/content/stable-diffusion-webui/modules/launch_utils.py”, line 465, in start

import webui

File “/content/stable-diffusion-webui/webui.py”, line 13, in

initialize.imports()

File “/content/stable-diffusion-webui/modules/initialize.py”, line 17, in imports

import pytorch_lightning # noqa: F401

File “/usr/local/lib/python3.10/dist-packages/pytorch_lightning/__init__.py”, line 35, in

from pytorch_lightning.callbacks import Callback # noqa: E402

File “/usr/local/lib/python3.10/dist-packages/pytorch_lightning/callbacks/__init__.py”, line 28, in

from pytorch_lightning.callbacks.pruning import ModelPruning

File “/usr/local/lib/python3.10/dist-packages/pytorch_lightning/callbacks/pruning.py”, line 31, in

from pytorch_lightning.core.module import LightningModule

File “/usr/local/lib/python3.10/dist-packages/pytorch_lightning/core/__init__.py”, line 16, in

from pytorch_lightning.core.module import LightningModule

File “/usr/local/lib/python3.10/dist-packages/pytorch_lightning/core/module.py”, line 47, in

from pytorch_lightning.loggers import Logger

File “/usr/local/lib/python3.10/dist-packages/pytorch_lightning/loggers/__init__.py”, line 22, in

from pytorch_lightning.loggers.wandb import WandbLogger # noqa: F401

File “/usr/local/lib/python3.10/dist-packages/pytorch_lightning/loggers/wandb.py”, line 36, in

import wandb

File “/usr/local/lib/python3.10/dist-packages/wandb/__init__.py”, line 21, in

from wandb import sdk as wandb_sdk

File “/usr/local/lib/python3.10/dist-packages/wandb/sdk/__init__.py”, line 28, in

from .wandb_init import _attach, init

File “/usr/local/lib/python3.10/dist-packages/wandb/sdk/wandb_init.py”, line 39, in

from . import wandb_login, wandb_setup

File “/usr/local/lib/python3.10/dist-packages/wandb/sdk/wandb_login.py”, line 19, in

from .wandb_settings import Settings

File “/usr/local/lib/python3.10/dist-packages/wandb/sdk/wandb_settings.py”, line 25, in

from pydantic import (

ImportError: cannot import name ‘computed_field’ from ‘pydantic’ (/usr/local/lib/python3.10/dist-packages/pydantic/__init__.cpython-310-x86_64-linux-gnu.so)

Would you please look into it?

I’ve tried googling for a solution but I’m not very well-versed in this sort of things.

Thank you

The error is fixed. I am glad that the notebook serves you well.

Thank you for your fast reply… But I still get the same issue. Just to make sure, I tried opening the version at “Latest version of this notebook can be found at here” and leaving all checkboxes at their default value. But the error message is still the same…

I got the same error again, too. The link in the old notebook still points at itself.

Apparently a completely new file, albeit one that looks a lot like the old one has been installed. You’re looking for AUTOMATIC1111_SDA.ipynb

I had to start from the top of the Stable Diffusion Art website. If you’re navigating by title name, you’re looking for this page:

How to run Stable Diffusion on Google Colab (AUTOMATIC1111)

(this its URL)

https://stable-diffusion-art.com/automatic1111-colab/

(scroll down)

(Click on the Green button labeled: A1111 Colab Notebook)

This notebook appears, its name implies its in a different source tree:

AUTOMATIC1111_SDA.ipynb

Sigh.

Thank you!

There’s a new notebook. Follow the link in this article or the quick start guide.

I think Lora’s are broken in the latest notebook. I see zero Lora’s under the tab, and they were all compatible with the model I’m using before the new notebook was installed. Can you check Andrew, and perhaps suggest what I’m doing wrong?

I just checked and can see lora there. Try follow the original link to access the notebook unmodified. Put LoRAs in google drive AI_PICS > models > Lora. Press refresh button.

Don’t know what happened. I found the Lora files in AI_PICS>Lora. I have since moved them to AI_PICS > models >Lora. Thanks.

LoRA_models_from_URL field isn’t present in the notebook either

Putting LORAs on the AI_PICS > models > Lora doesn’t make them show up, even after reload 🤔

Hi, I just tested the notebook and the loading LoRA in Google Drive is working correctly.

1. Try press the Refresh button on the LoRA tab.

2. A1111 only shows LoRAs that are compatible with the checkpoint model. E.g. Select an XL checkpoint -> Refresh the LoRA tab to show XL LoRAs.

The Save_In_Google_Drive option is gone in the latest version of the notebook.

It is removed. You can access the old version which I no longer maintain. https://stable-diffusion-art.com/legacy-A1111-notebook

Would you ever consider doing a notebook as UI-friendly as yours but with ComfyUI? People are migrating towards it and still am unable to find a Colab notebook as clear as yours.

I managed to run ‘Comfy’ UI with a Colab notebook. The problem is that this is the most ironic name for anything ever. Comfy like a maze made of burning chainsaws.

I have one but its not as well written as this one. I will think about it given that A1111 is not catching up with the latest tech.

+1 for the request for a Comfy notebook. I’m willing to pay extra for a ComfyUI notebook from yours, Andrew.

@Bjørn, what notebook do you use?

OK I will think about it 🙂

Hi Andrew,

Thank you for the Colab. I’m grateful to be using it.

Recently, I’ve encountered a couple of issues while using this Colab:

1. When I use NGROK, I get an error stating that my request has been refused. Are we still able to use NGROK?

2. When I use Gradio, the page displays an error saying, “This site can’t be reached.” I’m wondering if there’s an issue with Gradio.

Andrew, do you have any idea what might be causing these issues? Thank you for your help.

Hi, I just ran with Gradio and it is working correctly. Perhaps it is a temp issue. The need for ngrok is a lot less nowadays. I recommend using it only when gradio is not available.

Save_In_Google_Drive Everything mode has stopped working for me with the A100. I’ve been able to use it on a regular basis up until around 4 days ago. I’m not sure what changed in that time, but I’ve tried every day since then with no luck, both with gradio and ngrok. T4 still works but I find it much too slow for SDXL, which is why i subscribe for colab Pro+. There are never any error messages or warnings in either the UI or the colab log. The UI boots up and I can access it just fine, I can change & save settings but am unable to actually generate any images or even view/refresh my LoRAs. I click the generate button and the buttons change to the typical Interrupt|Skip buttons, but nothing happens and it just acts like it’s stuck before it even says 0% progress. There is no additional output in the colab log when I do this either, the most recent lines on there are just the startup messages about applying attention optimization, embedding loading, and model loading in however many seconds.

I get the same sort of issue when i try to view or refresh my LoRAs before even trying to generate an image, it acts like it’s starting to refresh but then just gets stuck in some sort of infinite loading/processing.

Do you have any advice?

The save everything setting is problematic due to inherent working of colab. You can try starting a new AI_PICS folder (e.g. AI_PICS2) to see if the problem can be resolved. Otherwise, use the default save setting.

If I use the default save setting, will I have to re-install/re-download my checkpoints, embeddings, and loras every time I start up?

You may need to move the model files to different folders in G drive. See this post for folder locations. You can switch to default to see if you still see the models and whether it resolves the issue.

Share link not created.

V1.9.0. Selected AnimateDiff and ControlNet. It seems this is is since 27/Apr as comments below.

>>>>>>

Running on local URL: http://127.0.0.1:7860

Interrupted with signal 2 in

Could not create share link. Please check your internet connection or our status page: https://status.gradio.app.

Startup time: 267.1s (prepare environment: 52.7s, import torch: 4.6s, import gradio: 1.0s, setup paths: 3.6s, initialize shared: 0.3s, other imports: 0.7s, list SD models: 0.5s, load scripts: 19.3s, create ui: 18.9s, gradio launch: 165.5s).

Looks like it was a temp issue.

Hello, Andrew. First of all, I would like to say thanks for your Colab work! Been actively using them without much issues~

Just a heads up, today, gradio has an issue where the xxxxxx.gradio.live link would not appear, only the local URL which is non-functional as expected.

Apply lowram patch

/content/stable-diffusion-webui

WEBUI ARGUMENTS: –gradio-img2img-tool color-sketch –enable-insecure-extension-access –gradio-queue –share –gradio-auth “a:a” –disable-model-loading-ram-optimization –opt-sdp-attention –medvram-sdxl

Python 3.10.12 (main, Nov 20 2023, 15:14:05) [GCC 11.4.0]

Version: v1.9.0

Commit hash: adadb4e3c7382bf3e4f7519126cd6c70f4f8557b

Launching Web UI with arguments: –gradio-img2img-tool color-sketch –enable-insecure-extension-access –gradio-queue –share –gradio-auth a:a –disable-model-loading-ram-optimization –opt-sdp-attention –medvram-sdxl

2024-04-27 15:26:50.920974: E external/local_xla/xla/stream_executor/cuda/cuda_dnn.cc:9261] Unable to register cuDNN factory: Attempting to register factory for plugin cuDNN when one has already been registered

2024-04-27 15:26:50.921028: E external/local_xla/xla/stream_executor/cuda/cuda_fft.cc:607] Unable to register cuFFT factory: Attempting to register factory for plugin cuFFT when one has already been registered

2024-04-27 15:26:50.922414: E external/local_xla/xla/stream_executor/cuda/cuda_blas.cc:1515] Unable to register cuBLAS factory: Attempting to register factory for plugin cuBLAS when one has already been registered

2024-04-27 15:26:52.248896: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Could not find TensorRT

no module ‘xformers’. Processing without…

no module ‘xformers’. Processing without…

No module ‘xformers’. Proceeding without it.

[-] ADetailer initialized. version: 24.4.2, num models: 10

Checkpoint sweetMix_v22Flat.safetensors [83326ee94a] not found; loading fallback aurora_v10.safetensors [1b5f8211ec]

Loading weights [1b5f8211ec] from /content/stable-diffusion-webui/models/Stable-diffusion/aurora_v10.safetensors

Running on local URL: http://127.0.0.1:7860

Creating model from config: /content/stable-diffusion-webui/configs/v1-inference.yaml

Loading VAE weights specified in settings: /content/stable-diffusion-webui/models/VAE/blessed2.vae.pt

Applying attention optimization: sdp… done.

Model loaded in 7.1s (load weights from disk: 0.8s, create model: 2.4s, apply weights to model: 2.6s, load VAE: 0.5s, load textual inversion embeddings: 0.5s, calculate empty prompt: 0.2s).

ngrok works fine for now.

I just realised this after letting the colab run for a while:

Model loaded in 52.2s (calculate hash: 31.3s, load weights from disk: 0.4s, create model: 3.5s, apply weights to model: 2.6s, load VAE: 7.5s, load textual inversion embeddings: 6.3s, calculate empty prompt: 0.5s).

Interrupted with signal 2 in

Could not create share link. Missing file: /usr/local/lib/python3.10/dist-packages/gradio/frpc_linux_amd64_v0.2.

Please check your internet connection. This can happen if your antivirus software blocks the download of this file. You can install manually by following these steps:

1. Download this file: https://cdn-media.huggingface.co/frpc-gradio-0.2/frpc_linux_amd64

2. Rename the downloaded file to: frpc_linux_amd64_v0.2

3. Move the file to this location: /usr/local/lib/python3.10/dist-packages/gradio

Startup time: 217.8s (prepare environment: 2.1s, import torch: 4.6s, import gradio: 1.0s, setup paths: 3.4s, initialize shared: 1.0s, other imports: 1.1s, list SD models: 4.2s, load scripts: 22.6s, create ui: 1.2s, gradio launch: 176.4s).

Maybe that’s the reason why gradio links are not appearing anymore?

Hi, gradio is working now. Its likely a temporary issue.

Hello i have error “HTTP Requests exceeded” in ngrok…

And from localhost SD not runnig “ERR_CONNECTION_REFUSED” when i try connect from colab.

Can you give advice with it?

Interesting… ngrok is normally not needed nowadays. You can try without.

Yes… but if i set empty in NGROK. I can not connect to SD colab…

The gradio link shows up now. It was a temp issue.

Hey,

The animatediff is not working. I’ve got this error:

*** Error calling: /content/stable-diffusion-webui/extensions/sd-webui-animatediff/scripts/animatediff.py/ui

Traceback (most recent call last):

File “/content/stable-diffusion-webui/modules/scripts.py”, line 547, in wrap_call

return func(*args, **kwargs)

File “/content/stable-diffusion-webui/extensions/sd-webui-animatediff/scripts/animatediff.py”, line 43, in ui

from scripts.animatediff_mm import mm_animatediff as motion_module

ModuleNotFoundError: No module named ‘scripts.animatediff_mm’

—

*** Error calling: /content/stable-diffusion-webui/extensions/sd-webui-animatediff/scripts/animatediff.py/ui

Traceback (most recent call last):

File “/content/stable-diffusion-webui/modules/scripts.py”, line 547, in wrap_call

return func(*args, **kwargs)

File “/content/stable-diffusion-webui/extensions/sd-webui-animatediff/scripts/animatediff.py”, line 43, in ui

from scripts.animatediff_mm import mm_animatediff as motion_module

ModuleNotFoundError: No module named ‘scripts.animatediff_mm’

It should be working now. updated A1111 to v1.9.0

Still not working

Traceback (most recent call last):

File “/usr/local/lib/python3.10/dist-packages/gradio/routes.py”, line 488, in run_predict

output = await app.get_blocks().process_api(

File “/usr/local/lib/python3.10/dist-packages/gradio/blocks.py”, line 1431, in process_api

result = await self.call_function(

File “/usr/local/lib/python3.10/dist-packages/gradio/blocks.py”, line 1103, in call_function

prediction = await anyio.to_thread.run_sync(

File “/usr/local/lib/python3.10/dist-packages/anyio/to_thread.py”, line 33, in run_sync

return await get_asynclib().run_sync_in_worker_thread(

File “/usr/local/lib/python3.10/dist-packages/anyio/_backends/_asyncio.py”, line 877, in run_sync_in_worker_thread

return await future

File “/usr/local/lib/python3.10/dist-packages/anyio/_backends/_asyncio.py”, line 807, in run

result = context.run(func, *args)

File “/usr/local/lib/python3.10/dist-packages/gradio/utils.py”, line 707, in wrapper

response = f(*args, **kwargs)

File “/content/stable-diffusion-webui/modules/call_queue.py”, line 95, in f

mem_stats = {k: -(v//-(1024*1024)) for k, v in shared.mem_mon.stop().items()}

File “/content/stable-diffusion-webui/modules/memmon.py”, line 92, in stop

return self.read()

File “/content/stable-diffusion-webui/modules/memmon.py”, line 77, in read

free, total = self.cuda_mem_get_info()

File “/content/stable-diffusion-webui/modules/memmon.py”, line 34, in cuda_mem_get_info

return torch.cuda.mem_get_info(index)

File “/usr/local/lib/python3.10/dist-packages/torch/cuda/memory.py”, line 655, in mem_get_info

return torch.cuda.cudart().cudaMemGetInfo(device)

RuntimeError: CUDA error: device-side assert triggered

CUDA kernel errors might be asynchronously reported at some other API call, so the stacktrace below might be incorrect.

For debugging consider passing CUDA_LAUNCH_BLOCKING=1.

Compile with `TORCH_USE_CUDA_DSA` to enable device-side assertions.

Hi! I can’t seem to load controlnet and openpose editor even tho I clicked the checkbox on the extensions

Hi, I just ran the notebook as is but adding controlnet and openpose editor. controlnet works but openpose editor didn’t show up. It seems to be the issue with the extension. You can report the issue in their repo.

I am getting an error when I run any prompts, “RuntimeError: Given groups=1, weight of size [320, 5, 3, 3], expected input[2, 9, 64, 64] to have 5 channels, but got 9 channels instead

Time taken: 1.4 sec.”

I used a different checkpoint, and that fixed that issue, but another issue is that the models that I put into the AI_PICS>Models folder are not loading in the GUI, I must be doing something wrong. I am trying to add these two

animagine-xl-2.0.safetensors

AnythingV5V3_v5PrtRE.safetensors

maybe I need to disconnect and reconnect, now I see them in the drop down. I am all set.

👍

Hi Andrew! Not sure if this is the spot to ask, but I’ve been using your Notebook from the Quick Start Guide in google colab for a while and came back to try it again this week, but I’m getting odd out of memory errors with PyTorch gobling up a lot of GPU ram. Its making it necessary to run in low ram mode, or limit batches to just 2 512×768 pictures (or both), though I’ve supposedly got 15GB of GPU ram with my Colab Pro membership. Did something change, and is there any way I can correct or work around this?

I can’t post a screenshot here, but the error message that appears is:

‘OutOfMemoryError: CUDA out of memory. Tried to allocate 4.50 GiB. GPU 0 has a total capacity of 14.75 GiB of which 3.25 GiB is free. Process 106112 has 11.50 GiB memory in use. Of the allocated memory 6.92 GiB is allocated by PyTorch, and 4.42 GiB is reserved by PyTorch but unallocated. If reserved but unallocated memory is large try setting PYTORCH_CUDA_ALLOC_CONF=expandable_segments:True to avoid fragmentation. See documentation for Memory Management (https://pytorch.org/docs/stable/notes/cuda.html#environment-variables)’

Hi! I updated the notebook today (3/21). Give it a try.

Its working great now, thanks so much!

Hi, thanks so much for all your work!

I love reading your articles and learning so much!

I currently have a problem with that Colab notebook. So I wanted to ask if anything is known:

I am using Colab pro. T4 and sometimes also V100.

With both GPUs I have the problem that the system-ram fills up and then I lose the connection or in the notebook it simply terminates the program with “^C”

The system-ram is getting fuller and fuller per generated image (approx. 5gb or so per image) and this does not decrease again. It runs full and when it reaches about 45, 48 of 50, it aborts….

Is there a known problem or am I doing something wrong? 😀

Hi! I updated the notebook today (3/21). Give it a try.

Checking back…I”m using the 4/18 version of the notebook and having this problem. Is there anything that I need to do to resolve?

This is an issue with A1111. Not much I can do.

No module named ‘torchvision.transforms.functional_tensor’

Is this a common error? I’ve tried 4 times now and paid for Colab.

A similar problem

I updated to v1.8.0 and it seems to be working fine. Post the whole error message and how to reproduce.

Still doesn’t work. The log with the error is very long, it doesn’t fit here, I posted it at the link: https://badgena.notion.site/SD-Colab-Error-991ec7728bea4416930ea2a26dae5c12?pvs=4

I see you save everything in your google drive. This is known to cause issue from time to time.

Either use the recommended saving option, or delete/rename the folder in your google drive and try again.

it doesn’t work on google colab😤 it wants you to give all the acsess to your google account, but even if you give it, Step 7 never happens

not sure what to do with google account now… change all passwords urgently?…

The google account access is only for saving the images and settings in your google drive. (If you are a programmer, you can read the codes in the notebook to confirm)

Post the error message if you want to try again.

Hello? How can I add civitai link models instead of huggingface?

They require login to download model so a link on notebook won’t work.

You need to use civitai helper. See the extension guide – https://stable-diffusion-art.com/automatic1111-extensions/

Hi, openpose editor doesn’t load even though I included it in the extensions choices

It used to work fine for me, but this week I’m having trouble connecting. Wondering why?

The interface can be launched just fine. However, after like few mins, the error started to appear.

“Error

Connection errored out.”

Also, the local url never work for me. It says check proxy and firewall.

I didn’t use any proxy and Chrome is in the whitelist.

hi, I’m also getting this runtime error and failed to launch. Could you help me to fix it?

Python 3.10.12 (main, Nov 20 2023, 15:14:05) [GCC 11.4.0]

Version: v1.7.0

Commit hash: cf2772fab0af5573da775e7437e6acdca424f26e

Traceback (most recent call last):

File “/content/drive/MyDrive/AI_PICS/stable-diffusion-webui/launch.py”, line 48, in

main()

File “/content/drive/MyDrive/AI_PICS/stable-diffusion-webui/launch.py”, line 39, in main

prepare_environment()

File “/content/drive/MyDrive/AI_PICS/stable-diffusion-webui/modules/launch_utils.py”, line 384, in prepare_environment

raise RuntimeError(

RuntimeError: Torch is not able to use GPU; add –skip-torch-cuda-test to COMMANDLINE_ARGS variable to disable this check

I’ve used it for a while and its working.

Local connection: It’s not supposed to work. It’s local to the colab environment that you don’t have access to. That’s why we need to open the public connection.

Runtime Error: It seems that you have started the notebook without GPU. Are you using a paid colab account? You have to. Otherwise you will be disconnected or not allocated with GPU sometimes.

Hi, thx for your quick reply.

I’m on a “pay as you go”plan and running with T4 GPU.

Sometimes when I start up, I run into a runtime error, or after the interface launches fine, I hit a snag with a ‘connection errorred out’. Mostly the latter.

Your setup should run run fine. You can paste the whole error message next time when it happens.

Hi. I’m brand new to this. Where do I download a copy of AUTOMATIC1111 so I can upload it to my Google Drive and then open it in aCoLab notebook?

You simply run the notebook and it will be downloaded automatically to colab. It will not be saved to your google drive due to the size. you can save and reuse models in your google drive.

Where do I get the notebook? How do I run it?

I’ve set up a Google CoLab Pro account and I’m trying to follow the tutorial “How to run Stable Diffusion on Google Colab (AUTOMATIC1111)”, but when I get to the section “Step-by-step instructions to run the Colab notebook” it tells me to open the Colab notebook in Quick Start Guide. But when I click on that link it takes me to this page:

https://andrewongai.gumroad.com/l/stable_diffusion_quick_start

where there is no Colab Notebook…

Hi, you need to download the quick start guide first. It’s free. The link is in the guide.

Hey there—I’m running A1111 through Google Colab, looks like I’m getting this error when I try to run it:

OSError: Can’t load tokenizer for ‘openai/clip-vit-large-patch14’. If you were trying to load it from ‘https://huggingface.co/models’, make sure you don’t have a local directory with the same name. Otherwise, make sure ‘openai/clip-vit-large-patch14’ is the correct path to a directory containing all relevant files for a CLIPTokenizer tokenizer.

Any ideas?

Never mind—I see huggingface is under maintenance so I’m sure that’s why.

I’ve started getting the following error:

ImportError: cannot import name ‘set_documentation_group’ from ‘gradio_client.documentation’ (/usr/local/lib/python3.10/dist-packages/gradio_client/documentation.py)

Hi, I tested the recommended setting and didn’t see this error.

Hi. How many images (approximately) you can generate using Colab-Pro Subscription (SD1.5 and SDXL models) during a month?

Not use if pricing is the same in all countries. But in the US, you get about 50 hours for $10. You should get 4 SD1.5 images within a minute.

Hi Andrew

Recently, AnimateDiff has “CUDA out of memory” error.

I use T4 with High RAM and enabled ControlNet, DeForum and AnimateDiff. When, I run AnimateDiff to create video with Prompt Travle, the error as below occurs. I tested with A100 (40G VRAM), which successfully generates video. I wonder if 15G VRAM is not enough to run AnimateDiff on Colab.

Andrew, do you have any idea why the error occurs?

OK, I will take a look!

Hi Andrew again

I wonder if had a chance to check if AnimateDiff causes CUDA out of memory error if T4 is used? I wonder if this error occurs only on my part or it is a common error.

Sorry for the delay. I did a quick test with an SD1.5 model and did not see the error. The GPU memory is merely at 6.1GB. Please detail the steps to reproduce.

Hi Andrew,

I’m using your notebook StableDiffusionUI_ngrok_sagiodev.ipynb for 2 months already but got stucked at: “Installing Deforum requirement: pims” step today.

I saw there were an update yesterday. Could this cause my problem?

Norbert

Hi, I checked deforum and it worked correctly.

It is updated to using v1.7 by default. you can revert it to v1.6 by changing the version field.

been having issues lately (forgive me if silly questions I don’t fully understand all this)

I had previously been making models no problems but lately whenever I create a new model, no matter what I type the images it produces are almost identical to the training images, completely ignoring al my prompts. Some people have been saying it’s over training but I have been using the same method for all my previous models and have had no trouble (100 training steps for each image). Not sure if it has anything to do with it but ever since the models have been saving as ‘safetensors’ instead of ‘ckpt’ I’ve been having this issue. I’ve tried lowering the steps but then I don’t seem to get the same quality I was previously getting. I was previously doing around 100 training images with 10000 steps and getting great results. Someone suggested lowering the learning rate to 3e-6 but when I do that I get an error for running out of disc space right when it’s about to finish. Appreciate what you do and again I apologize if I sound dumb lol

I’m trying to add the adetailer model face_yolov8n_v2. Am I able to do that in the models folder of the collab?

a custom model in adetailer is not supported. you will need to upload manually through the file explorer on the left.

Hey Andrew, just installed the notebook.

I am currently trying to create a video with deforum but for some reason

1. the revAnimated V1-2-2-2 model doesn’t load. It appears in the dropdown menu in the upper left corner but everytime I select it, it changes back to another preinstalled model immediately.

2. Also when I hit generate in Deforum I get this error message:

*START OF TRACEBACK*

Traceback (most recent call last):

File “/content/stable-diffusion-webui/extensions/deforum/scripts/deforum_helpers/run_deforum.py”, line 116, in run_deforum

render_animation(args, anim_args, video_args, parseq_args, loop_args, controlnet_args, root)

[…]

RuntimeError: Input type (torch.cuda.HalfTensor) and weight type (torch.HalfTensor) should be the same

*END OF TRACEBACK*

User friendly error message:

Error: Input type (torch.cuda.HalfTensor) and weight type (torch.HalfTensor) should be the same. Please, check your schedules/ init values.

Please help and merry christmas from an Andrew to Andrew 😀

Merry Xmas!

For 1: what’s the error message when loading the model? You can try re-downloading the model.

For 2: I used the preloaded dreamshaper model on the colab notebook running on T4. Running deforum with default settings. The video generation was successful.

Merry Christmas man!

For both problems I figured out a solution. For some reason the download didn’t quite work when I just pasted the link into the notebook before startup.

I now just downloaded the Lora and Checkpoint that I needed and added it to my google drive.

Thanks for this amazing website and notebook.

Have a great time with your family and enjoy the upcoming days! 🎅

Hi Everybody – Unable to get any of my ckpts to mount currently. Anybody else having issues?

changing setting sd_model_checkpoint to charrr.ckpt: AttributeError

Traceback (most recent call last):

File “/content/stable-diffusion-webui/modules/options.py”, line 140, in set

option.onchange()

File “/content/stable-diffusion-webui/modules/call_queue.py”, line 13, in f

res = func(*args, **kwargs)

File “/content/stable-diffusion-webui/modules/initialize_util.py”, line 170, in

shared.opts.onchange(“sd_model_checkpoint”, wrap_queued_call(lambda: sd_models.reload_model_weights()), call=False)

File “/content/stable-diffusion-webui/modules/sd_models.py”, line 741, in reload_model_weights

state_dict = get_checkpoint_state_dict(checkpoint_info, timer)

File “/content/stable-diffusion-webui/modules/sd_models.py”, line 315, in get_checkpoint_state_dict

res = read_state_dict(checkpoint_info.filename)

File “/content/stable-diffusion-webui/modules/sd_models.py”, line 301, in read_state_dict

sd = get_state_dict_from_checkpoint(pl_sd)

File “/content/stable-diffusion-webui/modules/sd_models.py”, line 245, in get_state_dict_from_checkpoint

pl_sd = pl_sd.pop(“state_dict”, pl_sd)

AttributeError: ‘NoneType’ object has no attribute ‘pop’

Yess, I got the same error. try to download the models manually and add them to your drive. this way it should work.

Hey Andrew!

Thanks for the Colab notebook. I was wondering where does it exactly save the models and the files on my drive? I have already downloaded models and want to just add them to the folder where the models are.

In the AI_PICS folder.

Found it! I also added the SDXL Turbo but somehow it doesn’t load it! it keeps switching back to other models you added there by default. Am i missing something?

The SDXL turbo is no different from other models. See the error message on Colab if you cannot load it. You can try re-downloading the model. Sometimes its just corrupted.

Soooo after doing all the above and having successfully launched it once and saved it to gdrive, after you close the runtime, how do you open it up again next time? lol

Probably a newbie question, but the guide states “The LoRA model will be saved to your Google Drive under AI_PICS > Lora if Use_Google_Drive is selected” and “start the notebook with the Use_Google_Drive option”

How does one select “Use_Google_Drive”? I can’t find that option anywhere.

Hi, you only need to use the recommended setting for saving in google drive. I will update the text.

Downloaded and tried to install Auto1111 colab purchased from here…

And got this for the last hour or so…

This share link expires in 72 hours. For free permanent hosting and GPU upgrades, run `gradio deploy` from Terminal to deploy to Spaces (https://huggingface.co/spaces)

Startup time: 13.4s (prepare environment: 1.8s, import torch: 6.1s, import gradio: 0.6s, setup paths: 0.5s, initialize shared: 0.2s, other imports: 0.7s, load scripts: 0.7s, create ui: 0.4s, gradio launch: 2.2s).

Creating model from config: /content/stable-diffusion-webui/configs/v1-inference.yaml

Applying attention optimization: sdp-no-mem… done.

Model loaded in 6.0s (load weights from disk: 2.9s, create model: 1.8s, apply weights to model: 1.0s, calculate empty prompt: 0.2s).

no other messsages…

do you see the gradio.live link above? If not, likely be a temporary problem with gradio.

ngork’s link is usually more stable. you can try.

Tried the Gradio.live link>.. Reported “no interface…”

anyway the line out feed suggested that nothing was running on that port anyway.

that’s strange. I just tried and it is working. Make sure you use the link when the notebook is running.

I receive this error message: nansException: A tensor with all NaNs was produced in VAE. This could be because there’s not enough precision to represent the picture. Try adding –no-half-vae commandline argument to fix this. Use –disable-nan-check commandline argument to disable this check.

Try adding those arguments to “Extra Web-UI arguments” in the notebook.

You mean like this? “–disable-model-loading-ram-optimization –opt-sdp-no-mem-attention –no-half-vae –disable-nan-check” as the first two were there already,

Anyway I get this message: launch.py: error: unrecognized arguments: –no-half-vae –disable-nan-check

Solved it, didn’t have compute units in colab, but why does the animation look very different with animatediff, compared to when I generate a single image only?

because the image condition is conditioned by animatediff’s motion model, in addition to the text prompt.

hi. i was able to run this a few months ago w a free colab account and now it’s saying the code isn’t allowed. what’s the deal?

yeah, they banned free SD.

Hey!

Today notebook stopped starting with this error.

I have Colab Pro

“You cannot currently connect to a GPU due to usage limits in Colab. Learn more”

Colab Pro+ works. I think it’s Google limiting access, hopefully only at peak times, because I’ve been just about to downgrade to Pro – I don’t use 500 points a month… Maybe try again? (I’d be rather displeased if it becomes Pro+ only)

I tested with colab pro and it is working correct. perhaps it is a temp problem.

I’m trying very hard to train Dreambooth on Google Colab using your notebook. However It seems your notebook is not installing xformers properly. When I launch dreambooth, I give the message “No module ‘xformers’. Proceeding without it”.

In AUTOMATIC1111, I can create a new model in the Dreambooth extension. But when I try to train it, after about 15 of running it would tell me xformers is not installed.

I read some of the comments about using sdp? I followed your instructions to change my settings and deleted the iconfig file, however Dreambooth is still giving me the error that xformers is not installed.

How do I properly install xformers with your notebook, so I am able to use Dreambooth to train a model?

I found a solution. Before running the contents of the notebook, I had to first run the following line in the notebook.

pip3 install -U xformers –index-url https://download.pytorch.org/whl/cu118

Then I added –xformers to the “Extra_arguments”

Hello Andrew~

I’m currently having problem in trying to execute the colab notebook, below is the log I have obtained:

Apply lowram patch

/content/stable-diffusion-webui

WEBUI ARGUMENTS: –gradio-img2img-tool color-sketch –enable-insecure-extension-access –gradio-queue –ngrok 2XZW8TEOBnMlRVh6KlNr98YVZpD_88RqBGJBd7cLfaE2PScbd –gradio-auth lofited:lofitedcal –disable-model-loading-ram-optimization –opt-sdp-no-mem-attention

Python 3.10.12 (main, Jun 11 2023, 05:26:28) [GCC 11.4.0]

Version: v1.6.0

Commit hash: 5ef669de080814067961f28357256e8fe27544f4

Launching Web UI with arguments: –gradio-img2img-tool color-sketch –enable-insecure-extension-access –gradio-queue –ngrok 2XZW8TEOBnMlRVh6KlNr98YVZpD_88RqBGJBd7cLfaE2PScbd –gradio-auth lofited:lofitedcal –disable-model-loading-ram-optimization –opt-sdp-no-mem-attention

2023-11-03 15:09:27.332699: E tensorflow/compiler/xla/stream_executor/cuda/cuda_dnn.cc:9342] Unable to register cuDNN factory: Attempting to register factory for plugin cuDNN when one has already been registered

2023-11-03 15:09:27.332752: E tensorflow/compiler/xla/stream_executor/cuda/cuda_fft.cc:609] Unable to register cuFFT factory: Attempting to register factory for plugin cuFFT when one has already been registered

2023-11-03 15:09:27.332777: E tensorflow/compiler/xla/stream_executor/cuda/cuda_blas.cc:1518] Unable to register cuBLAS factory: Attempting to register factory for plugin cuBLAS when one has already been registered

2023-11-03 15:09:28.306097: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Could not find TensorRT

Traceback (most recent call last):

File “/content//stable-diffusion-webui/launch.py”, line 48, in

main()

File “/content//stable-diffusion-webui/launch.py”, line 44, in main

start()

File “/content/stable-diffusion-webui/modules/launch_utils.py”, line 432, in start

import webui

File “/content/stable-diffusion-webui/webui.py”, line 13, in

initialize.imports()

File “/content/stable-diffusion-webui/modules/initialize.py”, line 21, in imports

import gradio # noqa: F401

File “/usr/local/lib/python3.10/dist-packages/gradio/__init__.py”, line 3, in

import gradio.components as components

File “/usr/local/lib/python3.10/dist-packages/gradio/components/__init__.py”, line 1, in

from gradio.components.annotated_image import AnnotatedImage

File “/usr/local/lib/python3.10/dist-packages/gradio/components/annotated_image.py”, line 12, in

from gradio import utils

File “/usr/local/lib/python3.10/dist-packages/gradio/utils.py”, line 353, in

class AsyncRequest:

File “/usr/local/lib/python3.10/dist-packages/gradio/utils.py”, line 372, in AsyncRequest

client = httpx.AsyncClient()

File “/usr/local/lib/python3.10/dist-packages/httpx/_client.py”, line 1397, in __init__

self._transport = self._init_transport(

File “/usr/local/lib/python3.10/dist-packages/httpx/_client.py”, line 1445, in _init_transport

return AsyncHTTPTransport(

File “/usr/local/lib/python3.10/dist-packages/httpx/_transports/default.py”, line 275, in __init__

self._pool = httpcore.AsyncConnectionPool(

TypeError: AsyncConnectionPool.__init__() got an unexpected keyword argument ‘socket_options’

Same problem.

Same problem 🙁

…TypeError: AsyncConnectionPool.__init__() got an unexpected keyword argument ‘socket_options’…

Seconded… *looks at comments below* Fourthed 😉

Fix: add the line

!pip install httpx==0.24.1

in the notebook, before python.py is run. Whatever httpx is, it needs a downgrade from 0.25.1. (Credit: lilyasviel)

thank you!

Hello! I am having trouble with this. Where in the code do I add the line?

I hope you will reply, thank you!

display the code and add the line command to the penultimate line (just before : !python {root}/stable-diffusion-webui/launch.py {args})

Hi, the notebook is fixed. Please pull the copy updated Nov 4th.

Hi Andrew

The roop extension no longer works. It seems that the huggingface model file is no longer available.

Hi Andrew again

Although the huggingface model file is no longer available, it seems roop is still working on Colab.

Look up FaceSwapLab. It’s not only way better (I created a checkpoint of my face with 40 images, it gives incredible results) but also has the inswapper_128.onnx file included. Save it somewhere separately, because it keeps making appearances, then disappears here and re-appears there. Not sure why, because it was officially released, it’s not pirated.

Unfortunately the _256 and _512 modules have not been released (if anybody has them, I would not hate you for sending me a link…)

Also, Andrew, thanks for “forcing” us to use sdp – it’s twice as fast as xformers at AnimateDiff 8-o

yeah, xformers and sdp are the same thing. We should move on to sdp unless on old machines where it’s not supported.

Yes, the notebook uses an alternative source.

Hi Andrew

I just noticed that you updated the colab with SDP attention instead of XFormers. May I ask what it actually mean and why this change was necessary?

I don’t know, personally, but for me what it means is that the notebook doesn’t work anymore on a v100 without giving a CUDA out of memory error when trying to hi-res fix/upscale a single 512×512 image. (I actually haven’t tried it on the T4 yet). Before the change away from xformers I used to do 4 batches of 4 512×768 images at once with 2x hi-res fix enabled, without any issues. Now I can’t even upscale one. I wonder if there’s a way to turn xformers back on, maybe in the extra_arguments field.

For existing users, do either one:

– Change Settings > Optimizations > Cross attention optimization to Automatic. Apply Settings.

– Or, delete uiconfig.json in AI_PICS folder

Thanks, I’ll try this

So, I checked and my cross attention optimization was already set to automatic. Using the same setup as before with trying to do a single 512×768 image with 2x hi-res fix, I still get the CUDA out of memory error:

OutOfMemoryError: CUDA out of memory. Tried to allocate 9.00 GiB. GPU 0 has a total capacty of 15.77 GiB of which 3.64 GiB is free. Process 124835 has 12.13 GiB memory in use. Of the allocated memory 11.25 GiB is allocated by PyTorch, and 462.89 MiB is reserved by PyTorch but unallocated. If reserved but unallocated memory is large try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

I guess I’ll try deleting uiconfig.json and re-initializing all my UI settings to see if that does anything…

Yeah, that didn’t change anything. It just gives the same error.

Is there any way to use the older version of the notebook, or was this a change that google made to colab that makes us no longer able to use xformers?

The notebook didn’t change but colab environment did. I can reproduce your error in V100 but haven’t find a way to fix it yet. It works on T4 with sdp attention, which is the pytorch native implementation of xformers. I will try more when I have time, but switch to T4 or test other machine types for now.

Change it to sdp – single dot product. I think Automatic still tries to use xformers (which isn’t there) or doggettx.

It doesn’t work anymore with a colab update. You will need to update the settings after this change. Do either one:

– Change Settings > Optimizations > Cross attention optimization to Automatic. Apply Settings.

– Or, delete uiconfig.json in AI_PICS folder

Hi Andrew

Where can I find the “Change Settings” menu? Is it on the colab page? I can’t find it. Or do I need to find it somewhere else?

In A1111, setting page.

Thank you, Andrew.

The notebook stopped working today. Getting this error when clicking Generate:

RuntimeError: dictionary changed size during iteration

0% 0/20 [00:00<?, ?it/s]

*** Error completing request

ALSO Seeing this new error during the build:

WARNING:xformers:WARNING[XFORMERS]: xFormers can't load C++/CUDA extensions. xFormers was built for:

PyTorch 2.0.1+cu118 with CUDA 1108 (you have 2.1.0+cu118)

Python 3.10.11 (you have 3.10.12)

Please reinstall xformers (see https://github.com/facebookresearch/xformers#installing-xformers)

Memory-efficient attention, SwiGLU, sparse and more won't be available.

Set XFORMERS_MORE_DETAILS=1 for more details

OK, fixed.

Thanks, it works!

Spoke too fast.

I did a fresh install with “save small models etc.”, the only model I download is SD1.5, ControlNet, AnimateDiff. No other settings changed. When I try to animate 512×768, I get:

OutOfMemoryError: CUDA out of memory. Tried to allocate 11.53 GiB. GPU 0 has a total capacty of 15.77 GiB of which 10.83 GiB is free. Process 285530 has 4.94 GiB memory in use. Of the allocated memory 4.15 GiB is allocated by PyTorch, and 407.56 MiB is reserved by PyTorch but unallocated. If reserved but unallocated memory is large try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

I was using my regular install with a different model when this hit first, so I thought I’d try from scratch. I also changed the AnimateDiff setting to “optimise attention layers with sdp” in case that would help. No other extensions, no other changes. Colab tells me I am only using 3.7GB VRAM.

512×512 just about renders, but after a restart – once I get this error, I can’t render anything at all anymore.

There’s something hardcoded in settings. Do either one:

– Change Settings > Optimizations > Cross attention optimization to Automatic. Apply Settings.

– Or, delete uiconfig.json in AI_PICS folder

Thank you it works now 🙂

I notice the Google Drive requested permissions have changed. Instead of just requesting access to the drive, it’s requesting contacts, file logs, personal information and many other permissions it didn’t ask for before. Are these new permissions part of your updates?

No, they are not my updates.

Good to know – thanks again.

In that case, Google is reaching too far into our private data. Current permissions requested are:

https://www.googleapis.com/auth/userinfo.email https://www.googleapis.com/auth/docs.test https://www.googleapis.com/auth/drive https://www.googleapis.com/auth/drive.activity.readonly https://www.googleapis.com/auth/drive.photos.readonly https://www.googleapis.com/auth/experimentsandconfigs https://www.googleapis.com/auth/peopleapi.readonly openid

These permissions were not required previously. According to the manifest, Google is stating that these permission requests are from the developer, not Google (sigh).

It was working fine till today or yesterday. I have error message

ARNING:xformers:WARNING[XFORMERS]: xFormers can’t load C++/CUDA extensions. xFormers was built for:

PyTorch 2.0.1+cu118 with CUDA 1108 (you have 2.1.0+cu118)

Python 3.10.11 (you have 3.10.12)

Please reinstall xformers (see https://github.com/facebookresearch/xformers#installing-xformers)

Memory-efficient attention, SwiGLU, sparse and more won’t be available.

Set XFORMERS_MORE_DETAILS=1 for more details

Not sure how to go about reinstalling xformers from the site, it seems like i have newer versions and need to go back to the old ones..

Same. Colab welcomed me with excited information that Google updated things. Yay. Much excite. Except now xformers won’t load, FaceSwapLab won’t either, and it’s impossible to render anything… Thanks for nothing, GOOGLE.

Andrew – will you be maintaining this notebook, or have you completely moved to HorrorUI– typo, ComfyUI?

Fixed: change “Cross attention optimisation” to “sdp – scaled dot product.”

I’m getting this comment when I run A1111:

WARNING:xformers:WARNING[XFORMERS]: xFormers can’t load C++/CUDA extensions. xFormers was built for:

PyTorch 2.0.1+cu118 with CUDA 1108 (you have 2.1.0+cu118)

Python 3.10.11 (you have 3.10.12)

Please reinstall xformers (see https://github.com/facebookresearch/xformers#installing-xformers)

Memory-efficient attention, SwiGLU, sparse and more won’t be available.

Set XFORMERS_MORE_DETAILS=1 for more details

It won’t run. What goes wrong?

It reports the following:

Apply lowram patch

/content/stable-diffusion-webui

WEBUI ARGUMENTS: –gradio-img2img-tool color-sketch –enable-insecure-extension-access –gradio-queue –xformers –share –gradio-auth buzzzen:Tk9^=e,t –disable-model-loading-ram-optimization

Python 3.10.12 (main, Jun 11 2023, 05:26:28) [GCC 11.4.0]

Version: v1.6.0

Commit hash: 5ef669de080814067961f28357256e8fe27544f4

Installing xformers

Launching Web UI with arguments: –gradio-img2img-tool color-sketch –enable-insecure-extension-access –gradio-queue –xformers –share –gradio-auth buzzzen:Tk9^=e,t –disable-model-loading-ram-optimization

2023-10-12 12:45:35.327918: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Could not find TensorRT

Checkpoint sd_xl_base_1.0.safetensors [31e35c80fc] not found; loading fallback v1-5-pruned-emaonly.ckpt

Calculating sha256 for /content/stable-diffusion-webui/models/Stable-diffusion/v1-5-pruned-emaonly.ckpt: Traceback (most recent call last):

File “/content//stable-diffusion-webui/launch.py”, line 48, in

main()

File “/content//stable-diffusion-webui/launch.py”, line 44, in main

start()

File “/content/stable-diffusion-webui/modules/launch_utils.py”, line 436, in start

webui.webui()

File “/content/stable-diffusion-webui/webui.py”, line 79, in webui

app, local_url, share_url = shared.demo.launch(

File “/usr/local/lib/python3.10/dist-packages/gradio/blocks.py”, line 1896, in launch

) = networking.start_server(

File “/usr/local/lib/python3.10/dist-packages/gradio/networking.py”, line 131, in start_server

app = App.create_app(blocks, app_kwargs=app_kwargs)

File “/usr/local/lib/python3.10/dist-packages/gradio/routes.py”, line 175, in create_app

app.configure_app(blocks)

File “/usr/local/lib/python3.10/dist-packages/gradio/routes.py”, line 131, in configure_app

self.auth = {account[0]: account[1] for account in auth}

File “/usr/local/lib/python3.10/dist-packages/gradio/routes.py”, line 131, in

self.auth = {account[0]: account[1] for account in auth}

IndexError: tuple index out of range

cc6cb27103417325ff94f52b7a5d2dde45a7515b25c255d8e396c90014281516

Loading weights [cc6cb27103] from /content/stable-diffusion-webui/models/Stable-diffusion/v1-5-pruned-emaonly.ckpt

Creating model from config: /content/stable-diffusion-webui/configs/v1-inference.yaml

(…)it-large-patch14/resolve/main/vocab.json: 100% 961k/961k [00:00<00:00, 13.9MB/s]

(…)it-large-patch14/resolve/main/merges.txt: 100% 525k/525k [00:00<00:00, 42.2MB/s]

(…)h14/resolve/main/special_tokens_map.json: 100% 389/389 [00:00<00:00, 1.67MB/s]

(…)tch14/resolve/main/tokenizer_config.json: 100% 905/905 [00:00<00:00, 4.04MB/s]

(…)t-large-patch14/resolve/main/config.json: 100% 4.52k/4.52k [00:00<00:00, 16.4MB/s]

Applying attention optimization: xformers… done.

Model loaded in 36.6s (calculate hash: 19.3s, load weights from disk: 11.5s, create model: 3.6s, apply weights to model: 1.1s, load textual inversion embeddings: 0.3s, calculate empty prompt: 0.7s).

Hi, I cannot reproduce the error. txt2img is working correctly.

Hi Andrew

I just read your new AnimateDiff tutorial (https://stable-diffusion-art.com/animatediff) and tried to use Video Source with ControlNet on this Google Colab.

I drag a short video (2 seconds with FPS is 30), so total 60 frames. Enabled ControlNet and set the Openpose as you explained. I click the Generate button and waited a bit, but it says “RuntimeError: The size of tensor a (60) must match the size of tensor b (120) at non-singleton dimension 0”

Below is the whole message on the Colab notebook output section. I tried with different checkpoint models with different prompts. But the same error messages occur. I can generate GIF successfully without Video Source. The errors happens when I put a Video Source with ControlNet. Please help.

>>>>>>>>>>>>>>

Total progress: 100% 30/30 [00:10<00:00, 2.84it/s]

2023-10-10 11:54:00,974 – AnimateDiff – INFO – AnimateDiff process start.

Calculating sha256 for /content/stable-diffusion-webui/extensions/sd-webui-animatediff/model/mm_sd_v15_v2.ckpt: 69ed0f5fef82b110aca51bcab73b21104242bc65d6ab4b8b2a2a94d31cad1bf0

2023-10-10 11:54:05,947 – AnimateDiff – INFO – You are using mm_sd_v15_v2.ckpt, which has been tested and supported.

2023-10-10 11:54:05,948 – AnimateDiff – INFO – Loading motion module mm_sd_v15_v2.ckpt from /content/stable-diffusion-webui/extensions/sd-webui-animatediff/model/mm_sd_v15_v2.ckpt

2023-10-10 11:54:10,660 – AnimateDiff – WARNING – Missing keys

2023-10-10 11:54:11,164 – AnimateDiff – INFO – Injecting motion module mm_sd_v15_v2.ckpt into SD1.5 UNet middle block.

2023-10-10 11:54:11,164 – AnimateDiff – INFO – Injecting motion module mm_sd_v15_v2.ckpt into SD1.5 UNet input blocks.

2023-10-10 11:54:11,164 – AnimateDiff – INFO – Injecting motion module mm_sd_v15_v2.ckpt into SD1.5 UNet output blocks.

2023-10-10 11:54:11,164 – AnimateDiff – INFO – Setting DDIM alpha.

2023-10-10 11:54:11,188 – AnimateDiff – INFO – Injection finished.

2023-10-10 11:54:11,188 – AnimateDiff – INFO – Hacking lora to support motion lora

2023-10-10 11:54:11,188 – AnimateDiff – INFO – Hacking CFGDenoiser forward function.

2023-10-10 11:54:11,189 – AnimateDiff – INFO – Hacking ControlNet.

2023-10-10 11:54:12,607 – ControlNet – INFO – Loading model: control_v11p_sd15_openpose [cab727d4]

2023-10-10 11:54:20,298 – ControlNet – INFO – Loaded state_dict from [/content/stable-diffusion-webui/extensions/sd-webui-controlnet/models/control_v11p_sd15_openpose.pth]

2023-10-10 11:54:20,298 – ControlNet – INFO – controlnet_default_config

2023-10-10 11:54:23,089 – ControlNet – INFO – ControlNet model control_v11p_sd15_openpose [cab727d4] loaded.

2023-10-10 11:54:23,637 – ControlNet – INFO – Loading preprocessor: dw_openpose_full

2023-10-10 11:54:23,637 – ControlNet – INFO – preprocessor resolution = 512

Downloading: “https://huggingface.co/yzd-v/DWPose/resolve/main/yolox_l.onnx” to /content/stable-diffusion-webui/extensions/sd-webui-controlnet/annotator/downloads/openpose/yolox_l.onnx

100% 207M/207M [00:00<00:00, 347MB/s]

Downloading: "https://huggingface.co/yzd-v/DWPose/resolve/main/dw-ll_ucoco_384.onnx" to /content/stable-diffusion-webui/extensions/sd-webui-controlnet/annotator/downloads/openpose/dw-ll_ucoco_384.onnx

100% 128M/128M [00:00<00:00, 318MB/s]

2023-10-10 11:56:22,694 – ControlNet – INFO – ControlNet Hooked – Time = 130.45434308052063

0% 0/30 [00:08<?, ?it/s]

*** Error completing request

*** Arguments: ('task(vc4hmbdxtwxkuj2)', 'pretty 1girl, absurdres, 8k uhd, platinum blonde hair, bob cut, medium breasts, black tshirt, denim shorts, garden background, flowers, magnolias, apple tree, close up portrait', '3d, cartoon, anime, sketches, (worst quality, bad quality, child, cropped:1.4) ((monochrome)), ((grayscale)), (bad-hands-5:1.0), (badhandv4:1.0), (easynegative:0.8), (bad-artist-anime:0.8), (bad-artist:0.8), (bad_prompt:0.8), (bad-picture-chill-75v:0.8), (bad_prompt_version2:0.8), (bad_quality:0.8)', [], 30, 'DPM++ 2M Karras', 1, 1, 8.5, 768, 512, False, 0.55, 2, '4x_foolhardy_Remacri', 36, 0, 0, 'Use same checkpoint', 'Use same sampler', '', '', [], , 0, False, ”, 0.8, 1514816117, False, -1, 0, 0, 0, , , , , False, False, ‘positive’, ‘comma’, 0, False, False, ”, 1, ”, [], 0, ”, [], 0, ”, [], True, False, False, False, 0, False, None, None, False, None, None, False, None, None, False, 50) {}

Traceback (most recent call last):

File “/content/stable-diffusion-webui/modules/call_queue.py”, line 57, in f

res = list(func(*args, **kwargs))

File “/content/stable-diffusion-webui/modules/call_queue.py”, line 36, in f

res = func(*args, **kwargs)

File “/content/stable-diffusion-webui/modules/txt2img.py”, line 55, in txt2img

processed = processing.process_images(p)

File “/content/stable-diffusion-webui/modules/processing.py”, line 732, in process_images

res = process_images_inner(p)

File “/content/stable-diffusion-webui/extensions/sd-webui-animatediff/scripts/animatediff_cn.py”, line 108, in hacked_processing_process_images_hijack

return getattr(processing, ‘__controlnet_original_process_images_inner’)(p, *args, **kwargs)

File “/content/stable-diffusion-webui/modules/processing.py”, line 867, in process_images_inner

samples_ddim = p.sample(conditioning=p.c, unconditional_conditioning=p.uc, seeds=p.seeds, subseeds=p.subseeds, subseed_strength=p.subseed_strength, prompts=p.prompts)

File “/content/stable-diffusion-webui/extensions/sd-webui-controlnet/scripts/hook.py”, line 451, in process_sample

return process.sample_before_CN_hack(*args, **kwargs)

File “/content/stable-diffusion-webui/modules/processing.py”, line 1140, in sample

samples = self.sampler.sample(self, x, conditioning, unconditional_conditioning, image_conditioning=self.txt2img_image_conditioning(x))

File “/content/stable-diffusion-webui/modules/sd_samplers_kdiffusion.py”, line 235, in sample

samples = self.launch_sampling(steps, lambda: self.func(self.model_wrap_cfg, x, extra_args=self.sampler_extra_args, disable=False, callback=self.callback_state, **extra_params_kwargs))

File “/content/stable-diffusion-webui/modules/sd_samplers_common.py”, line 261, in launch_sampling

return func()

File “/content/stable-diffusion-webui/modules/sd_samplers_kdiffusion.py”, line 235, in

samples = self.launch_sampling(steps, lambda: self.func(self.model_wrap_cfg, x, extra_args=self.sampler_extra_args, disable=False, callback=self.callback_state, **extra_params_kwargs))

File “/usr/local/lib/python3.10/dist-packages/torch/utils/_contextlib.py”, line 115, in decorate_context

return func(*args, **kwargs)

File “/content/stable-diffusion-webui/repositories/k-diffusion/k_diffusion/sampling.py”, line 594, in sample_dpmpp_2m

denoised = model(x, sigmas[i] * s_in, **extra_args)

File “/usr/local/lib/python3.10/dist-packages/torch/nn/modules/module.py”, line 1501, in _call_impl

return forward_call(*args, **kwargs)

File “/content/stable-diffusion-webui/extensions/sd-webui-animatediff/scripts/animatediff_infv2v.py”, line 271, in mm_cfg_forward

x_out[a:b] = self.inner_model(x_in[a:b], sigma_in[a:b], cond=make_condition_dict(c_crossattn, image_cond_in[a:b]))

File “/usr/local/lib/python3.10/dist-packages/torch/nn/modules/module.py”, line 1501, in _call_impl

return forward_call(*args, **kwargs)

File “/content/stable-diffusion-webui/repositories/k-diffusion/k_diffusion/external.py”, line 112, in forward

eps = self.get_eps(input * c_in, self.sigma_to_t(sigma), **kwargs)

File “/content/stable-diffusion-webui/repositories/k-diffusion/k_diffusion/external.py”, line 138, in get_eps

return self.inner_model.apply_model(*args, **kwargs)

File “/content/stable-diffusion-webui/modules/sd_hijack_utils.py”, line 17, in

setattr(resolved_obj, func_path[-1], lambda *args, **kwargs: self(*args, **kwargs))

File “/content/stable-diffusion-webui/modules/sd_hijack_utils.py”, line 28, in __call__

return self.__orig_func(*args, **kwargs)

File “/content/stable-diffusion-webui/repositories/stable-diffusion-stability-ai/ldm/models/diffusion/ddpm.py”, line 858, in apply_model

x_recon = self.model(x_noisy, t, **cond)

File “/usr/local/lib/python3.10/dist-packages/torch/nn/modules/module.py”, line 1501, in _call_impl

return forward_call(*args, **kwargs)

File “/content/stable-diffusion-webui/repositories/stable-diffusion-stability-ai/ldm/models/diffusion/ddpm.py”, line 1335, in forward

out = self.diffusion_model(x, t, context=cc)

File “/usr/local/lib/python3.10/dist-packages/torch/nn/modules/module.py”, line 1501, in _call_impl

return forward_call(*args, **kwargs)

File “/content/stable-diffusion-webui/extensions/sd-webui-controlnet/scripts/hook.py”, line 858, in forward_webui

raise e

File “/content/stable-diffusion-webui/extensions/sd-webui-controlnet/scripts/hook.py”, line 855, in forward_webui

return forward(*args, **kwargs)

File “/content/stable-diffusion-webui/extensions/sd-webui-controlnet/scripts/hook.py”, line 592, in forward

control = param.control_model(x=x_in, hint=hint, timesteps=timesteps, context=context, y=y)

File “/usr/local/lib/python3.10/dist-packages/torch/nn/modules/module.py”, line 1501, in _call_impl

return forward_call(*args, **kwargs)

File “/content/stable-diffusion-webui/extensions/sd-webui-controlnet/scripts/cldm.py”, line 31, in forward

return self.control_model(*args, **kwargs)

File “/usr/local/lib/python3.10/dist-packages/torch/nn/modules/module.py”, line 1501, in _call_impl

return forward_call(*args, **kwargs)

File “/content/stable-diffusion-webui/extensions/sd-webui-controlnet/scripts/cldm.py”, line 311, in forward

h += guided_hint