Hunyuan Video is a new open-source video model that runs locally and produces exceptional quality. It can generate a short video clip from a text prompt alone in a few minutes. It is ideal for content creators such as YouTubers who need B-roll for their videos.

Below is an example of Hunyuan Video.

In this tutorial, I will show you how to use Hunyuan Video in the following modes.

- Text-to-video

- Text-to-image

Software

We will use ComfyUI, a free AI image and video generator. You can use it on Windows, Mac, or Google Colab.

Think Diffusion provides an online ComfyUI service. They offer an extra 20% credit to our readers.

Read the ComfyUI beginner’s guide if you are new to ComfyUI. See the Quick Start Guide if you are new to AI images and videos.

Take the ComfyUI course to learn how to use ComfyUI step by step.

What is Hunyuan video?

Tencent’s HunyuanVideo is an open-source AI model for text-to-video generation, distinguished by several key features and innovations:

- Large model: With 13 billion parameters, HunyuanVideo is the largest open-source text-to-video model. It is larger than Mochi (10 billion), CogVideoX (5 billion), and LTX (2 billion).

- Unified Image and Video Generation: HunyuanVideo employs a “dual-stream to single-stream” hybrid transformer model design. In the dual-stream phase, video and text tokens are processed independently, allowing them to learn modulation mechanisms without interference. In the single-stream phase, the model joins the video and text tokens to fuse the information, enhancing the generation of both images and videos.

- Multimodal LLM Text Encoder: Unlike other video models, Hunyuan uses a visual LLM as its text encoder for higher-quality image-text alignment.

- 3D VAE: Hunyuan uses CausalConv3D to compress videos and images into latent space. This compression significantly reduces the resource requirement while maintaining the causal relations in the video.

- Prompt Rewrite Mechanism: To handle variability in user-provided prompts, HunyuanVideo includes a prompt rewrite model fine-tuned from the Hunyuan-Large model. It offers two modes: Normal and Master.

- Camera motion: The model is trained with many camera movements in the text prompt. You can use the following: zoom in, zoom out, pan up, pan down, pan left, pan right, tilt up, tilt down, tilt left, tilt right, around left, around right, static shot, handheld shot.
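
As a small illustration of the camera-motion keywords above, here is a sketch of a helper that appends one of the listed phrases to a prompt. The function name `add_camera_move`, the sample prompt, and the choice to append the phrase at the end are all assumptions made for illustration; the model does not require any particular placement.

```python
# Camera-motion phrases listed in this tutorial.
CAMERA_MOVES = [
    "zoom in", "zoom out", "pan up", "pan down", "pan left", "pan right",
    "tilt up", "tilt down", "tilt left", "tilt right",
    "around left", "around right", "static shot", "handheld shot",
]


def add_camera_move(prompt: str, move: str) -> str:
    """Append a camera-motion phrase to a text prompt (hypothetical helper)."""
    if move not in CAMERA_MOVES:
        raise ValueError(f"Unknown camera move: {move!r}")
    # Drop trailing punctuation so the phrase joins cleanly.
    return f"{prompt.rstrip(' .,')}, {move}."


print(add_camera_move("A panda walking through a bamboo forest", "zoom in"))
```

Paste the resulting text into the prompt box of the workflow as you would any other prompt.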

Generation time

Hunyuan Video generates an 848 x 480 (480p) video with 73 frames in:

- 4.5 mins on my RTX 4090.

- 11 mins on Google Colab with an L4 runtime.
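
To compare the two timings, it can help to convert them to an average wall-clock cost per frame. The sketch below only does that arithmetic. The model denoises the whole clip jointly, so this is an average and not the time to render any single frame.

```python
FRAMES = 73  # frame count of the 848 x 480 test video


def seconds_per_frame(total_minutes: float, frames: int = FRAMES) -> float:
    """Average wall-clock seconds per frame for a full generation run."""
    return total_minutes * 60 / frames


for name, minutes in [("RTX 4090", 4.5), ("Colab L4", 11)]:
    print(f"{name}: {seconds_per_frame(minutes):.1f} s per frame")
```

This works out to roughly 3.7 seconds per frame on the RTX 4090 and 9.0 seconds per frame on the L4.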

Hardware requirement

You will need an NVIDIA GPU to run this workflow. People have reported running Hunyuan Video on ComfyUI with as little as 8 GB of VRAM. The workflows in this tutorial were tested on an RTX 4090 with 24 GB of VRAM.
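
If you are unsure how much VRAM your card has, a quick check along these lines may help. It is a minimal sketch that assumes you run it with the same Python environment ComfyUI uses, so that PyTorch is importable. The helper names `vram_gib` and `meets_reported_minimum` are made up for this example, and the 8 GB threshold is only the lowest figure readers have reported, not a guarantee.

```python
def vram_gib(total_bytes: int) -> float:
    """Convert a byte count to GiB, rounded to one decimal place."""
    return round(total_bytes / 1024**3, 1)


def meets_reported_minimum(total_bytes: int, minimum_gb: int = 8) -> bool:
    """Compare against the lowest VRAM figure reported to work (8 GB).

    Cards often report slightly less than their nominal size,
    so the value is rounded to the nearest whole GiB first.
    """
    return round(total_bytes / 1024**3) >= minimum_gb


try:
    import torch
except ImportError:  # PyTorch is not installed in this environment
    torch = None

if torch is not None and torch.cuda.is_available():
    props = torch.cuda.get_device_properties(0)
    print(f"{props.name}: {vram_gib(props.total_memory)} GiB")
    print("At or above 8 GB:", meets_reported_minimum(props.total_memory))
else:
    print("No CUDA GPU visible to PyTorch in this environment.")
```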

Hunyuan Text-to-video workflow

The following workflow generates a Hunyuan video in 480p (848 x 480 pixels) and saves it as an MP4 file.

The instructions below are for local installation. If you use my ComfyUI Colab notebook, select the HunyuanVideo model.

Switch the runtime type to L4.

Jump to Step 4 to load the workflow.

Step 0: Update ComfyUI

Before loading the workflow, make sure your ComfyUI is up-to-date. The easiest way to do this is to use ComfyUI Manager.

Click the Manager button on the top toolbar.

Select Update ComfyUI.

Restart ComfyUI.

Step 1: Download video model

Download the Hunyuan video text-to-video model and put it in ComfyUI > models > diffusion_models.

Step 2: Download text encoders

Download clip_l.safetensors and llava_llama3_fp8_scaled.safetensors.

Put them in ComfyUI > models > text_encoders.

Step 3: Download VAE

Download hunyuan_video_vae_bf16.safetensors and put it in ComfyUI > models > vae.
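
Before loading the workflow, you may want to confirm that the files from Steps 1 to 3 ended up in the right folders. The sketch below checks for them under a ComfyUI root folder. The diffusion model filename `hunyuan_video_t2v_720p_bf16.safetensors` is taken from a reader's report and is an assumption here, as is the root path `ComfyUI`; adjust both to match your installation.

```python
from pathlib import Path

# Folder under ComfyUI/models -> files expected there.
EXPECTED_FILES = {
    # Assumed filename of the video model from Step 1.
    "diffusion_models": ["hunyuan_video_t2v_720p_bf16.safetensors"],
    "text_encoders": [
        "clip_l.safetensors",
        "llava_llama3_fp8_scaled.safetensors",
    ],
    "vae": ["hunyuan_video_vae_bf16.safetensors"],
}


def missing_model_files(comfyui_root) -> list:
    """Return the expected model paths that do not exist yet."""
    models_dir = Path(comfyui_root) / "models"
    missing = []
    for folder, names in EXPECTED_FILES.items():
        for name in names:
            path = models_dir / folder / name
            if not path.is_file():
                missing.append(path)
    return missing


if __name__ == "__main__":
    # "ComfyUI" is a placeholder; point this at your own install folder.
    for path in missing_model_files("ComfyUI"):
        print("Missing:", path)
```

An empty result means all four files were found.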

Step 4: Load workflow

Download the Hunyuan video workflow JSON file below.

Drag and drop it onto the ComfyUI window.

Step 5: Install missing nodes

If you see red blocks, you don’t have the custom node that this workflow needs.

Click Manager > Install missing custom nodes and install the missing nodes.

Restart ComfyUI.

Step 6: Revise prompt

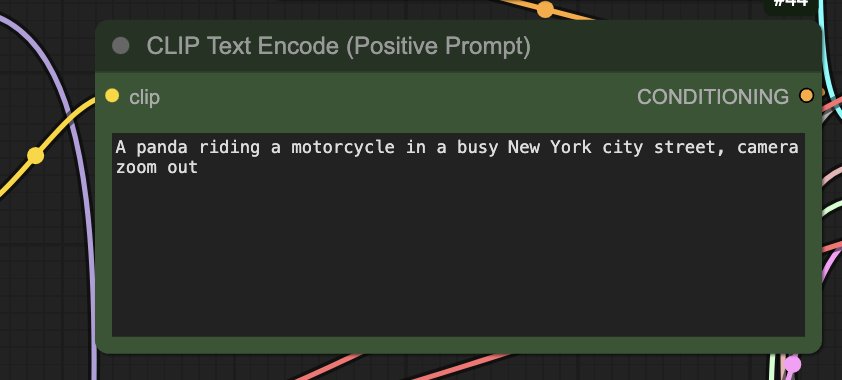

Revise the prompt to what you want to generate.

Step 7: Generate video

Click the Run button to run the workflow.

Troubleshooting

RuntimeError: "replication_pad3d_cuda" not implemented for 'BFloat16'

This error comes from an outdated PyTorch version. This can happen if you have an old ComfyUI installation.

You can see the PyTorch version during startup in the command console. It should be 2.4 or higher.
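
If the startup log has already scrolled away, you can also check the version from Python. This is a minimal sketch that assumes you run it with the Python interpreter ComfyUI uses; the helper names are made up for this example. Comparing the version as a tuple of numbers avoids the trap where the string "2.10" sorts before "2.4".

```python
import re

MIN_VERSION = (2, 4)  # minimum PyTorch version named in this tutorial


def parse_major_minor(version: str) -> tuple[int, int]:
    """Extract (major, minor) from a string such as '2.4.0+cu121'."""
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"Unrecognized version string: {version!r}")
    return int(match.group(1)), int(match.group(2))


def is_new_enough(version: str, minimum: tuple[int, int] = MIN_VERSION) -> bool:
    """True if the version is at least the required major.minor."""
    return parse_major_minor(version) >= minimum


try:
    import torch
    status = "OK" if is_new_enough(torch.__version__) else "too old"
    print(f"PyTorch {torch.__version__}: {status}")
except ImportError:
    print("PyTorch is not installed in this Python environment.")
```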

If you use the Windows Portable version, you can try updating ComfyUI by double-clicking the file ComfyUI_windows_portable > update > update_comfyui_and_python_dependencies.bat.

If it still doesn’t work, you can install a new copy of ComfyUI.

Hunyuan text-to-image workflow

Like Stable Diffusion or Flux, the Hunyuan video model can generate static images. In the workflow, you must set the number of frames to 1 and replace the final video-saving node with a node that previews or saves the image.

For your convenience, I have done all that, and you can use the following workflow JSON file after following the setup in the text-to-video workflow.

Simply revise the prompt and click Run (labeled Queue in older ComfyUI versions).
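
If you would rather adapt your own workflow than use the file above, the frame-count change can be sketched in code. The example assumes a workflow exported in ComfyUI's API format (a dictionary of nodes, each with `class_type` and `inputs`) and assumes the latent node is `EmptyHunyuanLatentVideo` with a `length` input. Both names are assumptions about your workflow, so check them against your own file. The sketch does not swap the saving node; that still has to be done in the editor.

```python
import copy


def set_frame_count(
    workflow: dict,
    frames: int = 1,
    class_type: str = "EmptyHunyuanLatentVideo",  # assumed node class
    input_name: str = "length",                   # assumed input name
) -> dict:
    """Return a copy of an API-format workflow with the frame count changed."""
    patched = copy.deepcopy(workflow)
    changed = 0
    for node in patched.values():
        if isinstance(node, dict) and node.get("class_type") == class_type:
            node.setdefault("inputs", {})[input_name] = frames
            changed += 1
    if changed == 0:
        raise ValueError(f"No node of class {class_type!r} found")
    return patched


# Tiny stand-in for a real workflow, for illustration only.
example = {
    "1": {
        "class_type": "EmptyHunyuanLatentVideo",
        "inputs": {"width": 848, "height": 480, "length": 73, "batch_size": 1},
    }
}
print(set_frame_count(example)["1"]["inputs"]["length"])
```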

The model itself is 25GB, how can people be running it with 8GB VRAM? Has anyone tested it to confirm? Sounds surreal to me. Last time I tried (2070, 8GB) it would take forever to render any video so I deleted the entire stuff.

There is no “queue” button except on the upper left, and it just looked like it’s a queue for ongoing processes. The “Run” button just results in the upper right giving me a red box with an X next to it that says “reconnecting”. All of the instructions were followed exactly as written.

what’s the error message on the terminal? Reconnecting means ComfyUI server is not running.

just wanted to say that it also runs on a 6gb 1060, but its SLOOOOOOOOW

Thanks for trying 😂

Has it finished the video yet? 😉

is there any fast video model I can use with Mac locally ?

How would I wire a LoRA into this flow? I’ve yet to have a successful attempt.

It needs the Hunyuan Video Wrapper custom node. I will publish the workflow soon.

Yes, me too i’m interested where to put the lora exactly

https://stable-diffusion-art.com/hunyuan-video-lora/

I’m getting the error “Prompt outputs failed validation

UNETLoader:

– Value not in list: unet_name: ‘hunyuan_video_t2v_720p_bf16.safetensors’ not in [‘flux1-dev-Q8_0.gguf’]” I’ve downloaded hunyuan_video_t2v_720p_bf16.safetensors to \ComfyUI_windows_portable\ComfyUI\models\diffusion_models but the Load Diffusion Model node seems to be looking in the unet folder instead. However I also tried moving hunyuan_video_t2v_720p_bf16.safetensors to the unet folder and it still will not show up as an option in the node. What am I doing wrong?

Exact same problem here. I am not well versed in the devil’s spaghetti so I did exactly as wrote. 4090, comfy portable, 64 rams, yada yada.

Okay, got it to reproduce the panda movie. All I had to do was delete everything and reinstall comfyui using your method. I tested it at every stage from initial install, installing manager, to movie. Worked. I cannot begin to fathom why or what caused the problem as I’m not versed in the runes python uses for it’s hexes.

Yeah I have done that couple times….

Mmm… You should at least see the file. It can be put in either the unet or diffusion_models folder.

Try updating comfyui.

these files need to go in the clip folder, not the text_encoder folder

Can you please share Image-to-Video workflow as well?

They didn’t release an image-to-video model. But I will cover the ip-adapter workflow (ip2v) which uses an image for conditioning.

If I use mimicmypc ai

Can your tutorial help me?

Your blog is well written

However, I would like to see you on youtube offering the DEMO

If your card supports bf16 mode, the video can be generated with 8 GB of VRAM. If it doesn’t, you can’t generate one even with 12 GB.

That explains why it errors out on a Colab T4 instance.