Generating images with a consistent style is a valuable technique in Stable Diffusion for creative works like logos or book illustrations. This article provides step-by-step guides for creating them in Stable Diffusion.

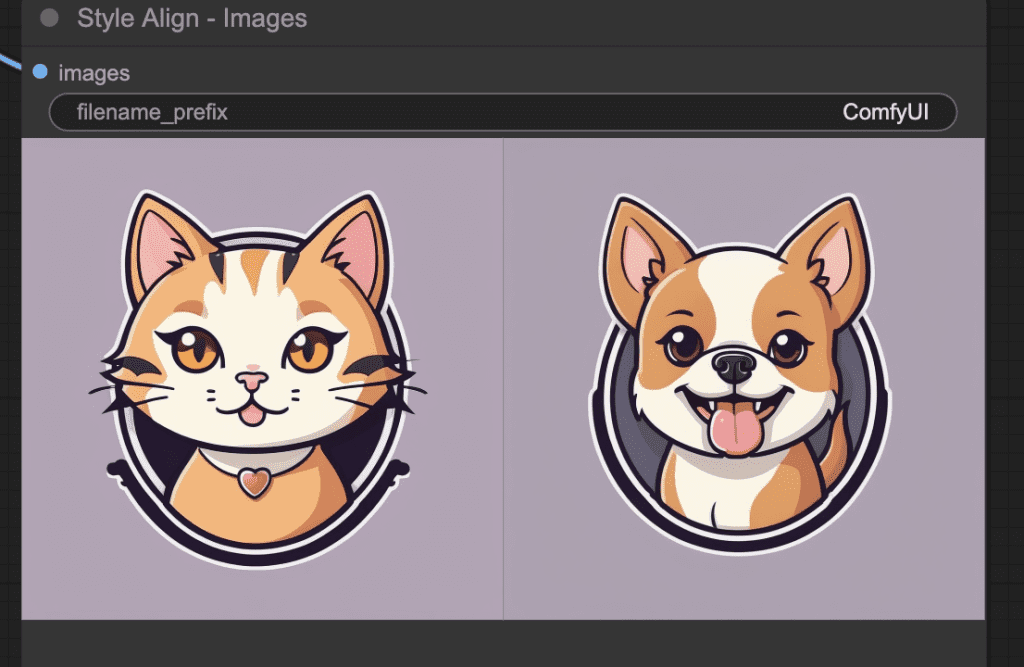

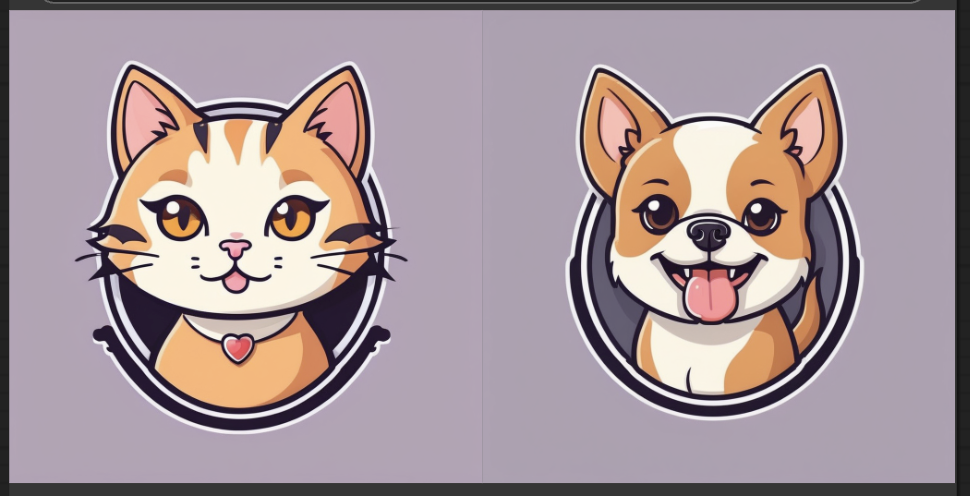

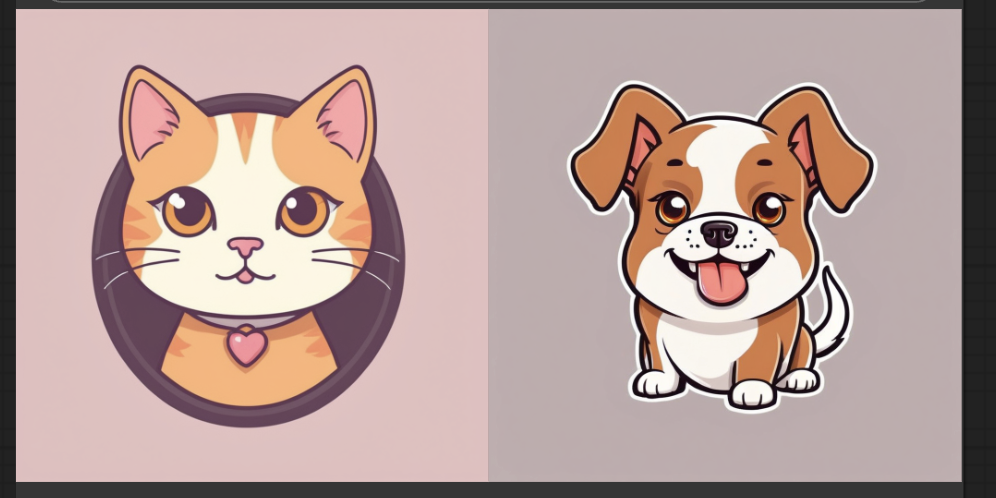

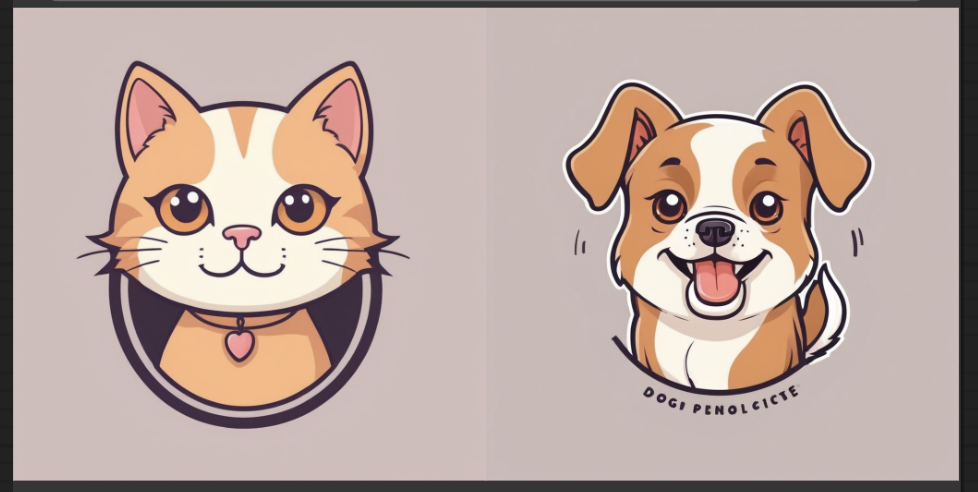

See the following examples of consistent logos created using the technique described in this article.

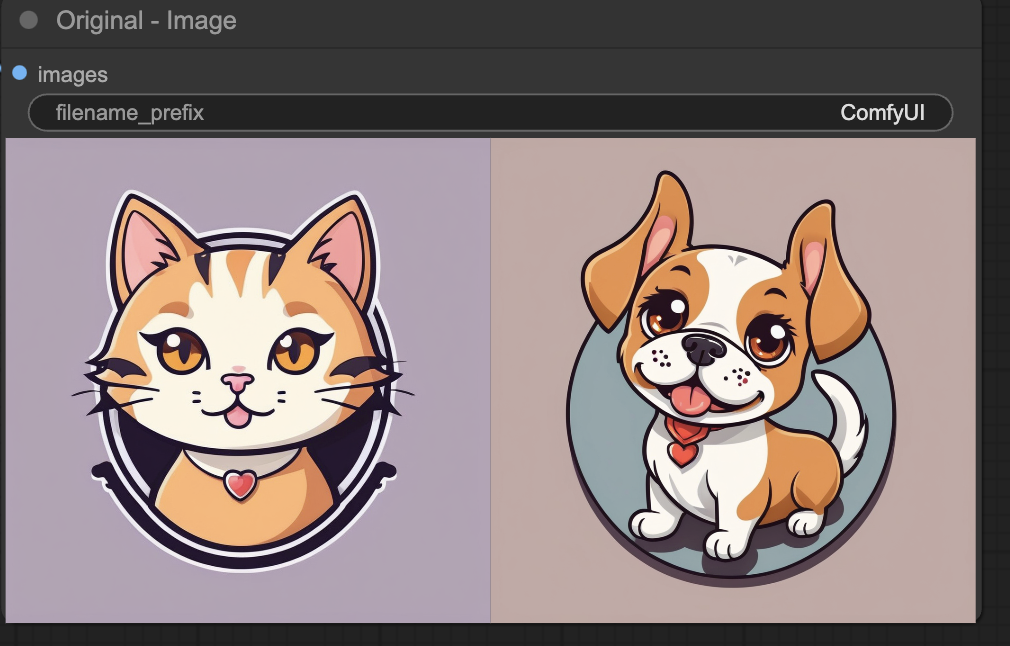

You can also use these techniques to generate any set of images in a consistent style.

This article will cover the following topics.

- Consistent style with Style Aligned (AUTOMATIC1111 and ComfyUI)

- Consistent style with ControlNet Reference (AUTOMATIC1111)

- The implementation difference between AUTOMATIC1111 and ComfyUI

- How to use them in AUTOMATIC1111 and ComfyUI


Software

You can use Style Aligned with both AUTOMATIC1111 and ComfyUI, and this article covers both. The caveat is that their implementations differ and yield different results. More on this later.

AUTOMATIC1111

We will use AUTOMATIC1111, a popular and free Stable Diffusion software. Check out the installation guides on Windows, Mac, or Google Colab.

If you are new to Stable Diffusion, check out the Quick Start Guide.

Take the Stable Diffusion course if you want to build solid skills and understanding.

Check out the AUTOMATIC1111 Guide if you are new to AUTOMATIC1111.

ComfyUI

We will use ComfyUI, a free AI image and video generator. You can use it on Windows, Mac, or Google Colab.

Think Diffusion provides an online ComfyUI service. They offer an extra 20% credit to our readers.

Read the ComfyUI beginner’s guide if you are new to ComfyUI. See the Quick Start Guide if you are new to AI images and videos.

Take the ComfyUI course to learn how to use ComfyUI step by step.

How does style transfer work?

We will study two techniques to transfer styles in Stable Diffusion: (1) Style Aligned, and (2) ControlNet Reference.

Style Aligned

Style Aligned shares attention across a batch of images to render similar styles. Let’s go through how it works.

Stable Diffusion models use the attention mechanism to control image generation. There are two types of attention in Stable Diffusion.

- Cross Attention: Attention between the prompt and the image. This is how the prompt steers image generation during sampling.

- Self-Attention: An image’s regions interact with each other to ensure quality and consistency.

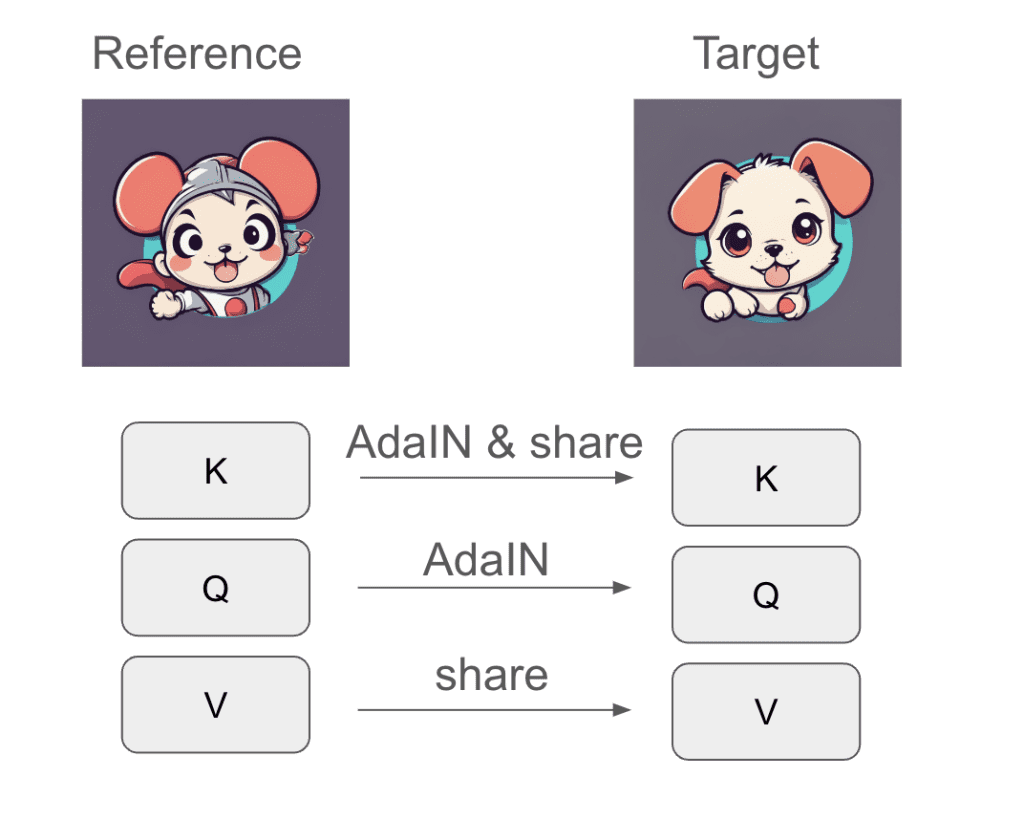

Style Aligned lets images in the same batch share information during self-attention. Three important quantities of attention are query (Q), key (K), and value (V). In self-attention, they are all derived from the latent image. (In cross-attention, the query is still derived from the latent image, while the key and value are derived from the prompt.)
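To make the roles of Q, K, and V concrete, here is a minimal NumPy sketch of the two attention types. It is only an illustration: the sizes, the random inputs, and the projection matrices `w_q`, `w_k`, and `w_v` are hypothetical stand-ins for what a real model learns.

```python
import numpy as np

def attention(q, k, v):
    """Scaled dot-product attention: softmax(q k^T / sqrt(d)) v."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v

rng = np.random.default_rng(0)
d = 8
latent = rng.normal(size=(16, d))  # 16 image regions (hypothetical size)
prompt = rng.normal(size=(5, d))   # 5 prompt tokens (hypothetical size)

# Hypothetical projection matrices; a real model learns these per layer.
w_q, w_k, w_v = (rng.normal(size=(d, d)) for _ in range(3))

# Self-attention: Q, K, and V all come from the latent image.
self_out = attention(latent @ w_q, latent @ w_k, latent @ w_v)

# Cross-attention: Q comes from the latent image, K and V from the prompt.
cross_out = attention(latent @ w_q, prompt @ w_k, prompt @ w_v)
```

In both cases the output has one row per image region, because the queries always come from the image.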

Style Aligned injects the style of a reference image by adjusting the queries and keys of the target images to have the same mean and variance as those of the reference. This operation is called Adaptive Instance Normalization (AdaIN) and is widely used in style transfer. The target images also share the keys and values of the reference image. See the figure below.

The paper is quite specific about where to apply AdaIN and attention sharing. As we will see later, the exact choice doesn’t matter that much: applying AdaIN and sharing in different ways achieves similar results.
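The idea can be sketched in a few lines of NumPy. This is a simplified illustration for one target image and one reference image, not the paper's or any extension's actual code. The function names are my own, and a real model applies this per attention head inside the U-Net.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def adain(x, ref, eps=1e-5):
    """Shift and scale x so each channel has the mean and std of ref.

    Rows are image regions (tokens), columns are channels.
    """
    x_mean, x_std = x.mean(axis=0), x.std(axis=0) + eps
    r_mean, r_std = ref.mean(axis=0), ref.std(axis=0) + eps
    return (x - x_mean) / x_std * r_std + r_mean

def style_aligned_attention(q_t, k_t, v_t, q_r, k_r, v_r):
    """Self-attention of a target image (t) that borrows from a reference (r)."""
    # Step 1: AdaIN on the target's queries and keys.
    q = adain(q_t, q_r)
    k = adain(k_t, k_r)
    # Step 2: the target attends to the reference's keys/values and its own.
    keys = np.concatenate([k_r, k], axis=0)
    values = np.concatenate([v_r, v_t], axis=0)
    weights = softmax(q @ keys.T / np.sqrt(q.shape[-1]))
    return weights @ values
```

After `adain`, the target's queries and keys have the reference's per-channel statistics, while the spatial pattern of the target is preserved.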

Consistent style in AUTOMATIC1111

Although both AUTOMATIC1111 and ComfyUI claim to support Style Align, their implementations differ.

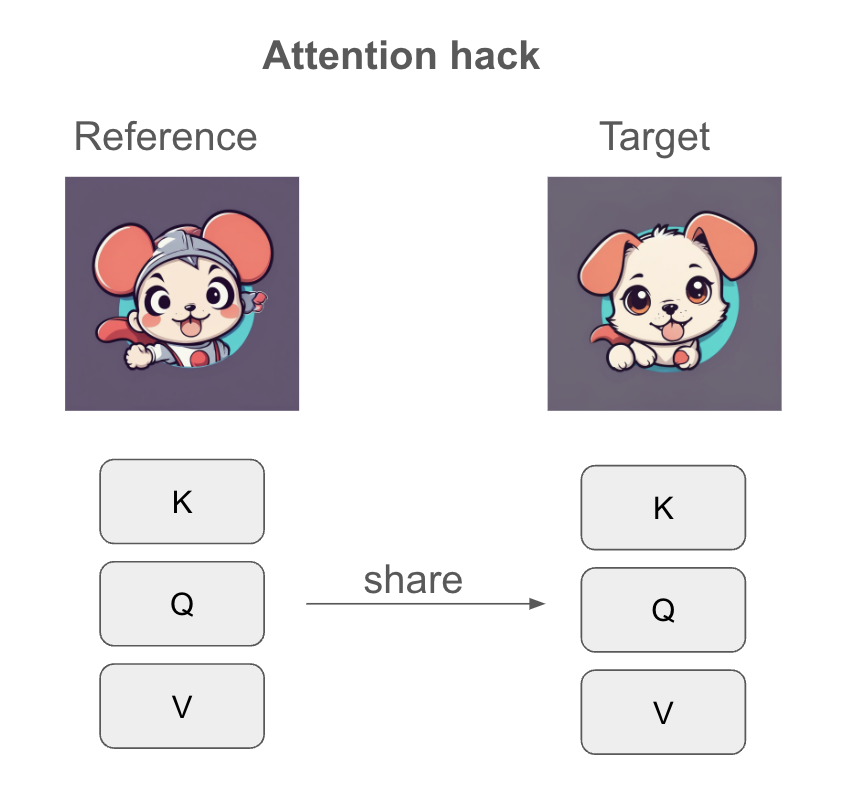

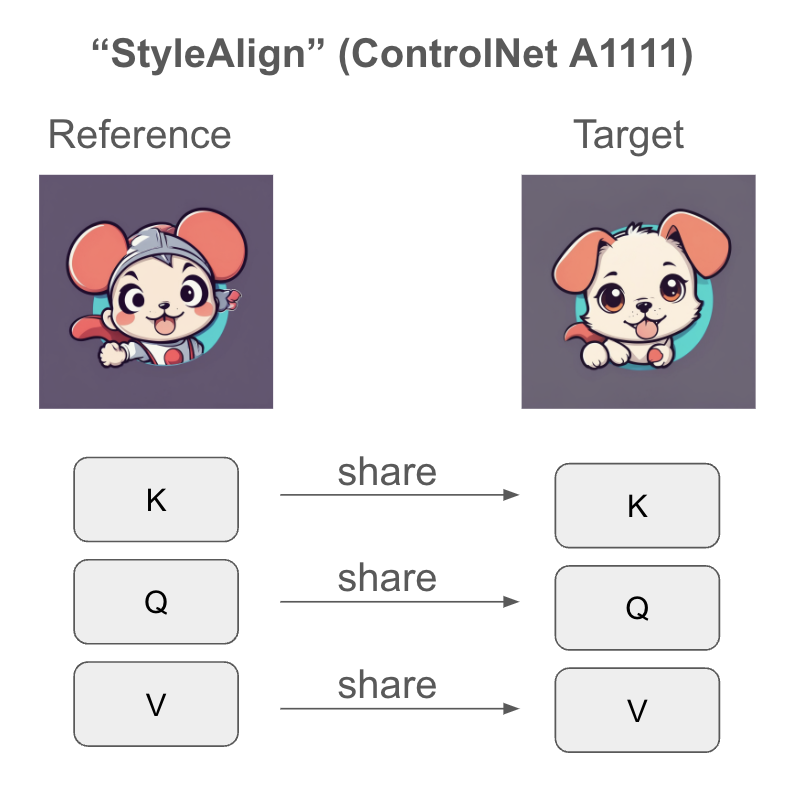

In AUTOMATIC1111, the Style Align option in ControlNet is NOT the Style Aligned method described in the paper. It is a simplified version that the paper’s authors called fully shared attention. It simply joins the queries, keys, and values of the images together in self-attention. In other words, it allows the regions of all images in the same batch to interact during sampling.

As we will see, this approach is not ideal because too much information is shared across the images, causing the images to lose their uniqueness.
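Here is a rough NumPy sketch of what fully shared attention amounts to, under the simplifying assumption of a single attention head. It is an illustration of the idea, not the extension's code: the batch is flattened into one long sequence, so every region of every image attends to every region of every other image.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def self_attention(q, k, v):
    return softmax(q @ k.T / np.sqrt(q.shape[-1])) @ v

def fully_shared_attention(q, k, v):
    """q, k, v have shape (batch, tokens, dim)."""
    b, n, d = q.shape
    # Join all images into one sequence of b * n tokens.
    out = self_attention(
        q.reshape(b * n, d), k.reshape(b * n, d), v.reshape(b * n, d)
    )
    # Split the result back into one output per image.
    return out.reshape(b, n, d)

rng = np.random.default_rng(0)
q, k, v = (rng.normal(size=(3, 16, 8)) for _ in range(3))  # batch of 3 images

shared = fully_shared_attention(q, k, v)
alone = fully_shared_attention(q[:1], k[:1], v[:1])  # first image by itself
```

The first image's output in `shared` differs from `alone` because it now mixes in content from the other two images, which is why the results drift toward each other.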

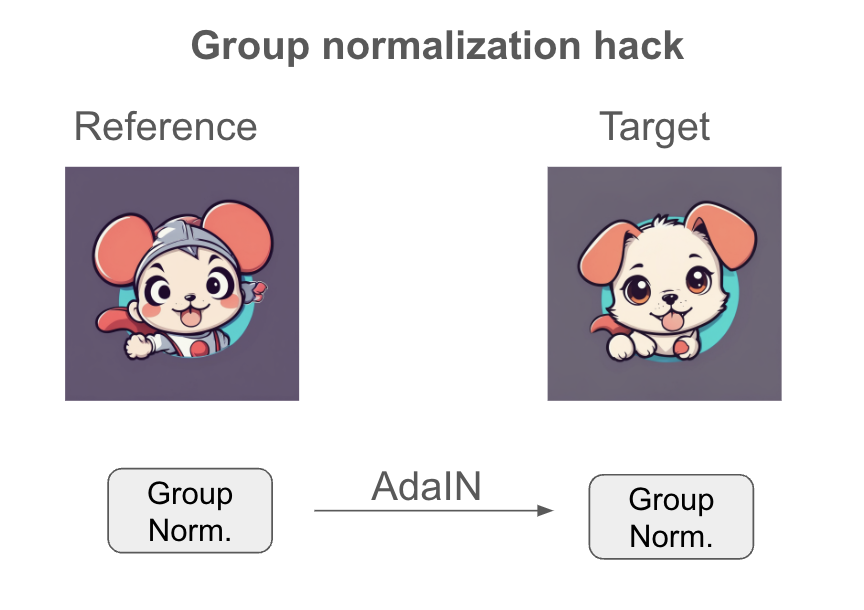

However, not all is lost. The reference ControlNet provides a similar function. Three variants are available:

| Reference method | Attention hack | Group normalization hack |
|---|---|---|
| Reference_only | Yes | No |
| Reference_adain | No | Yes |
| Reference_adain+attn | Yes | Yes |

The attention hack adds the reference image’s features to the keys and values of the self-attention process, so the target image can attend to the reference.

The group normalization hack injects the distribution of the reference image into the target images at the group normalization layers. It predates Style Aligned and uses the same AdaIN operation to inject style, but in a different layer.

As we will see later, the attention hack is an effective alternative to Style Aligned.

Consistent style in ComfyUI

The style_aligned_comfy custom node implements self-attention with shared queries and keys. It is faithful to the paper’s method. In addition, its share_norm option can perform A1111’s group normalization hack.

AUTOMATIC1111

There are 4 ways to generate consistent styles in AUTOMATIC1111. However, as I detailed above, none of these methods is what the paper called Style Aligned, including the Style Align option.

Extensions needed

You will need to install the ControlNet and Dynamic Prompts extensions.

1. Start AUTOMATIC1111 Web-UI normally.

2. Navigate to the Extensions page.

3. Click the Install from URL tab.

4. Enter the extension’s URL in the URL for extension’s git repository field.

ControlNet:

https://github.com/Mikubill/sd-webui-controlnet

Dynamic Prompts:

https://github.com/adieyal/sd-dynamic-prompts

5. Click the Install button.

6. Wait for the confirmation message that the installation is complete.

7. Restart AUTOMATIC1111.

Method 1: Style Aligned

There’s a StyleAlign option in the ControlNet extension. However, it is not the same as the Style Aligned algorithm described in the original research article. Instead, it is what the authors called fully shared attention, which they found inferior to Style Aligned.

Below are the settings to use the StyleAlign option.

Step 1: Enter txt2img settings

Select an SDXL checkpoint model: juggernautXL_v8.

- Prompt:

{mouse|dog|cat}, cartoon logo, cute, anime style, vivid, professional

Note: This prompt uses the Dynamic prompt syntax {mouse|dog|cat}. It will use one of the three terms in each image.

- Negative Prompt:

text, watermark

- Sampling method: DPM++ 2M Karras

- Sampling Steps: 20

- CFG scale: 7

- Batch size: 3 (Important)

- Seed: -1

- Size: 1024×1024

Step 2: Enter Dynamic Prompts settings

Scroll down to and expand the Dynamic Prompts section.

The Dynamic Prompts enabled option should be selected by default.

Select the Combinatorial generation option. This will exhaust all combinations of the dynamic prompt. In our example, this option generates 3 prompts:

mouse, cartoon logo, cute, anime style, vivid, professional

dog, cartoon logo, cute, anime style, vivid, professional

cat, cartoon logo, cute, anime style, vivid, professional
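If you want to preview what combinatorial generation will produce, the expansion can be approximated in a few lines of Python. This is a minimal sketch that only handles simple, non-nested `{a|b|c}` groups. The extension supports a richer syntax, and the function name `expand_combinatorial` is my own.

```python
import itertools
import re

def expand_combinatorial(template):
    """Expand every {a|b|c} group into all combinations, in order."""
    # With one capture group, re.split alternates: literal, group, literal, ...
    parts = re.split(r"\{([^{}]*)\}", template)
    choices = [
        part.split("|") if i % 2 else [part]
        for i, part in enumerate(parts)
    ]
    return ["".join(combo) for combo in itertools.product(*choices)]

prompts = expand_combinatorial(
    "{mouse|dog|cat}, cartoon logo, cute, anime style, vivid, professional"
)
for p in prompts:
    print(p)
```

The number of prompts is the product of the group sizes, so keep the batch size equal to that number (3 here) to get one image per prompt.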

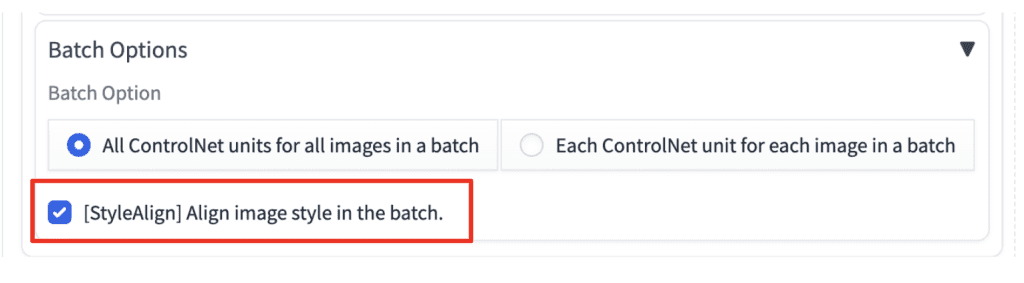

Step 3: Enter ControlNet settings

Scroll down to and expand the ControlNet section.

Select Batch Options > StyleAlign to enable fully shared attention.

You don’t need to enable ControlNet to use this option.

Step 4: Generate images

Click Generate. You should have 3 highly similar images like the ones below.

For comparison, the following images are generated with Style Align off.

StyleAlign has made the three images consistent in style. They have the same background, and the animals are the same color with similar facial expressions. However, I would say the style sharing is a bit too much. The dog and the cat are too similar to the mouse, losing their uniqueness.

StyleAlign of ControlNet is actually fully shared attention. The authors have also correctly pointed out that sharing attention fully generates overly simple images. They do not recommend this method.

Method 2: ControlNet Reference

The result of StyleAlign is a little underwhelming. But don’t worry, there’s already something much better in the extension: the Reference ControlNet.

You will need a reference image. We will use the image below.

Step 1: Enter the txt2img settings

Select an SDXL checkpoint model: juggernautXL_v8.

- Prompt:

{dog|cat}, cartoon logo, cute, anime style, vivid, professional

Note: We will use a reference image of a mouse, so we will only generate images of a dog and a cat.

- Negative Prompt:

text, watermark

- Sampling method: DPM++ 2M Karras

- Sampling Steps: 20

- CFG scale: 7

- Batch size: 2 (Important)

- Seed: -1

- Size: 1024×1024

Step 2: Enter Dynamic Prompts settings

Scroll down to and expand the Dynamic Prompts section.

Select the Combinatorial generation option.

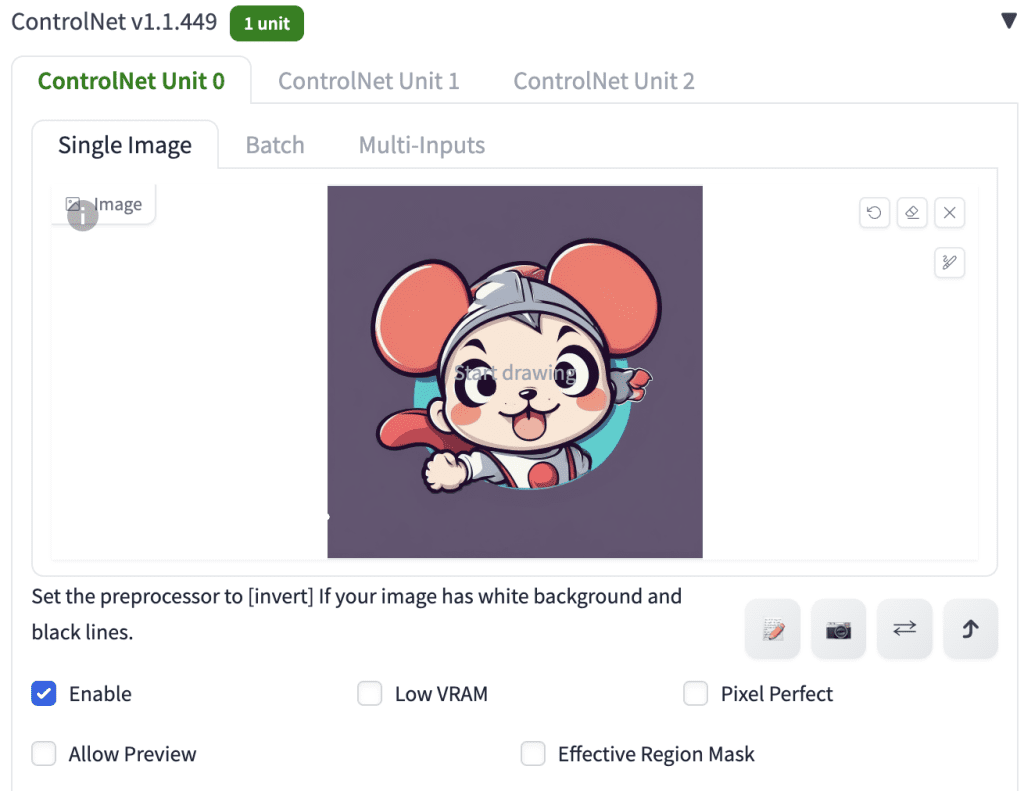

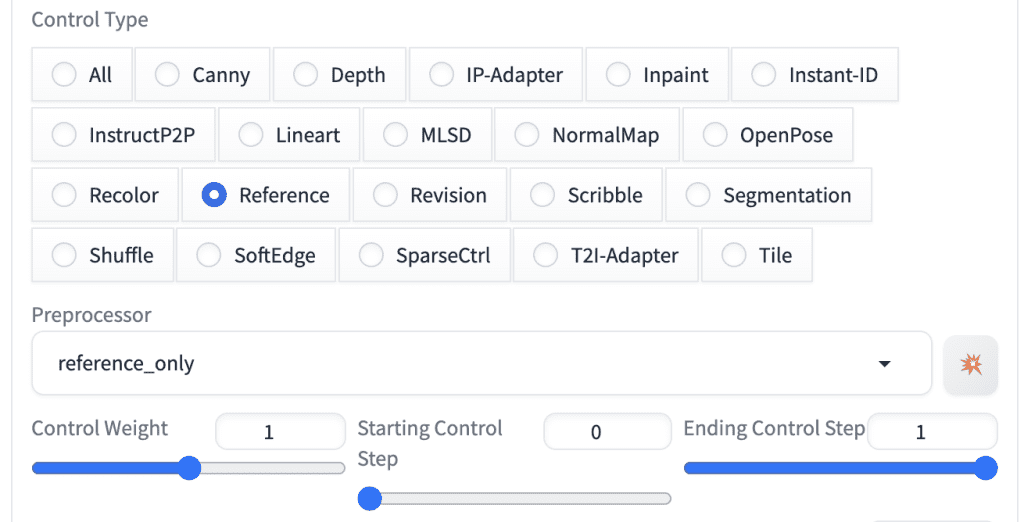

Step 3: Enter ControlNet settings

Scroll down to and expand the ControlNet section. Upload the reference image to the Single Image canvas of the ControlNet Unit 0.

- Enable: Yes

- Pixel Perfect: No

- Control Type: Reference

- Preprocessor: Reference_only (or reference_adain, reference_adain+attn)

- Control Weight: 1

- Starting Control Step: 0

- Ending Control Step: 1
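If you prefer scripting over the web interface, the same settings can be sent to AUTOMATIC1111's `/sdapi/v1/txt2img` endpoint when the Web-UI is started with the `--api` flag. The sketch below only builds the request body. The ControlNet field names (`module`, `model`, `image`, `weight`, `guidance_start`, `guidance_end`, `pixel_perfect`) are my assumption of what the extension's API expects and have changed between versions (older releases used `input_image`), so check them against your installed version. The helper name `build_reference_payload` is hypothetical.

```python
import base64
import json

def build_reference_payload(prompt, reference_png_bytes, module="reference_only"):
    """Build a txt2img request body that mirrors the settings above."""
    image_b64 = base64.b64encode(reference_png_bytes).decode("ascii")
    controlnet_unit = {
        "enabled": True,
        "module": module,        # or "reference_adain", "reference_adain+attn"
        "model": "None",         # Reference preprocessors need no model file
        "image": image_b64,      # assumed field name; older versions: "input_image"
        "weight": 1.0,           # Control Weight
        "guidance_start": 0.0,   # Starting Control Step
        "guidance_end": 1.0,     # Ending Control Step
        "pixel_perfect": False,
    }
    return {
        "prompt": prompt,
        "negative_prompt": "text, watermark",
        "sampler_name": "DPM++ 2M Karras",
        "steps": 20,
        "cfg_scale": 7,
        "seed": -1,
        "width": 1024,
        "height": 1024,
        "batch_size": 1,  # one request per prompt instead of Dynamic Prompts
        "alwayson_scripts": {"controlnet": {"args": [controlnet_unit]}},
    }

# Placeholder bytes; in practice read your reference PNG from disk.
payload = build_reference_payload(
    "dog, cartoon logo, cute, anime style, vivid, professional", b"placeholder"
)
body = json.dumps(payload)
```

You would then POST `body` to the endpoint once per prompt (dog, then cat). The response contains the generated images as base64 strings.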

Step 4: Generate images

Press Generate. Below is a comparison of the results.

Reference only:

Reference AdaIN:

Reference AdaIN + attn:

Recall that the three methods use the following settings.

| Reference method | Attention hack | Group normalization hack |
|---|---|---|
| Reference_only | Yes | No |
| Reference_adain | No | Yes |
| Reference_adain+attn | Yes | Yes |

The group normalization hack does not work well in generating a consistent style. The attention hack works pretty well.

I recommend using the Reference_only or Reference_adain+attn methods.

ComfyUI

We will use the Style Aligned custom node to generate images with consistent styles.

You will need to have ComfyUI Manager installed to follow the instructions below.

Step 1: Load the workflow

Download the workflow JSON file below and drop it onto the ComfyUI window.

Select Manager > Install Missing Custom Nodes. Install the nodes that are missing. Restart ComfyUI.

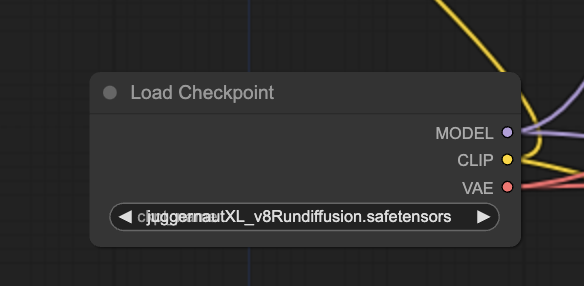

Step 2: Select checkpoint model

Download the Juggernaut XL v8 model. Put it in the folder models > checkpoints.

Select Refresh on the side menu. Select the model in the Load Checkpoint node.

Step 3: Generate images

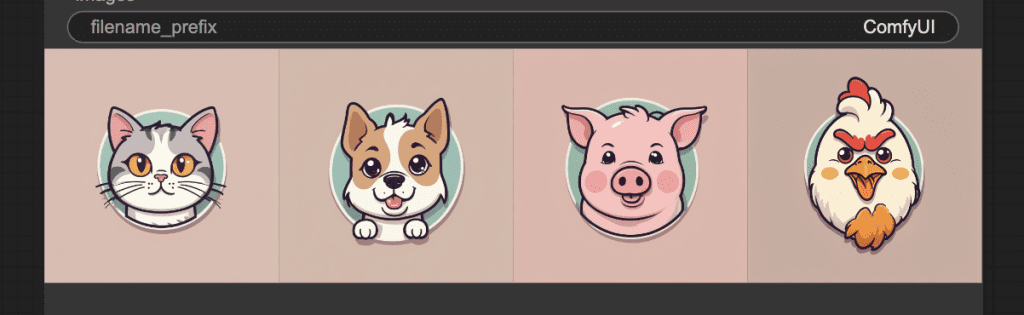

Click Queue Prompt.

You will see that the images generated with Style Aligned share a consistent style.

The original images without Style Aligned differ much more from each other.

Additional Settings

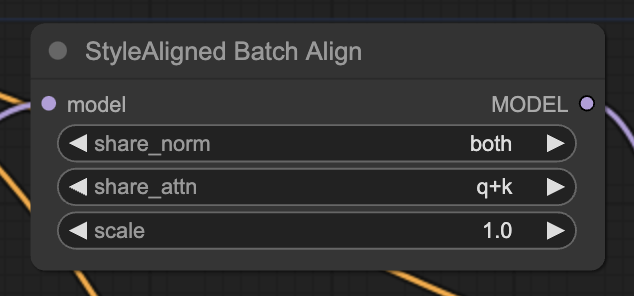

There are three settings in the StyleAligned Batch Align node that you can change.

The share_norm setting doesn’t do anything. You can keep it at both.

The share_attn setting has options q+k and q+k+v. They produce very similar results. You can keep it at q+k.

The scale setting controls the strength of the effect.

Changing the Prompt

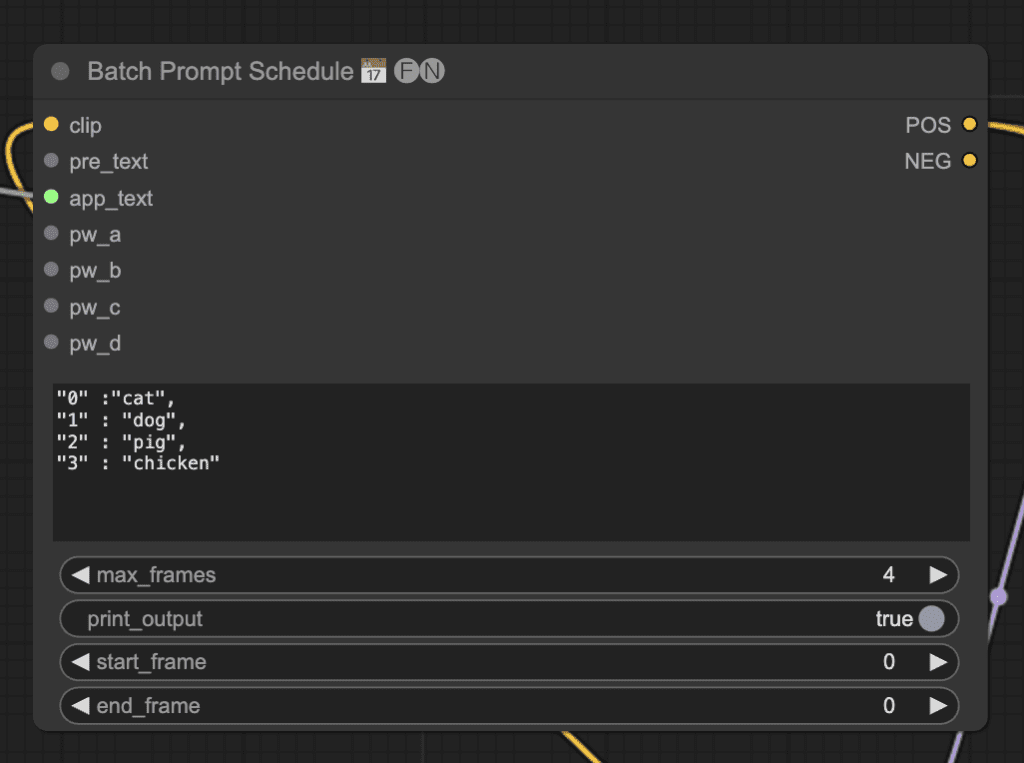

You can add to the prompt by changing the Batch Prompt Schedule. The example uses 4 different prompts. Make sure to change max_frames to 4 and batch size in Empty Latent Image node to 4.
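A small helper can keep the schedule text and the two counts in sync. This is a sketch under an assumption: that the Batch Prompt Schedule node accepts one `"index": "prompt"` entry per line, separated by commas, as in the downloaded workflow. Check the text in your node before pasting. The fourth subject (`rabbit`) is a hypothetical example, and prompts containing double quotes are not handled.

```python
def build_batch_schedule(prompts):
    """Return (schedule_text, frame_count) for a list of prompts."""
    lines = [f'"{i}": "{prompt}"' for i, prompt in enumerate(prompts)]
    return ",\n".join(lines), len(prompts)

style = "cartoon logo, cute, anime style, vivid, professional"
subjects = ["mouse", "dog", "cat", "rabbit"]  # "rabbit" is a made-up addition

schedule, count = build_batch_schedule([f"{s}, {style}" for s in subjects])
print(schedule)
print("Set max_frames and the Empty Latent Image batch size to", count)
```

Whatever number of prompts you use, max_frames and the batch size must both match it.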

Reference

[2312.02133] Style Aligned Image Generation via Shared Attention – The Style Aligned paper.

brianfitzgerald/style_aligned_comfy – Style Aligned custom node for ComfyUI.

1.1.420 Image-wise ControlNet and StyleAlign (Hertz et al.) · Mikubill/sd-webui-controlnet · Discussion #2295 – Discussion on A1111’s Style Aligned.

[Major Update] Reference-only Control · Mikubill/sd-webui-controlnet · Discussion #1236 – Reference-only ControlNet in A1111.
