I previously shared a workflow for creating text effect images with ControlNet. It works well but takes quite a bit of work. With recent advances in Stable Diffusion, you can now generate text effects directly with text-to-image!

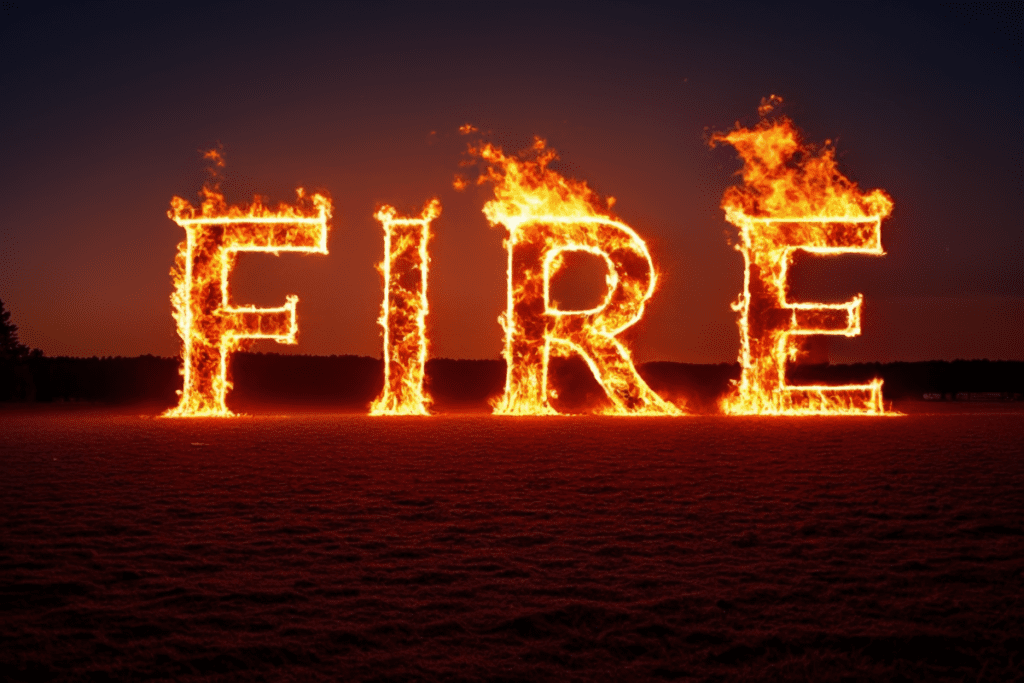

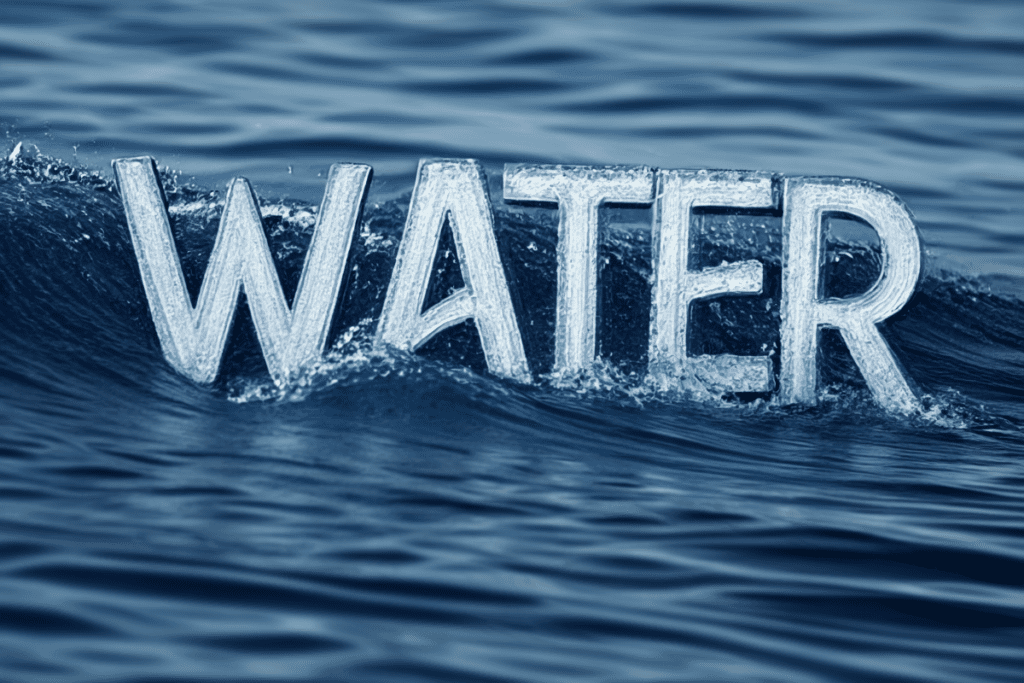

The images above are a few samples.

You will get a downloadable ComfyUI workflow with a step-by-step guide to use it.

You must be a member of this site to download the JSON workflow.

Software

Stable Diffusion GUI

We will use ComfyUI, a node-based Stable Diffusion GUI. You can use ComfyUI on Windows/Mac or Google Colab.

Check out Think Diffusion for a fully managed ComfyUI/A1111/Forge online service. They offer 20% extra credits to our readers (and a small commission goes to support this site if you sign up).

See the beginner’s guide for ComfyUI if you haven’t used it.

Step-by-step guide

This workflow takes advantage of the text generation capability of the Stable Cascade model.

Step 0: Load the ComfyUI workflow

Download the workflow JSON file below.

Drag and drop it to ComfyUI to load.

Go through the drill

Every time you try to run a new workflow, you may need to do some or all of the following steps.

- Install ComfyUI Manager

- Install missing nodes

- Update everything

Install ComfyUI Manager

Install ComfyUI Manager if you haven’t done so already. It provides an easy way to update ComfyUI and install missing nodes.

To install this custom node, go to the custom nodes folder in the PowerShell (Windows) or Terminal (Mac) App:

cd ComfyUI/custom_nodes

Install ComfyUI Manager by cloning the repository under the custom_nodes folder.

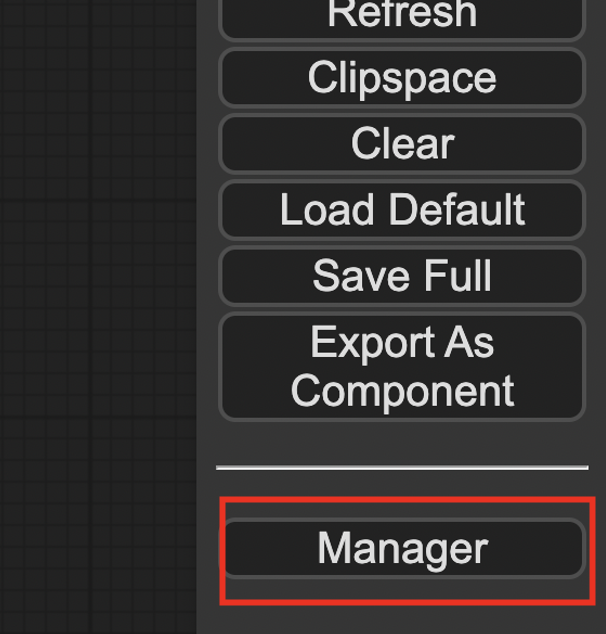

git clone https://github.com/ltdrdata/ComfyUI-Manager

Restart ComfyUI completely. You should see a new Manager button appearing on the menu.

If you don’t see the Manager button, check the terminal for error messages. One common issue is that Git is not installed. Installing it and repeating the steps should resolve the issue.
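If you are unsure what is blocking the install, a small script can check the two usual suspects before you dig through the terminal output. This is only a minimal sketch: the function name `manager_install_problems` is made up for illustration, and it assumes your ComfyUI folder has the standard `custom_nodes` layout.

```python
import shutil
from pathlib import Path


def manager_install_problems(comfyui_dir):
    """Return a list of problems that would block installing ComfyUI Manager.

    Hypothetical helper for illustration; it is not part of ComfyUI.
    """
    problems = []

    # `git clone` only works if the git executable is on PATH.
    if shutil.which("git") is None:
        problems.append("git is not installed or not on PATH")

    # The Manager must be cloned inside ComfyUI/custom_nodes.
    custom_nodes = Path(comfyui_dir) / "custom_nodes"
    if not custom_nodes.is_dir():
        problems.append(f"missing folder: {custom_nodes}")
    elif not (custom_nodes / "ComfyUI-Manager").is_dir():
        problems.append("ComfyUI-Manager has not been cloned yet")

    return problems


if __name__ == "__main__":
    # Assumes the script is run from the folder that contains ComfyUI.
    for problem in manager_install_problems("ComfyUI"):
        print("Problem:", problem)
```

An empty result means Git is available and the ComfyUI-Manager folder is in place, so any remaining error is likely something else in the terminal log.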

Install missing custom nodes

To install custom nodes that the workflow uses but you don’t have yet:

- Click Manager in the Menu.

- Click Install Missing Custom Nodes.

- Restart ComfyUI completely.

Update everything

You can use ComfyUI Manager to update custom nodes and ComfyUI itself.

- Click Manager in the Menu.

- Click Update All. It may take a while to finish.

- Restart ComfyUI and refresh the ComfyUI page.

Step 1: Download checkpoint model

Download the Stable Cascade models stage B and stage C. Put them in ComfyUI > models > checkpoints.
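If the workflow later complains about a missing checkpoint, it helps to confirm the files really landed in the right folder. Below is a minimal sketch of such a check. The two file names are assumptions (they may differ from what you downloaded), and `missing_checkpoints` is a hypothetical helper, not a ComfyUI function.

```python
from pathlib import Path

# Assumed file names; change them to match the files you actually downloaded.
EXPECTED_CHECKPOINTS = (
    "stable_cascade_stage_b.safetensors",
    "stable_cascade_stage_c.safetensors",
)


def missing_checkpoints(comfyui_dir, expected=EXPECTED_CHECKPOINTS):
    """Return the expected checkpoint files not found in models/checkpoints."""
    checkpoint_dir = Path(comfyui_dir) / "models" / "checkpoints"
    return [name for name in expected if not (checkpoint_dir / name).is_file()]


if __name__ == "__main__":
    # Assumes the script is run from the folder that contains ComfyUI.
    for name in missing_checkpoints("ComfyUI"):
        print("Missing checkpoint:", name)
```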

Step 2: Run the workflow

Click Queue Prompt to run the workflow. You should get an image like this.
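Queue Prompt sends the workflow from the browser to the local ComfyUI server, and you can do the same from a script. The sketch below rests on several assumptions: the server runs at the default address `http://127.0.0.1:8188`, you have exported the workflow in API format (the JSON you drag and drop into ComfyUI is in the UI format and may not be accepted as is), and `workflow_api.json` is a hypothetical file name. The helper names are made up for illustration.

```python
import json
import urllib.request

# Assumed default address of a locally running ComfyUI server.
DEFAULT_SERVER = "http://127.0.0.1:8188"


def build_queue_request(workflow, server=DEFAULT_SERVER):
    """Build the POST request that asks ComfyUI to queue a workflow.

    `workflow` is a dict in ComfyUI's API format (node id -> node description).
    """
    payload = json.dumps({"prompt": workflow}).encode("utf-8")
    return urllib.request.Request(
        f"{server}/prompt",
        data=payload,
        headers={"Content-Type": "application/json"},
    )


def queue_workflow(path, server=DEFAULT_SERVER):
    """Load an API-format workflow file and send it to the server."""
    with open(path, encoding="utf-8") as f:
        workflow = json.load(f)
    with urllib.request.urlopen(build_queue_request(workflow, server)) as response:
        return json.load(response)
```

With the server running, `queue_workflow("workflow_api.json")` should queue one generation, the same as clicking the button once.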

Customization

The only thing you need to change to get a different text is the prompt. Below are a few more examples.

The word “fire” made of fire and lightning, sunset

The word “bread” made of bread, top view

The word “water” made of waves
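The example prompts above share one pattern: the word in quotes, what it is made of, and optional extras such as lighting or camera angle. If you want to try many words, a tiny helper can fill in that pattern. This is just an illustrative sketch; `text_effect_prompt` is a made-up name, and the pattern is inferred from the three examples rather than being a rule of the model.

```python
def text_effect_prompt(word, material, *extras):
    """Build a prompt following the pattern of the examples above."""
    prompt = f"The word “{word}” made of {material}"
    # Extras (e.g. "sunset", "top view") are appended as comma-separated tags.
    return ", ".join([prompt, *extras])


if __name__ == "__main__":
    print(text_effect_prompt("fire", "fire and lightning", "sunset"))
    print(text_effect_prompt("bread", "bread", "top view"))
    print(text_effect_prompt("water", "waves"))
```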

Change the width and height in the Stable Cascade Empty Latent Image node to change the image size.
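If you drive ComfyUI from a script, you can make the same size change in the workflow JSON instead of in the node. The sketch below assumes an API-format workflow (a dict of node id to node description), and it assumes the node's internal class name is `StableCascade_EmptyLatentImage` with `width` and `height` inputs. Check your own exported JSON to confirm, since `set_image_size` is a hypothetical helper and not part of ComfyUI.

```python
import copy

# Assumed internal name of the Stable Cascade Empty Latent Image node.
LATENT_NODE_CLASS = "StableCascade_EmptyLatentImage"


def set_image_size(workflow, width, height, class_type=LATENT_NODE_CLASS):
    """Return a copy of an API-format workflow with a new image size."""
    updated = copy.deepcopy(workflow)  # leave the caller's workflow untouched
    changed = 0
    for node in updated.values():
        if isinstance(node, dict) and node.get("class_type") == class_type:
            node["inputs"]["width"] = width
            node["inputs"]["height"] = height
            changed += 1
    if changed == 0:
        raise ValueError(f"no node with class_type {class_type!r} found")
    return updated
```

Returning a copy keeps the original workflow intact, so you can generate several sizes from the same starting file.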

Can you please advise on the Colab setup for this? I’m switching between local and Colab and am new to the Colab setup routine. I have this workflow working locally, but I lack experience setting up ComfyUI on Colab and need a set of instructions to get it working there. Thank you.

My Colab notebook should work. Let me know if you experience any issues.

Hello. I really love these text effects. I am a total novice when it comes to AI and have a few questions. Is Stable Diffusion a desktop app like Photoshop? Is ControlNet a plug-in for Stable Diffusion? Do I have to buy these to get the results shown in this article?

Thanks for sharing.

Yes, SD is a desktop app. There are options to run it online too.

Yes, ControlNet is an add-on for SD. You don’t need to buy the software or add-ons. They are all free.

This is not exact. To generate art with SD, you normally use a user interface in the browser. But with Automatic1111, ComfyUI and others, you start a local server. This is important to keep in mind: in the browser you control everything, change the settings and so on. After you press the “Generate” button (or “Queue Prompt” in ComfyUI), the browser sends the settings to the server, and the server runs Stable Diffusion (that’s what you see in the console window).

The server saves the generated images in one of its subfolders, or you can download them, which works the same way as downloading an image from the internet.

If you want, you could expose such a server to the internet, but every user would then use your hardware (GPU) to do the calculations, and the images would be stored on your hard drive.

Have you seen what the Sora video generation AI is capable of? Where is Stable Video Diffusion? 😁

Yes, very impressive 🙂 Like an upgraded version of SVD.

Why don’t the sample PNGs above have the ComfyUI workflow embedded in them?

Modern CDNs are so smart that they sometimes strip the metadata to save a few bytes.

I never saw that with ComfyUI, only with Fooocus and with A1111 if you turn it off.

Does Stable Cascade work with 8GB VRAM? I am able to follow 90% of the workflows from the website or from OpenArt, but I haven’t tried Stable Cascade yet.

This workflow goes up to 12GB on my machine.

@Pradeep Kumar

Q: Does Stable Cascade work with 8GB VRAM?

A: I have an RTX 2070 Super with 8GB VRAM, and to my surprise it generated images. I didn’t think it would.

In my case the answer is YES!

Are there any compatible ControlNets for this specific model? Is it possible to use AnimateDiff to generate animations?

Thanks a lot !

Not that I know of currently.

Thank you for sharing. I would like to know if there is any way to modify the font or create Chinese characters.

You can try specifying the font in the prompt. If that doesn’t work, the following method with ControlNet gives you more control and will work for Chinese characters.

https://stable-diffusion-art.com/text-effect/