Stability AI has released a preview of a new model called SDXL Beta (Stable Diffusion XL Beta). They didn’t tell us much about the model, but it is available for anyone who wants to test it.

What’s new about this Stable Diffusion SDXL model? What are its strengths and weaknesses? Let’s find out.

Table of Contents

What is SDXL model

The SDXL model is a new model currently in training. It is not a finished model yet. In fact, it may not even be called the SDXL model when it is released.

All we know is it is a larger model with more parameters and some undisclosed improvements. It is a v2, not a v3 model (whatever that means).

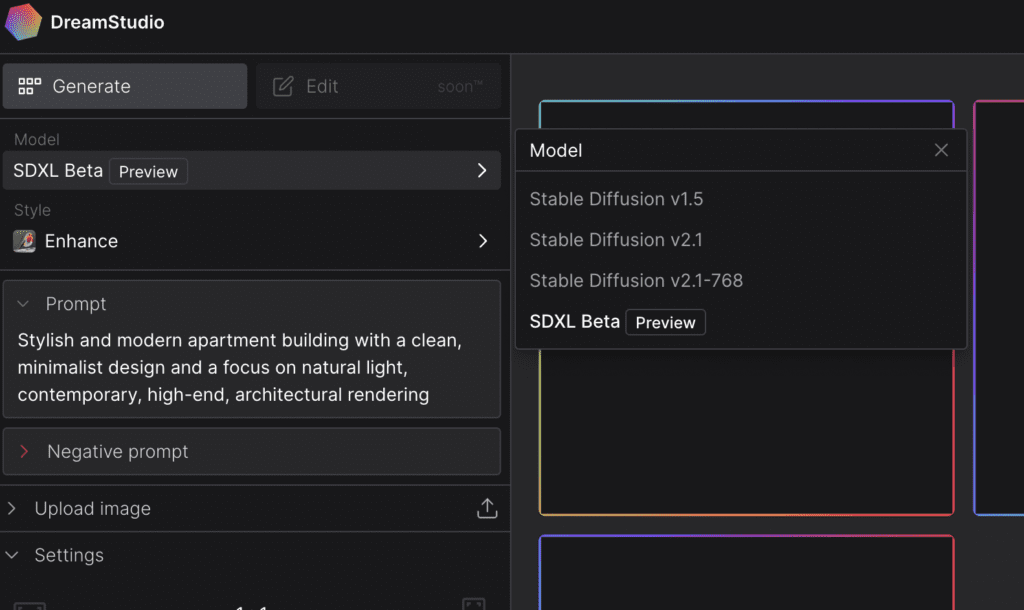

How to use SDXL model

The SDXL model is currently available at DreamStudio, the official image generator of Stability AI. To use the SDXL model, select SDXL Beta in the model menu.

You will need to sign up to use the model. You will get some free credits after signing up.

Improvements

I will highlight some improvements in the SDXL model I have seen so far.

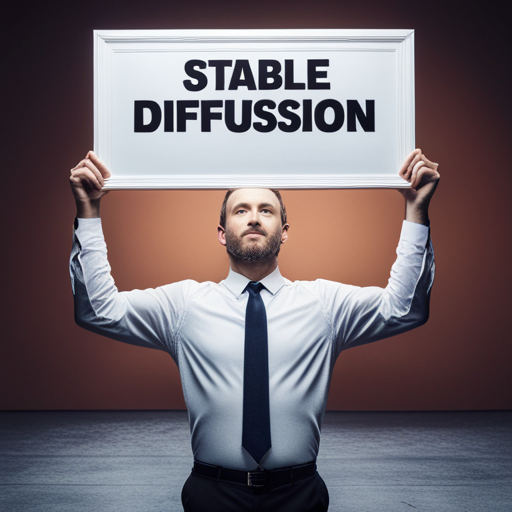

Legible text

Perhaps the most striking capability is the ability to generate legible text. This is not possible in the v1 or v2.1 models.

The text generated by SDXL is not always accurate (as you can see in the Stable Diffusion Text below). But it is much better than v2.1, not to mention v1 models.

Photo of a woman sitting in a restaurant holding a menu that says “Menu”

Photo of a man holding a sign that says “Stable Diffusion”

a young female holding a sign that says “Stable Diffusion”, highlights in hair, sitting outside restaurant, brown eyes, wearing a dress, side light

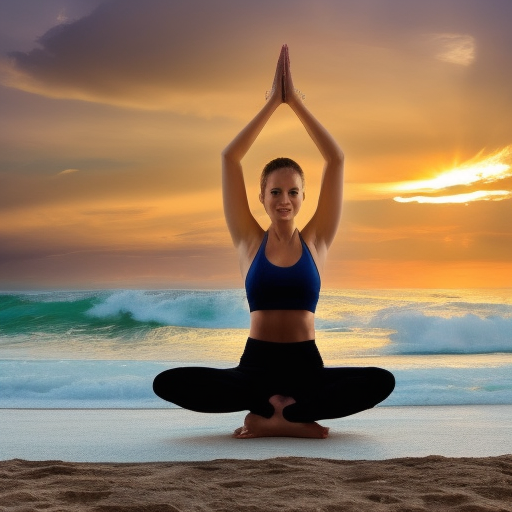

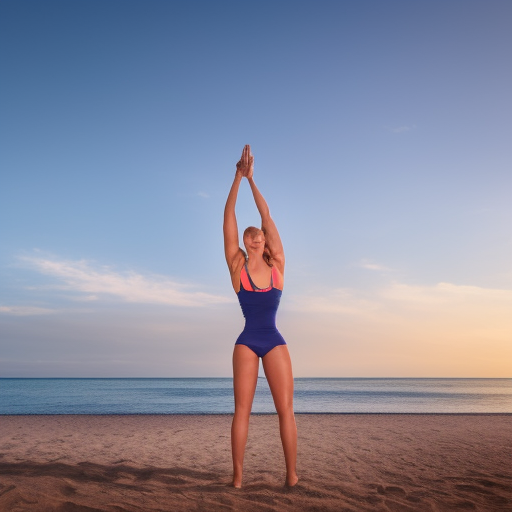

Better human anatomy

Stable Diffusion long has problems in generating correct human anatomy. It is common to see extra or missing limbs. You will usually use inpainting to correct them. Or, more recently, you can copy a pose from a reference image using ControlNet‘s Open Pose function.

I am pleased to see the SDXL Beta model has improvements in this area. Let’s look at an example.

The prompt is:

Photo of a woman in yoga outfit, triangle pose, beach in evening, rim lighting

Here are the SDXL Beta images.

Compare with the v1.5 images below.

It’s not perfect, but human poses are much better in SDXL!

More aesthetic images

The images generated can be quite different. See the following images with the same prompt.

Photo-style portraits are very good in SDXL Beta. I would say it is better than v1.5.

photo shot of a woman

More accurate images

The ability to understand the prompt improves over v1 models.

In the v1.5 model, the keyword duotone always generates black-and-white images. SDXL Beta generates duotone images with a variety of colors. This is an improvement.

duotone portrait of a woman

Since SDXL Beta is a v2 model, it equips with a larger text model. You can expect it to understand your prompt better than v1 models. Indeed that’s what we see.

Let’s look at the images generated by the following prompt with two subjects.

big robot friend sitting next to a human, ghost in the shell style, anime wallpaper

The v1.5 model consistently ignores there are two subjects, the robot and the human, in the prompt. But the SDXL Beta model is able to understand the prompt and generates a more correct image. (I hope the robot can be bigger, but that’s a step forward.)

Likewise, photo-style images are more accurate. See the following prompt and images.

a young man, highlights in hair, brown eyes, in white shirt and blue jean on a beach with a volcano in background

Artistic styles

I checked a few artistic styles. There are some subtle changes, but I can neither say they are better nor worse. It’s just different.

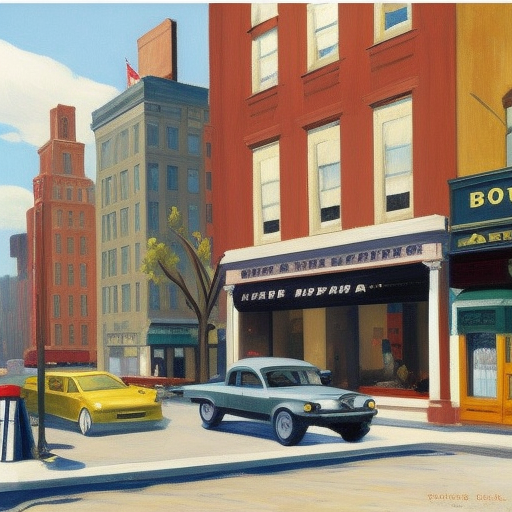

Both v1.5 and SDXL Beta generates Edward Hopper‘s style. Although they are consistently different.

New York city by Edward Hopper

v1.5 generates Leonid Afremov‘s style accurately. The unmistakable colorful board brushstrokes are missing in SDXL Beta. It generates an illustration style and, interestingly, still retains the distinct reflection on the ground.

New York city by Leonid Afremov

Both v1.5 and SDXL Beta produce something close to William-Adolphe Bouguereau‘s style. SDXL Beta’s images are closer to typical academic paintings which Bouguereau produces. In general, portraits from SDXL Beta show more details on faces.

Portrait of beautiful woman by William-Adolphe Bouguereau

Style Shift

Perhaps it’s a glitch in this preview model. Sometimes, the style can abruptly change with the addition of innocent keywords.

For example, I started with this prompt that generates a photo style.

a young man, highlights in hair, brown eyes, in white shirt and blue jean on a beach with a volcano in background

Now I want to add a yellow scarf.

a young man, highlights in hair, brown eyes, wearing a yellow scarf, in white shirt and blue jean on a beach with a volcano in background

Suddenly, the images change to anime style. This happens to a few keywords. It is almost like the model has blended with some cartoon styles and is eager to switch to that.

Hope this issue will be resolved in the release version.

Impressions

Here are what I think about the SDXL Beta model:

- Stable Diffusion finally generates correct text!

- More aesthetic than the v2.1 model and (to a lesser extent) the v1.5 model.

- The images are more accurate as described in the prompts.

- Human anatomy is getting better.

- Do not need negative prompts as much as v2.1.

- Particularly strong in portraits.

- Some peculiar glitches in the model to be fixed before release.

Finally, a few more images from the SDXL beta model.

Any chance of SDXL text rendering capability being merged with SD 1.5?

SD 2 is an attempt to improve the text rendering of SD1 by changing the text encoder. The model needs to be retrained because of that, and it took them a long them to get to SDXL.

Its high version model seems to be breaking away from “bad taste” and bound by more and more “rules”. This is not to the liking of most people, 1.5 will be the most popular

As weird and telling as it may be, it will only find broad acceptance if you can create waifu with it. SD2 is apparently very limited in this regard.

The community hasn’t found good reasons to. Hopefully, SDXL will change that with a better out-of-the-box performance.

As long as it has baked in censorship like 2.0 did, no, no it won’t.

Well written article with some excellent examples. Thanks for getting us the latest update.

Will this model be customizable with Dreambooth?

It should if they release it.

re: “More aesthetic”. That doesn’t make sense. “Aesthetic” doesn’t mean “pleasing to the eye”. The correct wording would be something like “More aesthetically pleasing” or “has better aesthetically qualities”.

Thank you for pointing this out. Haven’t thought about usage of this word much until you said it.

Will be corrected.

not true, usually something aesthetic is something good to see so it’s correct

Although you’re strictly correct, this usage is now so common in an AI art context that there may be no use fighting it any more.

After coming across the comment, I searched for the definition of the word aesthetic in the Merriam-Webster dictionary. It turns out that one of its meanings is “pleasing in appearance.” Therefore, I did not make any alterations. I appreciate everyone for providing me with complimentary English lessons!

“has better aesthetically qualities” maybe should be “has better aesthetics”?