Stable Diffusion 2.1 was released on Dec 7, 2022.

Those who have used 2.0 have been scratching their heads over how to make the most of it. While we saw some excellent images here and there, most of us went back to v1.5 for our everyday work.

See the step-by-step guide for installing AUTOMATIC1111 on Windows.

The difficulty was caused in part by (1) a new language model trained from scratch and (2) a training dataset that was heavily censored with an NSFW filter.

The second part would have been fine, but the filter was overly aggressive and removed a substantial amount of good-quality data. 2.1 promised to bring that data back.

This tutorial covers installing and using the 2.1 models in the AUTOMATIC1111 GUI so you can judge for yourself.


2.1 model variants

There are two text-to-image models available:

- 2.1 base model: Default image size is 512×512 pixels

- 2.1 model: Default image size is 768×768 pixels

The 768 model is capable of generating larger images. You can set the image size to 768×768 without worrying about the infamous two heads issue.

This is especially useful for generating larger scenes with small characters. Faces come out a bit clearer than with the 512 model, which improves the chances that downstream upscaling and face restoration succeed.

The downside of the 768 model is that it takes longer to generate images. The larger images may also limit the batch size, depending on how much VRAM your GPU has.
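To get a rough feel for the extra cost, the arithmetic below compares the pixel counts of the two default sizes. It assumes that the work per image grows roughly with the number of pixels, which is a simplification: actual generation time and VRAM use depend on your sampler, optimizations, and GPU.

```python
# Rough comparison of the two default image sizes.
# Assumption: work per image grows roughly with pixel count (a simplification).

def pixel_count(width: int, height: int) -> int:
    """Number of pixels in an image of the given size."""
    return width * height

base = pixel_count(512, 512)   # default size of the 2.1 base model
large = pixel_count(768, 768)  # default size of the 2.1 768 model
ratio = large / base

print(f"512x512: {base} pixels")
print(f"768x768: {large} pixels")
print(f"ratio: {ratio:.2f}x")
```

A 768×768 image has 2.25 times as many pixels as a 512×512 one, which is in line with the slower generation and smaller batch sizes described above.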

Install base software

We will go through how to use Stable Diffusion 2.1 in the AUTOMATIC1111 GUI.

This GUI can be installed easily on Windows systems; otherwise, follow the installation instructions for your environment. Ideally, you should have a dedicated GPU with at least 6 GB of VRAM.

If you already have this GUI, make sure it is up-to-date by running the following command in the terminal under its installation location (stable-diffusion-webui folder).

git pull

Download Stable Diffusion 2.1 model

2.1 base model (512-base)

1. Download the model file (v2-1_512-ema-pruned.ckpt)

https://huggingface.co/stabilityai/stable-diffusion-2-1-base/resolve/main/v2-1_512-ema-pruned.ckpt

2. Download the config file, and rename it to v2-1_512-ema-pruned.yaml

https://raw.githubusercontent.com/Stability-AI/stablediffusion/main/configs/stable-diffusion/v2-inference.yaml

Put both of them in the model directory:

stable-diffusion-webui/models/Stable-diffusion

2.1 model (768)

1. Download the model file (v2-1_768-ema-pruned.ckpt)

https://huggingface.co/stabilityai/stable-diffusion-2-1/resolve/main/v2-1_768-ema-pruned.ckpt

2. Download the config file, and rename it to v2-1_768-ema-pruned.yaml (note the -v in the source file name; the 768 model uses a different config from the base model)

https://raw.githubusercontent.com/Stability-AI/stablediffusion/main/configs/stable-diffusion/v2-inference-v.yaml

Put both of them in the model directory:

stable-diffusion-webui/models/Stable-diffusion

How to use 2.1 model

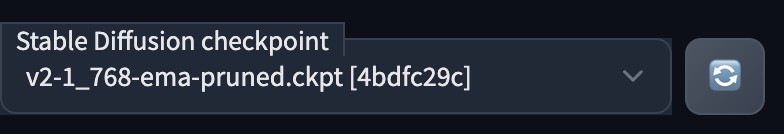

To use the 768 version of the Stable Diffusion 2.1 model, select v2-1_768-ema-pruned.ckpt in the Stable Diffusion checkpoint dropdown menu on the top left.

The model is designed to generate 768×768 images. So, set the image width and/or height to 768 for the best result.

To use the base model, select v2-1_512-ema-pruned.ckpt instead.
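Before selecting a checkpoint, it can help to confirm that each 2.1 model file has a config file with the matching name next to it. The sketch below is one way to check this; the helper names are made up for illustration, and the model directory path assumes you run it from the folder that contains stable-diffusion-webui.

```python
from pathlib import Path

def expected_config_path(checkpoint: Path) -> Path:
    """Config file expected next to a checkpoint: same name, .yaml extension."""
    return checkpoint.with_suffix(".yaml")

def missing_configs(model_dir: Path) -> list[Path]:
    """Return the config paths that are missing for v2-1 checkpoints in model_dir."""
    missing = []
    for ckpt in sorted(model_dir.glob("v2-1_*.ckpt")):
        config = expected_config_path(ckpt)
        if not config.is_file():
            missing.append(config)
    return missing

if __name__ == "__main__":
    # Assumed location; adjust to where your GUI is installed.
    model_dir = Path("stable-diffusion-webui/models/Stable-diffusion")
    for path in missing_configs(model_dir):
        print(f"Missing config: {path}")
```

If nothing is printed, every v2-1 checkpoint found in the folder has a same-named .yaml file beside it. The script only checks that the files exist, not that their contents are correct.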

Troubleshooting

Here are some things to try if your installation doesn’t work.

- See if your AUTOMATIC1111 GUI is outdated. In the terminal, run the command git pull under the stable-diffusion-webui directory and restart the GUI.

- Check if the YAML file is downloaded correctly. It should be a plain text file without HTML tags.

- Check if the YAML file is correctly renamed as described in the previous section.

- If 2.0 or 2.1 is generating black images, enable full precision with the startup argument --no-half, or use the --xformers optimization.
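The YAML check above can be automated with a small heuristic. The sketch below only looks for a few common HTML markers, so an "ok" verdict means no HTML was spotted, not that the config is valid. The function names and the marker list are illustrative assumptions.

```python
from pathlib import Path

# A few strings that typically appear when a web page was saved instead of the raw file.
HTML_MARKERS = ("<!doctype html", "<html", "<head", "<body")

def looks_like_html(text: str) -> bool:
    """Heuristic: True if the text appears to be an HTML page rather than YAML."""
    lowered = text.lower()
    return any(marker in lowered for marker in HTML_MARKERS)

def check_config_file(path: Path) -> str:
    """Return 'missing', 'html', or 'ok' for a downloaded config file."""
    if not path.is_file():
        return "missing"
    text = path.read_text(encoding="utf-8", errors="replace")
    if looks_like_html(text):
        return "html"
    return "ok"

if __name__ == "__main__":
    # Assumed location; adjust to where your GUI is installed.
    config = Path("stable-diffusion-webui/models/Stable-diffusion/v2-1_768-ema-pruned.yaml")
    print(f"{config.name}: {check_config_file(config)}")
```

If the verdict is "html", download the config file again and make sure you save the raw text rather than the web page around it.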

Tips for using 2.1

2.1 is an improvement over 2.0. The images look better and require less effort in engineering the prompt.

So, I am deleting my 2.0 models.

Here are some tips for using the 2.1 model.

Tip 1: Write more

Similar to 2.0, the prompt needs to be very specific and detailed to get the image you want. Unlike v1 models, simple prompts usually won’t go well with 2.1.

Tip 2: Use negative prompt

Many have already found that a negative prompt is important for v2 models. Keep a boilerplate negative prompt for portraits where many things can go wrong. In fact, Stability uses the following negative prompt in the demo images.

cropped, lowres, poorly drawn face, out of frame, poorly drawn hands, blurry, bad art, blurred, text, watermark, disfigured, deformed, closed eyes

I like the following negative prompt for the v2.1 model.

ugly, tiling, poorly drawn hands, poorly drawn feet, poorly drawn face, out of frame, extra limbs, disfigured, deformed, body out of frame, bad anatomy, watermark, signature, cut off, low contrast, underexposed, overexposed, bad art, beginner, amateur, distorted face

See how it is constructed and the importance of a negative prompt for the v2 models.
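If you keep more than one boilerplate negative prompt, a small helper can merge them without repeating terms. The sketch below combines the two prompts quoted above; the function name is made up for illustration, and the merged string is simply pasted into the negative prompt box.

```python
# Negative prompts quoted in this article.
STABILITY_NEGATIVE = (
    "cropped, lowres, poorly drawn face, out of frame, poorly drawn hands, "
    "blurry, bad art, blurred, text, watermark, disfigured, deformed, closed eyes"
)
AUTHOR_NEGATIVE = (
    "ugly, tiling, poorly drawn hands, poorly drawn feet, poorly drawn face, "
    "out of frame, extra limbs, disfigured, deformed, body out of frame, "
    "bad anatomy, watermark, signature, cut off, low contrast, underexposed, "
    "overexposed, bad art, beginner, amateur, distorted face"
)

def merge_negative_prompts(*prompts: str) -> str:
    """Join comma-separated prompts, dropping repeated terms and keeping first-seen order."""
    seen = set()
    merged = []
    for prompt in prompts:
        for term in prompt.split(","):
            term = term.strip()
            key = term.lower()
            if term and key not in seen:
                seen.add(key)
                merged.append(term)
    return ", ".join(merged)

if __name__ == "__main__":
    print(merge_negative_prompts(STABILITY_NEGATIVE, AUTHOR_NEGATIVE))
```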

Tip 3: Use the correct image size

Finally, set the correct image size. Set at least one side to be 512 px for the 512-base model and 768 px for the 768 model.
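The size rule can be written down as a quick check. This is a minimal sketch of the tip as stated here (at least one side equal to the model's default size); the lookup table and function name are illustrative and not part of the GUI.

```python
# Default side length for each 2.1 checkpoint, as described in this article.
NATIVE_SIDE = {
    "v2-1_512-ema-pruned.ckpt": 512,
    "v2-1_768-ema-pruned.ckpt": 768,
}

def size_matches_model(checkpoint: str, width: int, height: int) -> bool:
    """True if at least one side equals the model's default side length."""
    native = NATIVE_SIDE[checkpoint]
    return width == native or height == native

if __name__ == "__main__":
    for ckpt, w, h in [
        ("v2-1_768-ema-pruned.ckpt", 768, 512),
        ("v2-1_768-ema-pruned.ckpt", 512, 512),
        ("v2-1_512-ema-pruned.ckpt", 512, 768),
    ]:
        verdict = "ok" if size_matches_model(ckpt, w, h) else "check the size"
        print(f"{ckpt} at {w}x{h}: {verdict}")
```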

Have fun with 2.1!

how come the config file doesn’t go in the actual config folder?

welcome to A1111

2.1 sucks. More processing time for the same results/time spent per image for what I typically get using 1.5 and upscale.

Thanks for these crystal clear explanations. It worked on the first try. I needed 768 px for better results with deforum. It made my day!

I’m sure you got this sorted out already, but for anyone else stumbling upon this: it looks like the copy-paste URL for the 768 model config file is incorrect, and using that config will give precisely the results Seth describes.

Using the “Download config file” link instead fixed everything for me.

Not 100% sure you even need a separate config file for 2.1 anymore with the latest webui.

If you’re using the xformers optimization, be aware that your images will never be duplicable, even if you keep your settings, prompt, seed etc. identical. There is a degree of prompt bleed that happens for some reason.

Someone may have mentioned this… but on Apple M1 (laptop), I needed to run a flag for 2.1 to work in A1111: ./webui.sh --no-half

A tensor with all NaNs was produced in Unet. This could be either because there’s not enough precision to represent the picture, or because your video card does not support half type.

same here, this is happening with all sd 2.1 768 models even on high end RTX as 3080 ti

Thank you for a great article! I don’t get one thing… It doesn’t matter if I have v1.5 or 2.1 installed if I run other models (AresMix for example). It’s not like I have SD 2.1 running somewhere in the background and I get different results from AresMix…

Hi, Ares Mix is a model itself so it’s good to go on its own. It doesn’t matter whether you have 1.5 or 2.1 installed.

Thanks for the great tutorial. I found that I had to select a 2.1 checkpoint, then restart the webui. Same to go back to 1.5 models.

This should not be necessary. You can look at the command prompt to see what the error message is.

Thanks for these instructions. When you say to download the config file, the file opens in a browser tab. What exactly do I download? Do I save in a text editor and then rename to v2-1_768-ema-pruned.yaml?

Hi yes, use “save page as…” and name it accordingly.

Andrew;

Have an M2 38 core and would love to have SD USE it!

Do you know of where an Apple Silicon Enabled checkpoint file might be, or is what we’ve installed enabled? Render times tell me it’s not being used.

Thank you very much!

David

I believe A1111’s mac setup is using GPU cores. You can check by looking at activity monitor. It’s slow because it is not using cuda library.

Checkpoints are agnostic to cpu or gpu.

Apple has its own optimization of stable diffusion which is the fastest. I don’t think it comes with a GUI.

Ya blew a lot of money on the wrong type of computer for this stuff, dude. lol

Hello, despite following the instructions, SD 2.1 is not producing even semi-coherent results. At best, I’m getting grainy, off-color blobs which are shaped *sort of* like what I want. Contrast with SD 1.5, which runs perfectly.

I’ve got the yaml file and it looks correct (no html tags), I’ve set --xformers in the command arguments, and the resolution is 768×768.

Mmm, you can try updating automatic1111 (do a git pull), increasing the number of steps to 30, writing longer prompts, and switching the sampler.

Hey Andrew!

Thanks for your guide!!

I’m trying to use Jaks Creepy Critters, which requires 2.1. As you said above, the native AUTOMATIC1111 uses SD ver 1.4-1.5 and thus errors out when trying to load Jaks Creepy Critters. I tried checkpoint merging both 2.1 (512) and Jaks but still it errors out. Any idea what I might be doing wrong?

Hi Andrew, I tried this version

https://huggingface.co/plasmo/food-crit

and it loaded fine. It’s a v1 model which you can just download and use.

I see there are several variants. Which one do you have problem with?

I will try that and let you know the conclusion! Thanks for the quick reply!

Sorry to be a bother!

Do I just need to download the ckpt and place it in the Models/Stable-Diffusion directory, or download the entire directory from the Hugging Face link you provided?

Just the ckpt file. Make sure it is about 2gb after you download.

Thank you, got it. I appreciate your time!

Hi Andrew, I’m a noob and using your wonderful guide to install this for the first time. I’m curious about the “config” files you instructed us to download – alongside the model files.

In other tutorials I’ve seen related to installing the “1.5” model, there was never any mention or instruction about config files.

Is this some new innovation related to the 2.1 model specifically? What is the purpose of these “config” files?

Hello, you don’t need a config file for 1.4 or 1.5 models in AUTOMATIC1111. The GUI uses a default one. The config files are used to deal with different model architectures. The reason v1 models don’t need a config file is mostly historical: at that time there were only one or two architectures, so the GUI dealt with config files behind the scenes. But now we have v2 and beyond. Having the config file along with the model is a better way going forward.

Thanks