Stable Diffusion has a hand problem. It is pretty common to see deformed hands or missing/extra fingers. In this article, we will go through a few ways to fix hands.

We will cover fixing hands with

- Basic Inpainting

- DW Pose ControlNet

- Hand Refiner with Mesh Graphormer

Software

We will use AUTOMATIC1111, a popular and free Stable Diffusion AI image generator. You can use this GUI on Windows, Mac, or Google Colab.

If you are new to Stable Diffusion, check out the Quick Start Guide to get started.

You will need the ControlNet extension to follow this tutorial.

Basic Inpainting

This method uses the basic inpainting function. You can use Stable Diffusion 1.5 or XL with this method.

Step 1: Generate an image

On the txt2img page, generate an image. I will use Realistic Vision v5 (an SD 1.5 model).

Prompt:

a cute woman showing two hands, christmas, palm open, town, decoration

- Sampling method: DPM++ 2M Karras

- Sampling Steps: 20

- CFG scale: 7

- Seed: -1

- Size: 512×768 (Adjust the image size accordingly for SDXL)
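If you prefer scripting to clicking, the same settings can be written as a request to the AUTOMATIC1111 web API. This is a minimal sketch, not the article's own method: it assumes the web UI was started with the `--api` flag and listens on the default local address, and the field names follow the `/sdapi/v1/txt2img` endpoint as I understand it. The helper names `build_txt2img_payload` and `generate` are hypothetical. Newer versions of the web UI may expect the sampler and the scheduler to be given separately.

```python
import json
import urllib.request

# Assumption: the web UI runs locally and was launched with --api.
API_URL = "http://127.0.0.1:7860/sdapi/v1/txt2img"


def build_txt2img_payload() -> dict:
    """Mirror the txt2img settings from this step as an API payload."""
    return {
        "prompt": "a cute woman showing two hands, christmas, palm open, town, decoration",
        "sampler_name": "DPM++ 2M Karras",
        "steps": 20,
        "cfg_scale": 7,
        "seed": -1,  # -1 asks for a random seed
        "width": 512,
        "height": 768,
    }


def generate(payload: dict, url: str = API_URL) -> dict:
    """POST the payload and return the decoded JSON response (hypothetical helper)."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


payload = build_txt2img_payload()
```

With the web UI running, `generate(payload)` should return a JSON object whose `images` entry holds the results as base64 strings.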

The hands should be pretty bad in most images. This is typical for an SD 1.5 model.

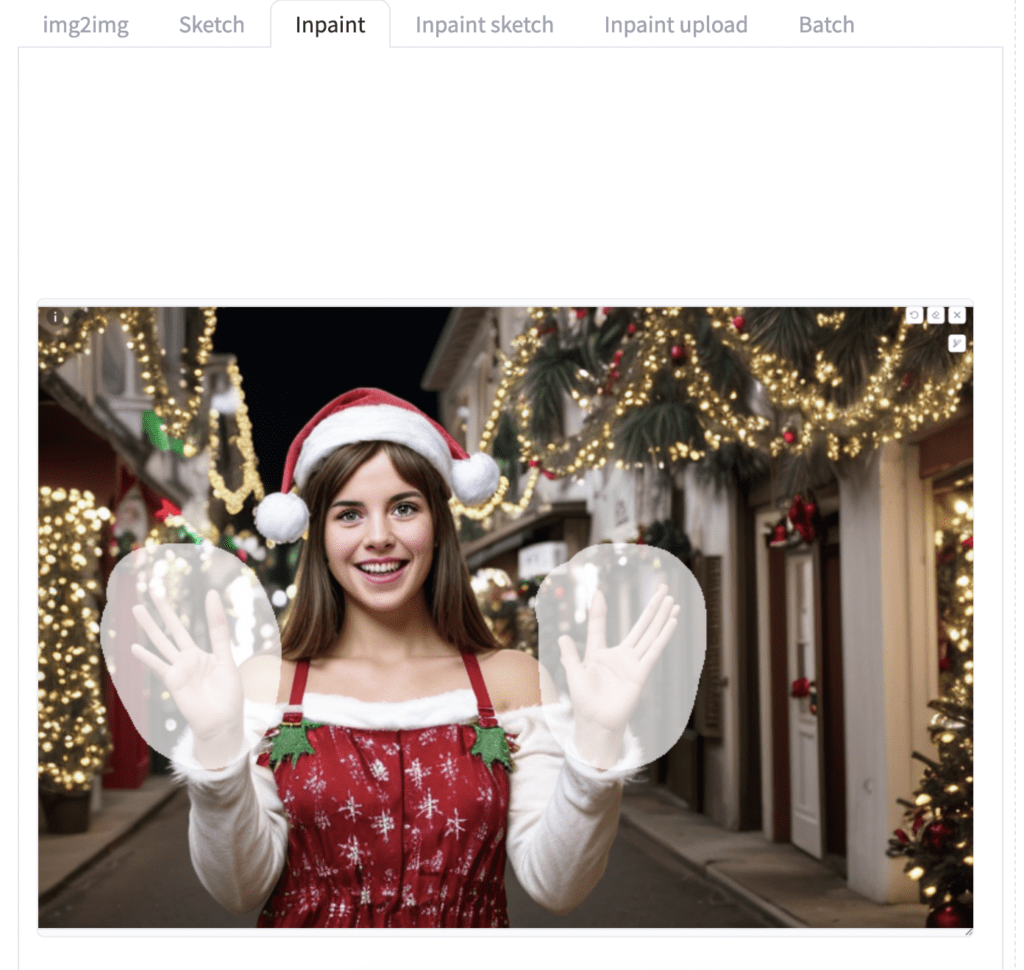

Step 2: Inpaint hands

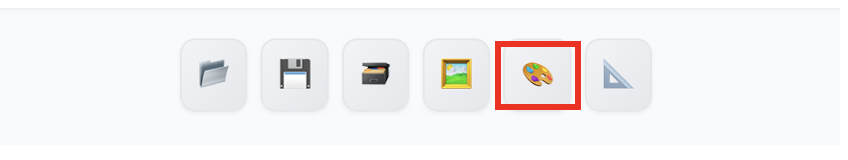

Now, let’s send this image to Inpainting by clicking the Send to Inpaint button under the image.

You should now be on the img2img > Generation > Inpaint tab.

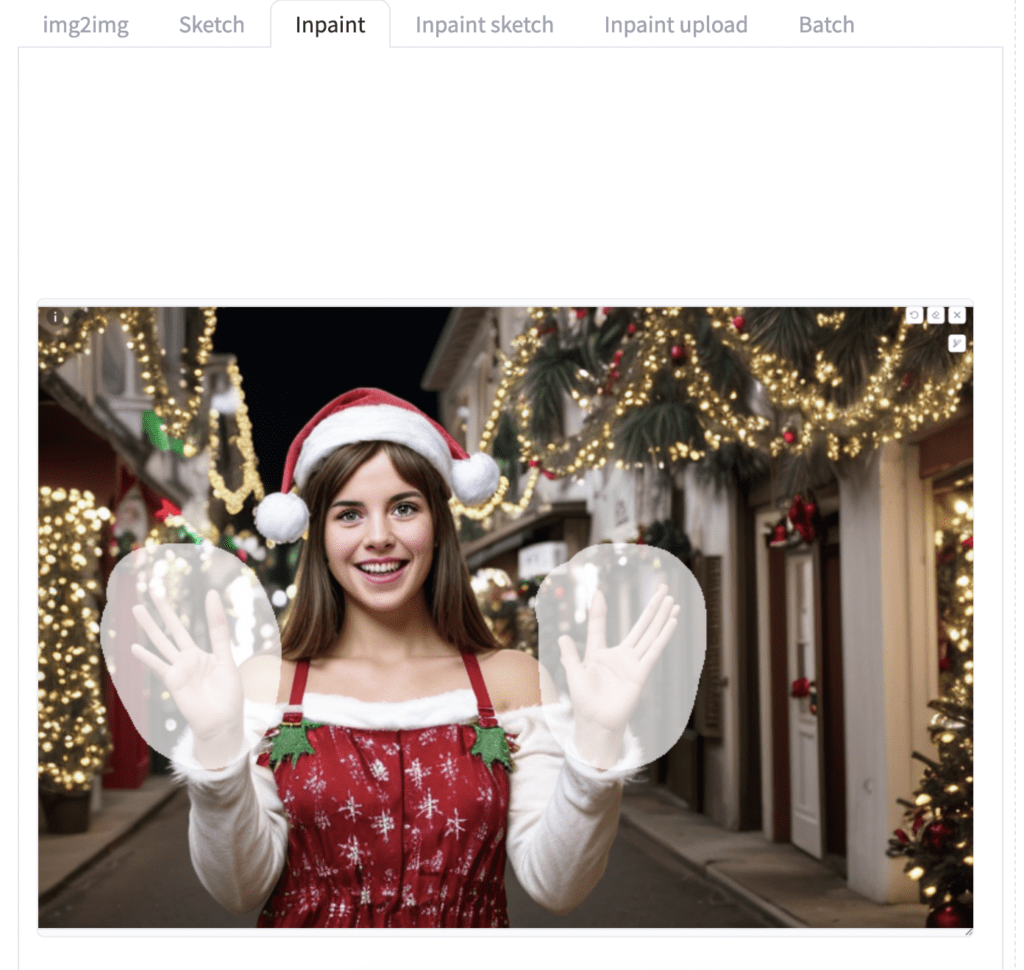

Mask the area you want to regenerate. For the best result, fix one hand at a time.

Set the Inpaint Area to Whole Picture.

Set denoising strength to 0.5. (Increase to change more)
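For readers who script the web UI, the inpainting settings above could be expressed roughly as follows. This is a sketch under assumptions: the field names (`init_images`, `mask`, `inpaint_full_res`, `n_iter`) are the ones I understand the `/sdapi/v1/img2img` endpoint to accept, the helper names are hypothetical, and the image and mask are passed in as raw file bytes that you have prepared yourself (a white-on-black mask covering one hand).

```python
import base64


def encode_image(image_bytes: bytes) -> str:
    """Base64-encode raw image bytes, the form the img2img API expects."""
    return base64.b64encode(image_bytes).decode("ascii")


def build_inpaint_payload(image_bytes: bytes, mask_bytes: bytes,
                          prompt: str, denoising_strength: float = 0.5) -> dict:
    """Assemble an img2img inpainting request for one masked hand."""
    return {
        "prompt": prompt,
        "init_images": [encode_image(image_bytes)],
        "mask": encode_image(mask_bytes),  # white = regenerate, black = keep
        "inpaint_full_res": False,         # False corresponds to "Whole Picture"
        "denoising_strength": denoising_strength,  # raise to change more
        "sampler_name": "DPM++ 2M Karras",
        "steps": 20,
        "cfg_scale": 7,
        "width": 512,
        "height": 768,
        "n_iter": 4,  # assumption: a few candidates to choose from
    }


# Placeholder bytes stand in for real PNG data in this illustration.
example = build_inpaint_payload(b"image-bytes", b"mask-bytes",
                                "a cute woman showing two hands")
```

Generating several candidates per request (`n_iter`) is one way to get a handful of images to pick from in a single call.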

This method requires some cherry-picking. Generate a few images and pick the best one to go forward with.

Here’s the one I chose.

Next, repeat the same process for the other hand. Use the Send to Inpaint button and redraw the mask.

You can also apply it to one finger to adjust its length.

After a few rounds of inpainting the hands and fingers, I got the following image with repaired hands.

ControlNet DWPose Inpainting

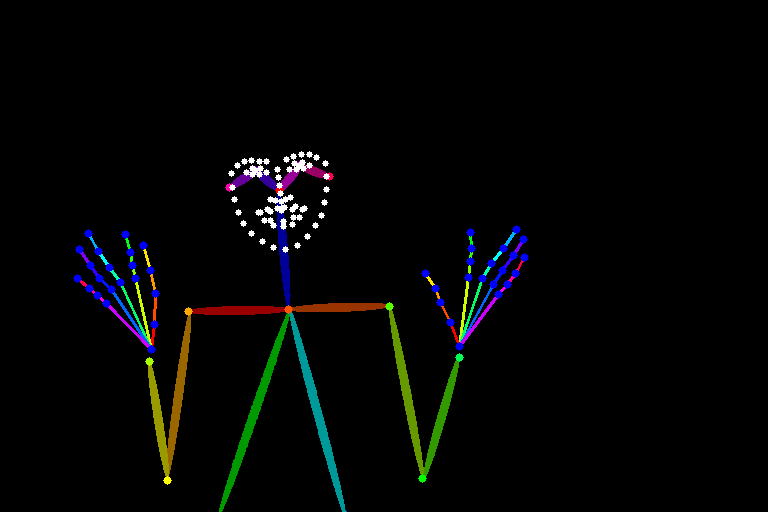

DWPose is a powerful preprocessor for ControlNet Openpose. It can extract human poses, including hands.

The trick is to let DWPose detect and guide the regeneration of the hands in inpainting. I will explain how it works.

You will need the ControlNet extension and OpenPose ControlNet model to apply this method. See this post for installation.

Step 2: Inpaint with DWPose

Mask the area you want to regenerate, which is the hands in this case. You should include a bit of the surrounding areas.

Set Inpaint area to Whole Picture.

It is important to set a size compatible with SD 1.5. It is 512×768 in this case for a 2:3 aspect ratio.

Set the denoising strength to 0.7.

In the ControlNet section, set:

- Enable: Yes

- Control Type: OpenPose

- Preprocessor: dw_openpose_full

- Model: control_v11p_sd15_openpose

Click Generate.

While not perfect, you should at least get hands with 5 fingers!

How does it work? When ControlNet is turned on in inpainting, it uses the image being inpainted as its reference. The DW OpenPose preprocessor detects detailed human poses, including the hands.

The detected pose correctly assumes that a hand has 5 fingers. However, the lengths and joints can still be incorrect.
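The DWPose setup can also be sketched as an API request fragment. This assumes the ControlNet extension reads its units from `alwayson_scripts` > `controlnet` > `args`, which is how I understand its API. The function names are hypothetical, and the model name may need the hash suffix shown in your Model dropdown. No control image is set in the unit, on the assumption that the extension then falls back to the img2img input image, matching the behavior described above.

```python
def dwpose_controlnet_unit() -> dict:
    """ControlNet unit matching the DWPose settings in this section."""
    return {
        "enabled": True,
        "module": "dw_openpose_full",           # the preprocessor
        "model": "control_v11p_sd15_openpose",  # may need a hash suffix
    }


def attach_controlnet(payload: dict, unit: dict) -> dict:
    """Return a copy of an img2img payload with one ControlNet unit attached."""
    updated = dict(payload)
    scripts = dict(updated.get("alwayson_scripts", {}))
    scripts["controlnet"] = {"args": [unit]}
    updated["alwayson_scripts"] = scripts
    return updated


# Only the settings named in this section; image and mask are omitted here.
inpaint_payload = {
    "denoising_strength": 0.7,
    "inpaint_full_res": False,  # "Whole Picture"
    "width": 512,
    "height": 768,
}
request_body = attach_controlnet(inpaint_payload, dwpose_controlnet_unit())
```

Because `attach_controlnet` copies the payload, the same base settings can be reused for a later round with ControlNet turned off.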

Refining the hands

To fix the hands further, you can do another round of inpainting.

If a finger is too long, you can mask only the part you want to erase.

Turn off the ControlNet and click Generate.

Now you get a shorter thumb.

Mask more of the surrounding area.

Increase the denoising strength to 0.85 and generate a new image.

Now you get a better hand.

You can do multiple rounds of this refinement by turning ControlNet on and off.
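To get a feel for why a higher denoising strength changes more, it can help to estimate how many sampling steps an img2img pass actually runs. The sketch below rests on an assumption about AUTOMATIC1111's default behavior (with the option to run exactly the number of steps on the slider left off): the pass runs roughly the sampling steps multiplied by the denoising strength. The function name is hypothetical and the result is an approximation, not a guarantee.

```python
def approx_img2img_steps(sampling_steps: int, denoising_strength: float) -> int:
    """Estimate the steps an img2img pass runs (assumed default behavior)."""
    return int(min(denoising_strength, 0.999) * sampling_steps)


# With 20 sampling steps, compare a gentle and a stronger setting.
for strength in (0.5, 0.75):
    print(strength, approx_img2img_steps(20, strength))
```

Under this assumption, a strength of 0.5 reworks the masked area for about half of the schedule, while higher values such as 0.85 start from a noisier image and leave less of the original hand intact.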

Upscaling

Finally, doing a standard ControlNet Tile Upscale can mitigate some issues coming from low resolution.

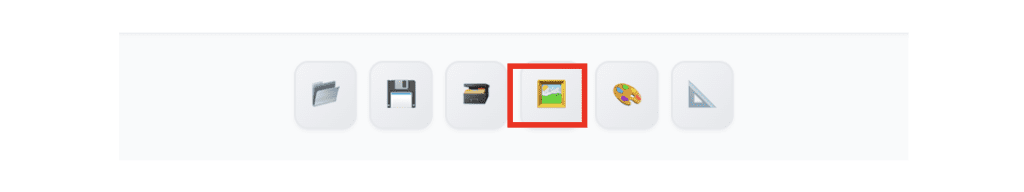

Send the image to the img2img tab by clicking the Send to Img2img button.

You should now be on the img2img > Generation > img2img tab.

Set Resize by to 2. We are upscaling the 512×768 image 2x to 1024×1536 pixels.

Set denoising strength to 0.9.

In the ControlNet section, set:

- Enable: Yes

- Control Type: Tile/Blur

- Preprocessor: tile_resample

- Model: control_v11f1e_sd15_tile

Generate an image. This adds some details to the hands to make them look more realistic.
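As a rough illustration, the tile upscale could be scripted like this. The sketch assumes the img2img API takes an explicit target width and height instead of a "Resize by" factor, so the target size is computed first (width, then height). The ControlNet fields follow the extension's API as I understand it, and both function names are hypothetical.

```python
from typing import Tuple


def upscaled_size(width: int, height: int, factor: float = 2.0) -> Tuple[int, int]:
    """Target (width, height) after scaling both sides by the same factor."""
    return int(width * factor), int(height * factor)


def build_tile_upscale_payload(width: int, height: int) -> dict:
    """img2img settings for a 2x ControlNet Tile upscale (image omitted)."""
    new_width, new_height = upscaled_size(width, height, 2.0)
    return {
        "denoising_strength": 0.9,
        "width": new_width,
        "height": new_height,
        "alwayson_scripts": {
            "controlnet": {
                "args": [{
                    "enabled": True,
                    "module": "tile_resample",            # the preprocessor
                    "model": "control_v11f1e_sd15_tile",  # may need a hash suffix
                }]
            }
        },
    }


tile_payload = build_tile_upscale_payload(512, 768)
```

The high denoising strength is workable here because the Tile model keeps the composition anchored to the input image.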

Here’s a comparison between the original and the final image.

You can also use this method in ComfyUI. However, the interactive nature of this approach makes it hard to use in ComfyUI effectively.

Applying to SDXL models

This inpainting method can only be used with an SD 1.5 model.

If you have generated an image with an SDXL model, you can work around it by switching to an SD 1.5 model for inpainting.

Make sure to scale the image back to a size compatible with the SD 1.5 model (e.g., 768×512).
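One way to pick a compatible size is to scale the SDXL image so its short side lands at 512 pixels while keeping the aspect ratio. The helper below is a hypothetical sketch. The 512-pixel short side and the rounding to multiples of 8 are my assumptions about what counts as "compatible", not rules stated by the article.

```python
from typing import Tuple


def sd15_compatible_size(width: int, height: int,
                         short_side: int = 512, multiple: int = 8) -> Tuple[int, int]:
    """Scale (width, height) so the short side is about `short_side`,
    rounding each side to the nearest multiple of `multiple`."""
    scale = short_side / min(width, height)

    def snap(value: int) -> int:
        return max(multiple, round(value * scale / multiple) * multiple)

    return snap(width), snap(height)


print(sd15_compatible_size(1024, 1536))  # a portrait SDXL image
print(sd15_compatible_size(1536, 1024))  # a landscape SDXL image
```

After inpainting at the smaller size, the image can be brought back up with the upscaling step described earlier.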

Hand Refiner

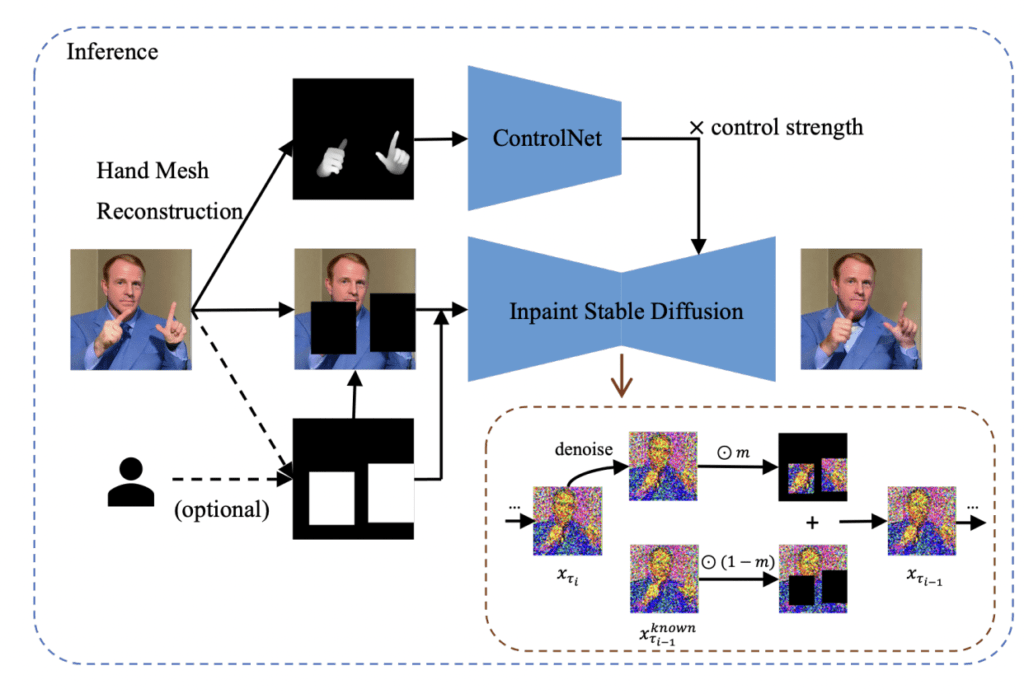

HandRefiner is a ControlNet model for fixing hands.

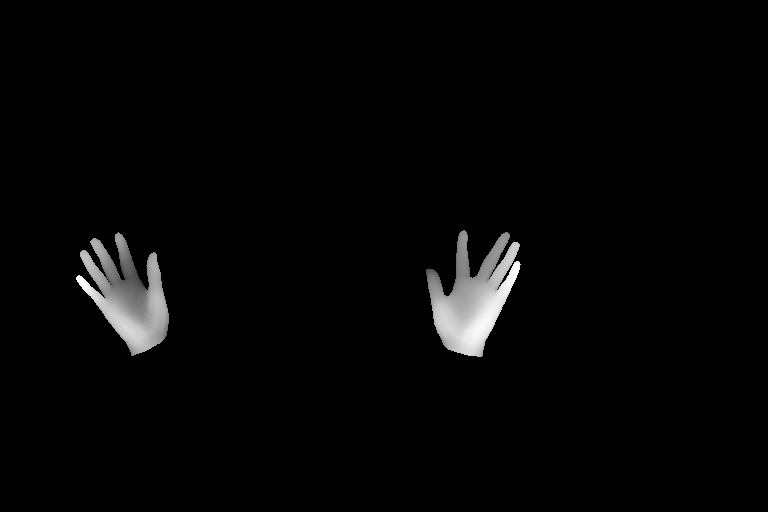

The preprocessor uses a hand reconstruction model, Mesh Graphormer, to generate the mesh of restored hands. It then converts the mesh to a depth map.

The ControlNet model is a depth model trained to condition hand generation. You can also use the standard depth model, but the results may not be as good.

Using HandRefiner in AUTOMATIC1111

Download the HandRefiner ControlNet model. Put the file in the folder stable-diffusion-webui > models > ControlNet.

After generating an image, use the Send to Inpaint button to send the image to inpainting.

Mask the area you want to regenerate, which is the hands in this case. You should include a bit of the surrounding areas.

Set Inpaint area to Whole Picture.

It is important to set a size compatible with SD 1.5. It is 512×768 in this case for a 2:3 aspect ratio.

Set the denoising strength to 0.75.

In the ControlNet section, set:

- Enable: Yes

- Control Type: Depth

- Preprocessor: depth_hand_refiner

- Model: control_sd15_inpaint_depth_hand_fp16

Update the ControlNet extension if you don’t see the preprocessor.

Click the Refresh button next to the Model dropdown if you don’t see the control model you just downloaded.
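If you are unsure whether the web UI has picked up the downloaded file, you can also ask the ControlNet extension which models it sees. This is a sketch under assumptions: it relies on the web UI running with `--api` and on the extension exposing a `/controlnet/model_list` endpoint that returns a `model_list` array, which is how I understand its API. The function names and the sample list (including the hash suffix) are made up for illustration.

```python
import json
import urllib.request
from typing import List, Optional

# Assumption: the web UI runs locally and was launched with --api.
BASE_URL = "http://127.0.0.1:7860"


def fetch_controlnet_models(base_url: str = BASE_URL) -> List[str]:
    """Ask the ControlNet extension for the model names it currently sees."""
    with urllib.request.urlopen(f"{base_url}/controlnet/model_list") as response:
        return json.load(response)["model_list"]


def find_model(installed: List[str], wanted: str) -> Optional[str]:
    """Return the first installed name starting with `wanted`, if any.

    Names in the list may carry a hash suffix, so match on the prefix.
    """
    for name in installed:
        if name.startswith(wanted):
            return name
    return None


# Illustrative list; a real one would come from fetch_controlnet_models().
sample_models = ["control_sd15_inpaint_depth_hand_fp16 [0000aaaa]"]
match = find_model(sample_models, "control_sd15_inpaint_depth_hand_fp16")
```

If `find_model` returns `None` for the real list, the file is probably in the wrong folder or the model list has not been refreshed yet.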

Click Generate. Now, you get fixed hands!

You should also get the control image. Check to see if it looks right.

I keep getting Error running postprocess_batch_list when using reforge with stability matrix with this particular model, any idea how I can fix it?

Control type should be “Depth”? is there any SDXL model for it?

Hand fixer is only available for SD 1.5

Do you have any solution (maybe in Comfy?) to generate good hands close to the side? It seems possible to inpaint/generate hands when they’re in front of the picture but how about different situations?

In many cases before, I simply took a picture of my own hands, then resized and pasted them into the image, and inpaint with a medium to high denoise strength.