Mochi is a new state-of-the-art local video model for generating short clips. What if you want to tell a story by chaining a few together? You can easily do that with this Mochi movie workflow, which generates 4 Mochi video clips and combines them into a longer video. The movie is generated from text and created in ComfyUI.

See an example of a Midwest-style movie below.

You must be a member of this site to download this workflow.


Software

We will use ComfyUI, a free AI image and video generator. You can use it on Windows, Mac, or Google Colab.

Think Diffusion provides an online ComfyUI service. They offer an extra 20% credit to our readers.

Read the ComfyUI beginner’s guide if you are new to ComfyUI. See the Quick Start Guide if you are new to AI images and videos.

Take the ComfyUI course to learn how to use ComfyUI step by step.

Step-by-step guide

Step 0: Update ComfyUI

This workflow does not require any custom nodes. Before loading the workflow, make sure your ComfyUI is up to date. The easiest way to do this is to use ComfyUI Manager.

Click the Manager button on the top toolbar.

Select Update ComfyUI.

Restart ComfyUI.

Step 1: Load the ComfyUI workflow

Log in and download the workflow JSON file below.

Drag and drop it onto ComfyUI to load it.
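Before you drag the file in, you can check that it is valid JSON and see which nodes it uses. This is a minimal sketch. It assumes the file is a standard ComfyUI UI-format workflow, which stores its nodes in a top-level `nodes` list where each node has a `type` field. The file name `mochi_movie_workflow.json` is hypothetical, so use whatever name your download has.

```python
import json
from collections import Counter


def load_workflow(path: str) -> dict:
    """Parse the workflow file; json.load raises an error if the download is corrupt."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def count_node_types(workflow: dict) -> Counter:
    """Count how many nodes of each type a UI-format workflow contains."""
    # UI-format workflows keep their nodes in a top-level "nodes" list.
    return Counter(node["type"] for node in workflow.get("nodes", []))


if __name__ == "__main__":
    # Hypothetical file name: replace it with the name of your downloaded file.
    wf = load_workflow("mochi_movie_workflow.json")
    for node_type, n in count_node_types(wf).most_common():
        print(f"{n:3d}  {node_type}")
```

A movie built from 4 clips should show several repeated node types, such as one latent-video node per clip.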

Step 2: Download the Mochi FP8 checkpoint

Download the Mochi checkpoint model and put it in the folder ComfyUI > models > checkpoints.
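If the checkpoint does not appear in the loader's list, it is often in the wrong folder. ComfyUI's default folder for checkpoints is `models/checkpoints`. The sketch below lists `.safetensors` files in that folder whose names contain "mochi". It assumes ComfyUI is installed in a folder called `ComfyUI` and that the file name includes "mochi" in lowercase (for example, `mochi_preview_fp8_scaled.safetensors`). Adjust both if your setup differs.

```python
from pathlib import Path


def find_checkpoints(comfyui_root: str, pattern: str = "*mochi*") -> list[Path]:
    """Return .safetensors files matching `pattern` in ComfyUI's models/checkpoints folder."""
    folder = Path(comfyui_root) / "models" / "checkpoints"
    if not folder.is_dir():
        raise FileNotFoundError(f"No checkpoints folder at {folder}")
    # glob matching is case-sensitive on Linux, so the pattern assumes a lowercase name.
    return sorted(p for p in folder.glob(pattern) if p.suffix == ".safetensors")


if __name__ == "__main__":
    # Assumption: ComfyUI lives in ./ComfyUI. Change this path for your install.
    matches = find_checkpoints("ComfyUI")
    print(matches or "No Mochi checkpoint found; check the file name and folder.")
```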

Step 3: Generate video

Click Queue Prompt to generate the video. Here is the resulting MP4 video:
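You can also queue the workflow from a script instead of clicking the button. ComfyUI's local server accepts jobs as a POST to its `/prompt` endpoint, with a JSON body of the form `{"prompt": ...}`. This sketch assumes three things: the server runs at the default `http://127.0.0.1:8188`, the workflow was exported in ComfyUI's API format, and the file name `mochi_movie_workflow_api.json` is a hypothetical placeholder. The exact menu entry for the API-format export depends on your frontend version. The UI-format file you drag and drop is not accepted by this endpoint.

```python
import json
import urllib.request
import uuid

COMFYUI_URL = "http://127.0.0.1:8188"  # ComfyUI's default local address; change if needed


def build_prompt_payload(api_workflow: dict, client_id: str) -> bytes:
    """Wrap an API-format workflow in the JSON body that the /prompt endpoint expects."""
    return json.dumps({"prompt": api_workflow, "client_id": client_id}).encode("utf-8")


def queue_prompt(api_workflow: dict, url: str = COMFYUI_URL) -> dict:
    """POST the workflow to /prompt and return the server's JSON reply."""
    payload = build_prompt_payload(api_workflow, client_id=str(uuid.uuid4()))
    req = urllib.request.Request(
        f"{url}/prompt", data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())


if __name__ == "__main__":
    # Hypothetical file name for the API-format export of this workflow.
    with open("mochi_movie_workflow_api.json", encoding="utf-8") as f:
        print(queue_prompt(json.load(f)))
```

On success, the reply includes a `prompt_id` that identifies the queued job.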

Customization

Prompts

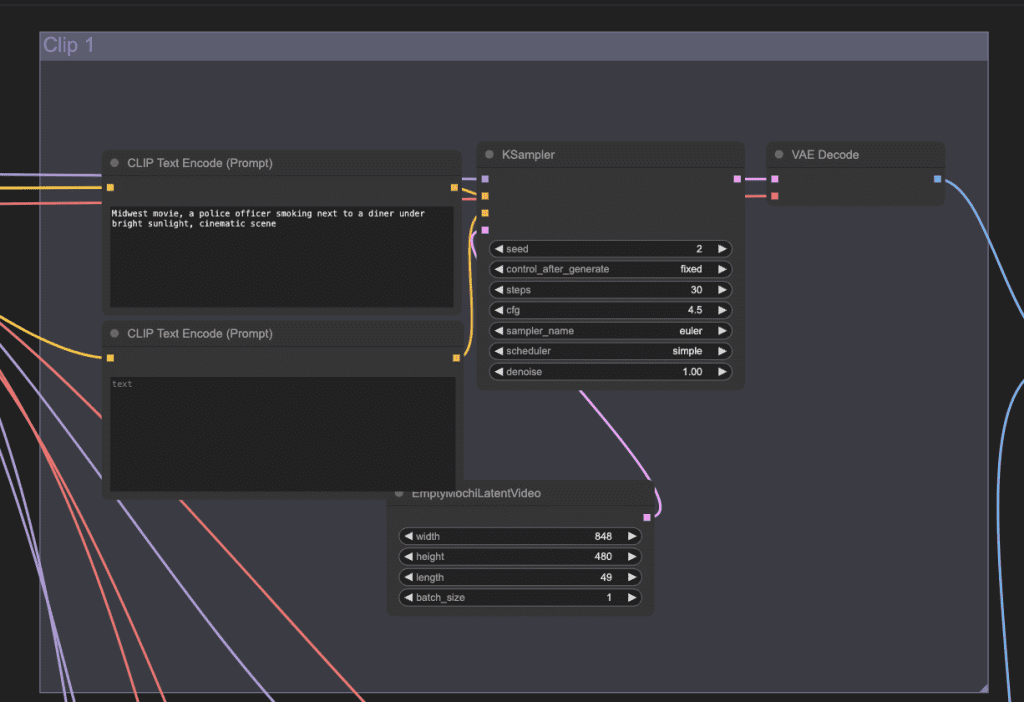

Change the prompts of the video clips to customize the video. For example, change the prompt in the Clip 1 group to change the first video.

Tips:

- Use action words to describe how the subject should move. For example: run, walk, drink coffee.

- Use camera words to describe the camera's motion. For example: zoom in, zoom out.
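To apply the tips above consistently across the 4 clip groups, you can write each prompt as a subject, an action, and an optional camera move. The helper below and its story beats are hypothetical illustrations, not part of the workflow. Paste each printed line into the matching Clip group's prompt.

```python
from typing import Optional


def build_clip_prompt(subject: str, action: str, camera: Optional[str] = None) -> str:
    """Combine a subject, an action phrase, and an optional camera move into one prompt."""
    parts = [f"{subject} {action}".strip()]
    if camera:
        parts.append(f"camera {camera}")
    return ", ".join(parts)


# Hypothetical story beats, one per clip group (Clip 1 to Clip 4).
story = [
    ("A farmer in a straw hat", "walks across a cornfield at sunrise", "slowly zooms out"),
    ("The farmer", "is drinking coffee on a wooden porch", "zooms in"),
    ("A red pickup truck", "drives down a dusty gravel road", None),
    ("The farmer", "waves goodbye as the sun sets", "zooms out"),
]

if __name__ == "__main__":
    for i, (subject, action, camera) in enumerate(story, start=1):
        print(f"Clip {i}: {build_clip_prompt(subject, action, camera)}")
```

Reusing the same subject wording ("The farmer") across clips is one way to keep the story coherent. Each clip is still generated independently, so consistency is not guaranteed.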

Video length

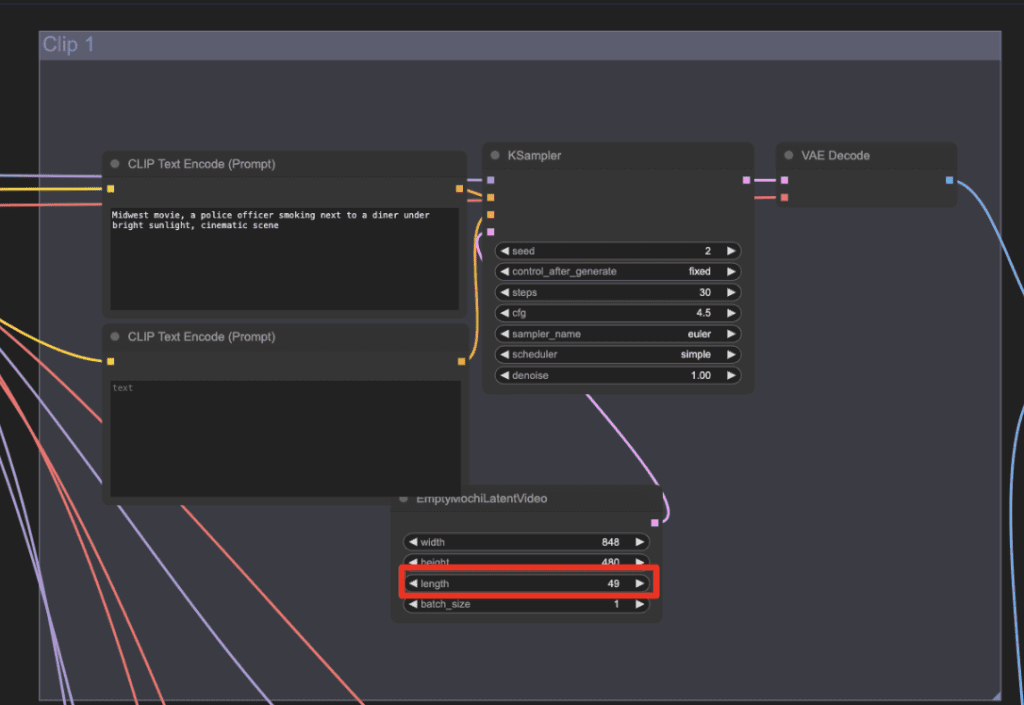

Adjust the length parameter in the Empty Mochi Latent Video node to change the length of each clip.
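The `length` value counts frames, so a clip's duration depends on the frame rate it is saved at. This is a small arithmetic sketch. It assumes the 4 clips are joined end to end with no overlap, and it uses a hypothetical frame rate of 24 fps. Check the frame rate set in your workflow's video output node.

```python
def clip_seconds(length_frames: int, fps: float) -> float:
    """Duration of one clip: number of frames divided by the playback frame rate."""
    return length_frames / fps


def movie_seconds(length_frames: int, fps: float, n_clips: int = 4) -> float:
    """Total duration when n_clips clips of equal length are joined back to back."""
    return n_clips * clip_seconds(length_frames, fps)


if __name__ == "__main__":
    fps = 24  # Assumption: replace with the frame rate used by your video output node.
    for length in (25, 49):
        print(f"length={length}: {clip_seconds(length, fps):.2f} s per clip, "
              f"{movie_seconds(length, fps):.2f} s total")
```

Doubling `length` roughly doubles the movie's duration. It also increases generation time and memory use.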

How do I solve this? I have an RTX 5080 with 16 GB of VRAM. VAEDecode fails with:

CUDA error: out of memory

CUDA kernel errors might be asynchronously reported at some other API call, so the stacktrace below might be incorrect.

For debugging consider passing CUDA_LAUNCH_BLOCKING=1

Compile with `TORCH_USE_CUDA_DSA` to enable device-side assertions.

You can try reducing the video resolution or length, but Mochi is known to be memory-intensive.
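To get a feel for how much reducing resolution or length helps, you can compare the number of pixels the VAE has to decode. This is a rough sketch. It assumes decode memory scales approximately with output pixel count, which is a simplification because real usage depends on the decoder implementation. The sizes below are illustrative values, not the workflow's settings.

```python
def pixel_volume(width: int, height: int, frames: int) -> int:
    """Total output pixels the VAE must decode for one clip."""
    return width * height * frames


def relative_decode_cost(orig: tuple[int, int, int], new: tuple[int, int, int]) -> float:
    """Ratio of new to original pixel volume; a rough proxy for VAE decode memory."""
    return pixel_volume(*new) / pixel_volume(*orig)


if __name__ == "__main__":
    # Illustrative (width, height, frames) values; substitute your own settings.
    before = (848, 480, 49)
    after = (640, 360, 31)
    print(f"New settings decode about {relative_decode_cost(before, after):.0%} of the pixels")
```

Because width, height and frame count multiply, trimming all three together shrinks the workload much faster than cutting any one of them.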

Interesting model. I found it responded well to detailed prompts that I made with ChatGPT. I found the animations weren't very high quality at the resolution in the workflow. I'll try increasing the image size, but are there any other tweaks that are worth doing?

Is there a way to save the animation in other formats? WebP is difficult to view, and I had to find an online converter to make an MP4.

Yes, use the Video Combine node. I have updated the workflow.