FramePack is a video generation method that needs little VRAM (6 GB) regardless of the video length. It supports image-to-video: it turns an image into a video guided by a text prompt.

In this tutorial, I will talk about:

- An introduction to FramePack.

- How to use FramePack in Windows.

5-second FramePack video:

10-second FramePack video:

What is FramePack?

FramePack predicts the next frame based on the previous frames in the video. It uses a fixed context length in the transformer regardless of the video length. This overcomes a drawback of many video generators (such as Wan 2.1, Hunyuan, and LTX Video), whose maximum video length is limited by available memory. With FramePack, generating a 1-second video takes the same amount of VRAM as generating a 1-minute video.

Importantly, FramePack is plug-and-play: it works with existing video models after finetuning some layers and changing some of the sampling code.

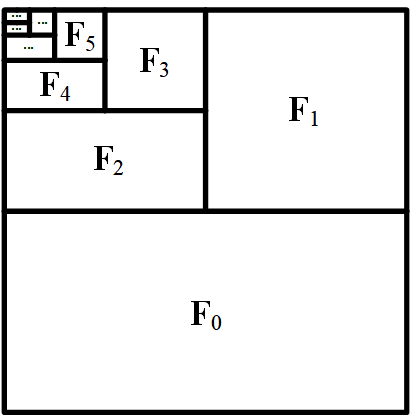

Frame packing

When predicting the next frame of a video, not all previous frames are equally important. The key insight of FramePack is to downsample each context frame according to how far it is from the frame being predicted: the further away a frame is, the more it is downsampled.
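To see why this keeps the context bounded, here is a minimal sketch. It assumes each step back in time halves the number of tokens kept for a frame and that a full-resolution frame costs 1536 tokens. Both numbers are illustrative assumptions, not values from this tutorial, and the function name is hypothetical.

```python
def packed_context_tokens(num_past_frames, tokens_per_frame=1536, compression=2):
    """Total context size when the frame `age` steps back keeps
    tokens_per_frame // compression**age tokens (illustrative assumption)."""
    total = 0
    for age in range(num_past_frames):
        # Older frames are downsampled more aggressively.
        total += tokens_per_frame // (compression ** age)
    return total


# Without packing, the context grows linearly with the number of frames.
def unpacked_context_tokens(num_past_frames, tokens_per_frame=1536):
    return num_past_frames * tokens_per_frame
```

Under these assumptions, the packed context stops growing after a handful of frames and never reaches twice the cost of a single frame, while the unpacked context keeps growing with every frame added.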

Anti-drifting sampling

Errors accumulate when predicted frames are used as context for further predictions. This accumulation is called drift.

The inverted anti-drifting sampling used in the software generates the video in reverse order. Each generation is anchored on the high-quality initial frame.
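The reverse ordering can be pictured with a small sketch. It only computes the order in which time ranges would be produced and does not call any model. Splitting the video into fixed-length sections, the section length, and the function name are all assumptions made for illustration.

```python
import math


def section_schedule(total_seconds, section_seconds):
    """Return (start, end) time ranges in generation order:
    the last section of the video first, the first section last."""
    num_sections = math.ceil(total_seconds / section_seconds)
    schedule = []
    for index in range(num_sections - 1, -1, -1):
        start = index * section_seconds
        end = min(total_seconds, (index + 1) * section_seconds)
        schedule.append((start, end))
    return schedule
```

For a 5-second video split into 2-second sections, this yields the ranges 4 to 5, then 2 to 4, then 0 to 2, so the section next to the input image is generated last.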

Video model

The demo software applies FramePack to the Hunyuan Video model.

Installing FramePack on Windows

FramePack is open-source software. You can use it for free on your local machine with an NVIDIA RTX 3000 series video card or above.
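If you are unsure which card you have, the following sketch lists your GPUs and their total memory. It assumes the NVIDIA driver is installed so that the `nvidia-smi` command is on your PATH; the helper names are hypothetical.

```python
import subprocess


def parse_gpu_csv(text):
    """Parse lines such as 'NVIDIA GeForce RTX 3060, 12288'
    into (name, vram_in_gib) tuples."""
    gpus = []
    for line in text.strip().splitlines():
        if not line.strip():
            continue
        name, memory_mib = line.rsplit(",", 1)
        gpus.append((name.strip(), int(memory_mib) / 1024))
    return gpus


def query_gpus():
    """Ask nvidia-smi for each GPU's name and total memory in MiB."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_csv(result.stdout)


# Example use on a machine with an NVIDIA driver:
#     for name, vram in query_gpus():
#         print(f"{name}: {vram:.1f} GiB")
```

Compare the printed name and memory against the requirement above.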

If you have problems running FramePack locally, I maintain a notebook that you can run on Google Colab.

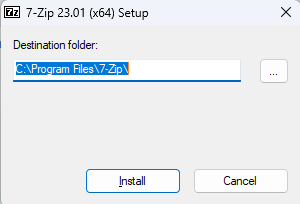

Step 1: Install 7-Zip

You need 7-Zip to uncompress FramePack’s zip file.

Download 7-Zip on this page or use this direct download link.

Double-click the downloaded exe file to run it. Click Install to install 7-Zip on your PC.

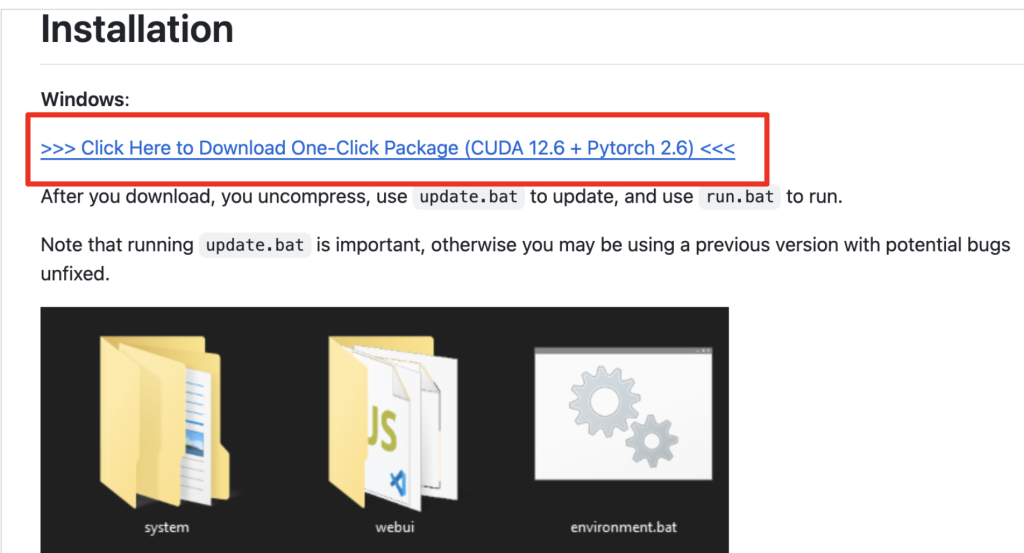

Step 2: Download FramePack

Go to FramePack’s GitHub page. Click the Download link under Windows.

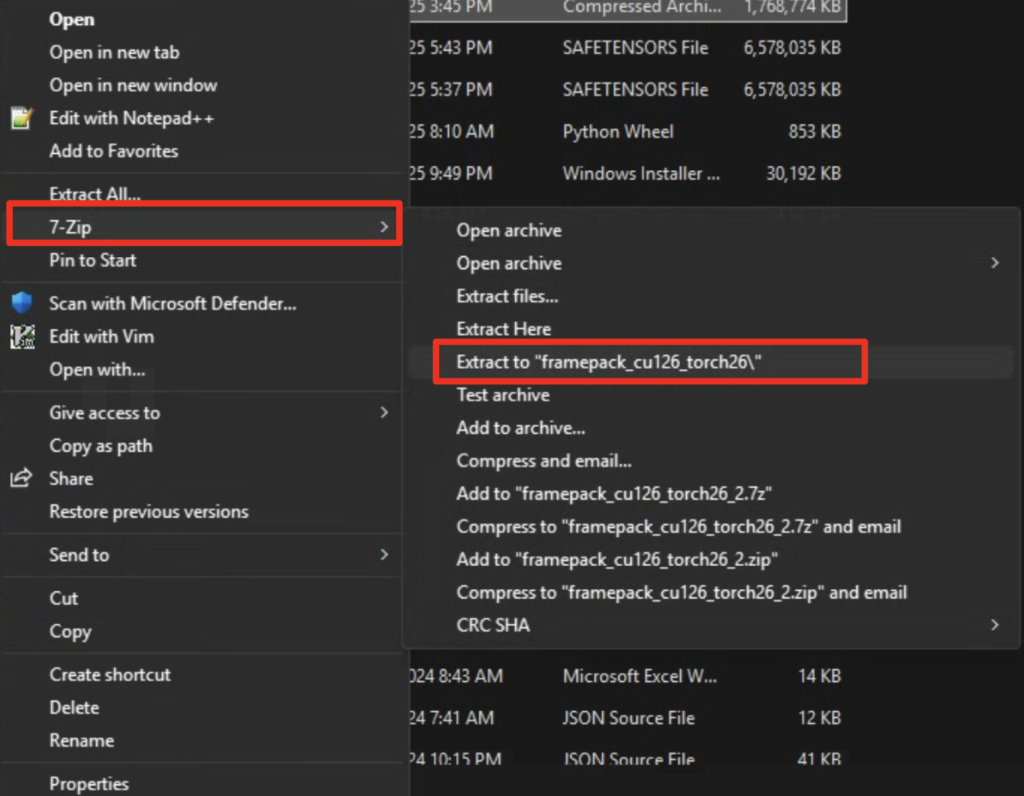

Step 3: Uncompress FramePack

Right-click on the downloaded file, select Show More Options > 7-Zip > Extract to “framepack_cu126_torch26\”.

When it is done, you should see a new folder named framepack_cu126_torch26. You can move the folder to any location you like.

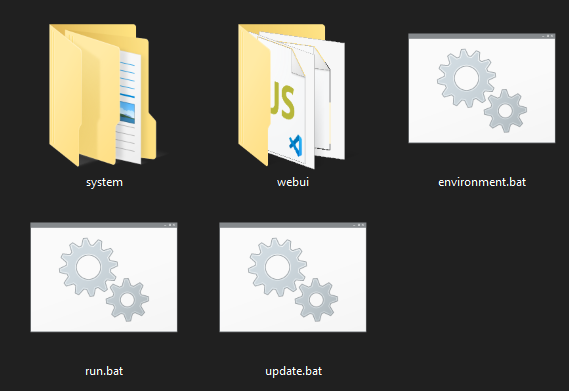

Step 4: Update FramePack

In the folder framepack_cu126_torch26, double-click the file update.bat to update FramePack.

Step 5: Run FramePack

In the folder framepack_cu126_torch26, double-click the file run.bat to start FramePack.

The first start takes a while because FramePack needs to download about 30 GB of model files.
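Before the first run, you may want to confirm that the drive has room for the download. This is a minimal sketch using Python’s standard library; the 30 GB threshold comes from the estimate above, and the function names are hypothetical.

```python
import shutil


def free_space_gb(path="."):
    """Free space in GB on the drive that contains `path`."""
    return shutil.disk_usage(path).free / 1e9


def has_room_for_models(path=".", required_gb=30):
    """True if the drive has at least `required_gb` GB free."""
    return free_space_gb(path) >= required_gb


# Example: run this from inside the FramePack folder.
#     print(f"{free_space_gb():.0f} GB free, enough: {has_room_for_models()}")
```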

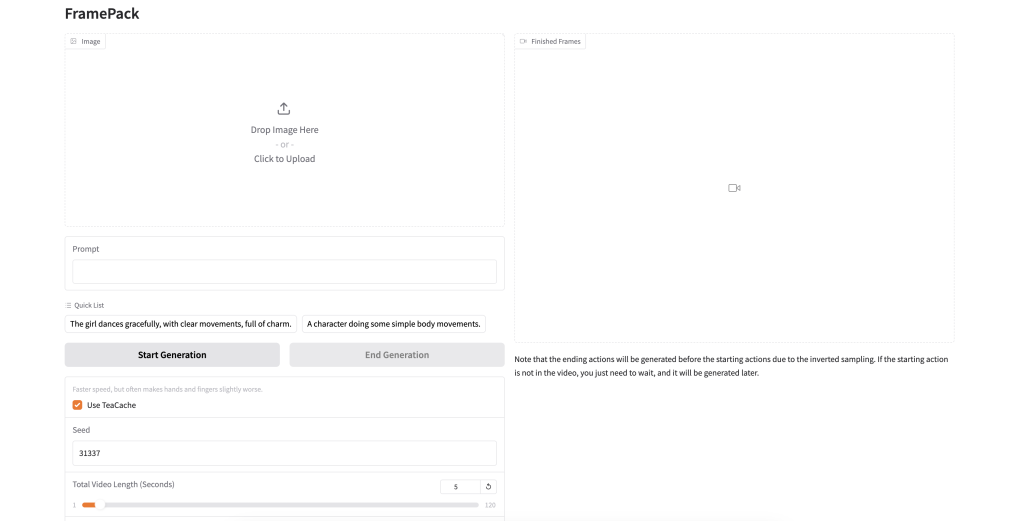

Using FramePack

FramePack generates a short video clip from an input image, which serves as the initial frame, and a text description of the video.

In this section, we will use the video generation settings recommended by FramePack.

Step 1: Upload the initial image

Upload the following image to FramePack’s Image canvas.

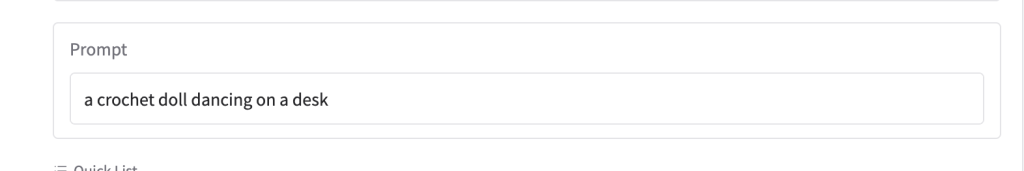

Step 2: Enter the prompt

Enter the following prompt in FramePack.

a crochet doll dancing on a desk

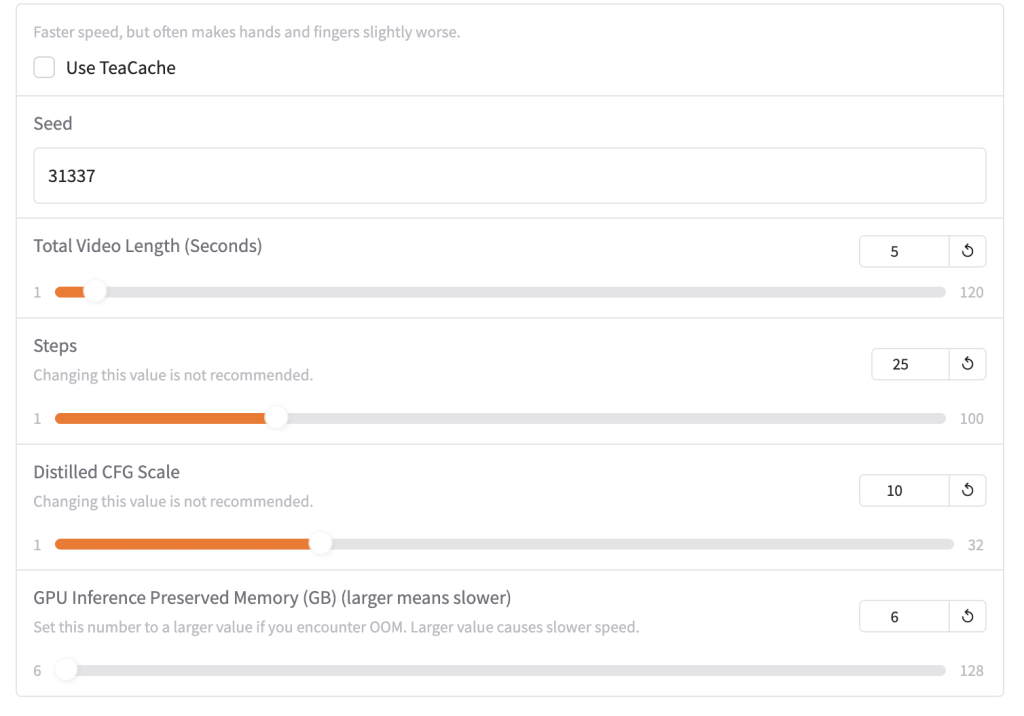

Step 3: Revise video settings

Revise the generation settings.

- TeaCache: Speeds up video generation but may introduce artifacts in small details, such as fingers.

- Seed: Different values generate different videos.

- Steps: The number of diffusion steps. Keep the default setting.

- Distilled CFG Scale: The CFG scale controls how closely the prompt should be followed. Keep the default setting.

- GPU inference preserved memory (GB): Increase this value if you run into an out-of-memory error.

Step 4: Generate a video

Click Start Generation to generate a video.

FramePack generates the end of the video first and then extends it toward the beginning. On the console, you will see several progress bars before a video is generated.

It takes about 10 minutes to generate a 5-second video on an RTX 4090 GPU.
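For planning longer videos, a rough estimate can be extrapolated from that figure. The sketch below assumes generation time grows linearly with video length, which this tutorial does not measure, so treat the result as a ballpark only. The function name is hypothetical.

```python
def estimate_minutes(video_seconds, minutes_per_video_second=10 / 5):
    """Linear extrapolation from '10 minutes for a 5-second video'.
    Assumes time scales linearly with length (unverified)."""
    if video_seconds < 0:
        raise ValueError("video_seconds must be non-negative")
    return video_seconds * minutes_per_video_second
```

Under this assumption, a 10-second video would take about 20 minutes and a 1-minute video about 2 hours on the same card.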

Longer videos with FramePack

Unlike Wan 2.1, Hunyuan, and LTX Video, FramePack uses the same amount of VRAM regardless of the video’s length. That means you can generate a minute-long video using 6 GB VRAM!

See this 10-second video:

Reference

GitHub – lllyasviel/FramePack: Lets make video diffusion practical!

It works well, but the AI lets its imagination run wild. People are suddenly displayed in a landscape image. I don’t know how to prevent this. I’ve tried several times with different settings.

I successfully installed FramePack (using Pinokio). It starts, but it does not seem to work with my NVIDIA GeForce GTX 1060 (6GB) graphics card. Are there any settings, tweaks, or installations I could do to get this working?

I have it installed on my PC (RTX 4090). First I tried to install it in Docker, but that failed, so it’s on my PC now. I find it’s good for videos, but it does not follow the prompt very well. I have started to increase the video length, hoping I will be able to use some of the video.

Last night I asked it for a 1-minute video and broke it down into 5 scenes, starting with an image. It never got past the first scene and just kept extending it.

All my generations have been done with a start image so far. I will be running it most of today, as it’s not that fast to kick out the final results. Hopefully by the end of the day I will have learnt a few things about how to get the best out of it.

There is a ComfyUI node for that already.

https://github.com/kijai/ComfyUI-FramePackWrapper

It works great on my weak 6 GB graphics card, thanks!

Another humble request here for a notebook since this is a Windows-only solution — would be much appreciated. Some of us Mac-based folks would have a beefed-up Windows system if we could.

First of all, you are not reaching the right people by complaining here. These are all open source solutions, you need to complain to the people who maintain the project.

Secondly, it’s not a Windows/Mac thing. If anything, much of this software runs best on Linux. Most of the problems on your Mac are related to the GPU and the amount of VRAM. Some projects are highly optimized for (or rely entirely on) NVIDIA’s CUDA. Some require a lot more VRAM than your Mac has. There are projects optimized for Mac, but they are unlikely to be the cutting-edge software that you are interested in. Maybe wait a couple of years. When the field becomes more mature, everyone will be treated equally.

It’s unfortunate you decided I was complaining. I was stating a fact that I would indeed utilize a Windows (or Linux) system with a high-powered GPU if I could, but I don’t have access to either of those systems. I understand that the majority of image and video generation is optimized for NVIDIA technology — I’ve been working with Stable Diffusion in some form or another for the last 2+ years. In my case, the limitations of the Mac system are not based solely on VRAM/GPU but rather a lack of supporting technologies, such as Sage Attention and Triton. These are simply unavailable on MacOS, while the underlying tools used for actual generation like ComfyUI, Forge, Auto1111, Invoke, etc, are all intended to run on the platform. They’ve worked fine for me (even if taking a bit longer to run), but video continues to be somewhat elusive.

I’ve also been a paid supporter of Andrew since 2023, so I imagined it was reasonable to add my voice to an already existing request for an alternate solution.

In the future, you might consider asking people about their experience and system capabilities before making assumptions about their comments.

This is amazing Andrew! Works perfectly and was super easy to set up. Thanks for posting this.

This looks like this will be extremely useful, Andrew. Hope you can put it into a Colab notebook at some point.

The 5 sec video has a bug and won’t play

The file may be too large. I replaced it with a smaller one.

Will make a notebook.

Great, thanks, Andrew. If you have to choose a model to go with it, I think Wan2.1 would be my choice 🙂

me too but currently only hunyuan is available. 🙂