Long-time member Heinz Zysset kindly shares this high-resolution text-to-video workflow.

This workflow uses:

- The Rapid All-In-One (AIO) Wan 2.2 checkpoint model to generate videos. It merges multiple checkpoints, such as text-to-video and image-to-video, into one model and supports fixing the first and last frames.

- An AI upscaler model to enlarge the video.

Software needed

We will use ComfyUI, a free AI image and video generator. You can use it on Windows, Mac, or Google Colab.

Think Diffusion provides an online ComfyUI service. They offer an extra 20% credit to our readers.

Read the ComfyUI beginner’s guide if you are new to ComfyUI. See the Quick Start Guide if you are new to AI images and videos.

Take the ComfyUI course to learn how to use ComfyUI step by step.

Wan 2.2 upscale workflow

Step 1: Download models

Download the Wan 2.2 AIO model wan2.2-t2v-rapid-aio.safetensors. Put it in the ComfyUI > models > checkpoints folder.

Download the 4x-Ultrasharp model. Put it in the ComfyUI > models > upscale_models folder.
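If you want to double-check that both files landed in the right folders, a small script can do it. This is only a sketch: the function name `check_models` is made up for illustration, you have to pass in your own ComfyUI folder, and because the exact file name of the upscaler is not given here, it simply looks for any file with "ultrasharp" in its name.

```python
from pathlib import Path


def check_models(comfy_root):
    """Return a list of problems; an empty list means both models were found."""
    models = Path(comfy_root) / "models"
    problems = []

    # Checkpoint file name and folder as given in Step 1.
    checkpoint = models / "checkpoints" / "wan2.2-t2v-rapid-aio.safetensors"
    if not checkpoint.is_file():
        problems.append(f"missing checkpoint: {checkpoint}")

    # Assumption: the upscaler's file name contains "ultrasharp" (any case).
    upscale_dir = models / "upscale_models"
    found = upscale_dir.is_dir() and any(
        "ultrasharp" in p.name.lower()
        for p in upscale_dir.iterdir()
        if p.is_file()
    )
    if not found:
        problems.append(f"no UltraSharp upscaler found in: {upscale_dir}")

    return problems
```

Call it with the path to your ComfyUI folder, for example `print(check_models("ComfyUI"))`. An empty list means both models are where the workflow expects them.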

Step 2: Download the workflow

Download the workflow JSON file below.

Drop the file into ComfyUI to load the workflow.
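If you are curious which nodes the workflow file uses before loading it, you can list them with a few lines of Python. Treat this as a rough sketch: it assumes the file was saved from the ComfyUI editor and keeps its nodes in a top-level "nodes" list where each entry has a "type" field. The function names are made up for illustration.

```python
import json
from collections import Counter


def load_workflow(path):
    """Read a workflow JSON file into a Python dict."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def node_types(workflow):
    """Count how often each node type appears in the workflow."""
    # Assumption: nodes live in a top-level "nodes" list, each with a "type" string.
    return Counter(
        node.get("type", "<unknown>") for node in workflow.get("nodes", [])
    )
```

For example, `node_types(load_workflow("workflow.json"))` (the file name is a placeholder) returns a counter of node types. Any type that does not ship with ComfyUI comes from a custom node pack.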

Step 3: Install missing nodes

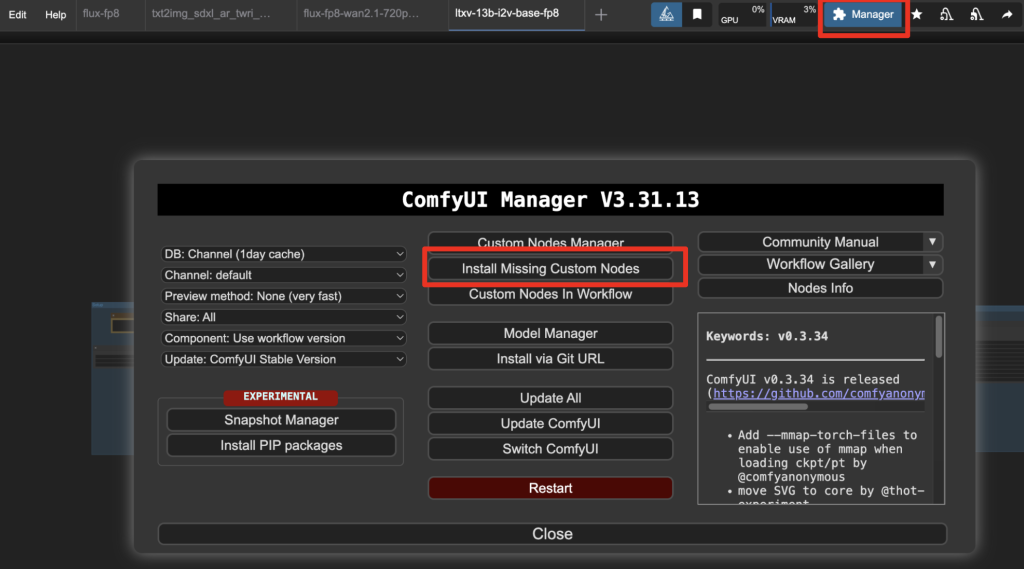

If you see nodes with red borders, you don’t have the custom nodes required for this workflow. You should have ComfyUI Manager installed before performing this step.

Click Manager > Install Missing Custom Nodes.

Install the nodes that are missing.

Restart ComfyUI.

Refresh the ComfyUI page.

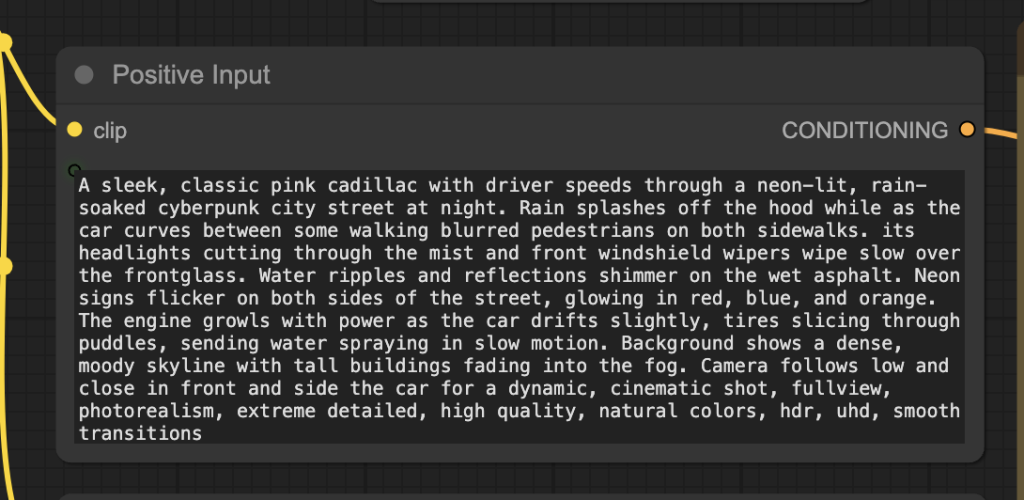

Step 4: Revise the prompt

Revise the prompt to match the video you want to generate.

Step 5: Generate a video

Click the Run button to run the workflow.

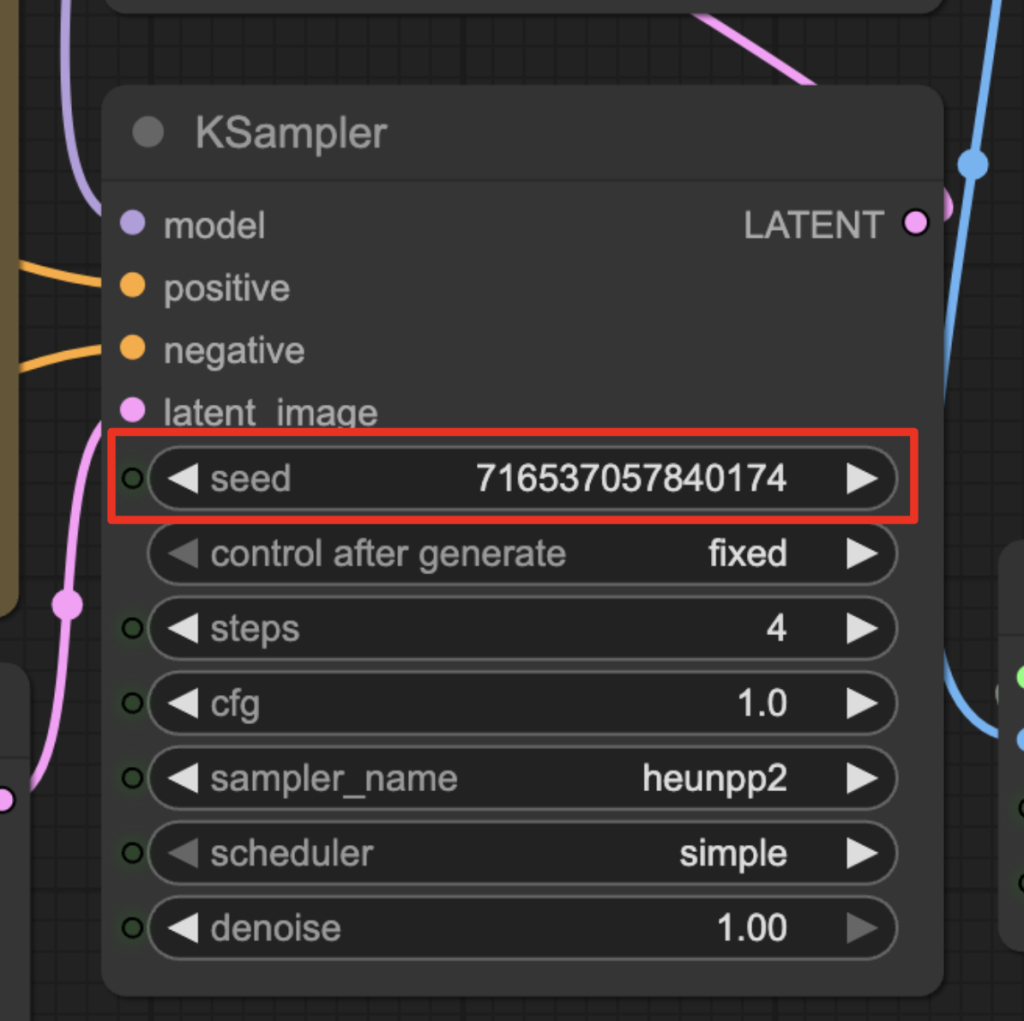

Change the seed value in the KSampler node to generate a new video.
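If you would rather script the seed change than edit it by hand, the sketch below shows one way. It relies on several assumptions: the workflow has been exported in ComfyUI's API format (a dict of nodes, each with "class_type" and "inputs"), the sampler nodes have a class type starting with "KSampler" and store the seed under "seed" or "noise_seed", and a ComfyUI server is listening locally on port 8188. The helper names are made up for illustration.

```python
import copy
import json
import random
import urllib.request


def with_new_seed(workflow, seed=None):
    """Return a copy of an API-format workflow with the sampler seeds replaced."""
    if seed is None:
        seed = random.randrange(2**32)
    updated = copy.deepcopy(workflow)
    for node in updated.values():
        # Assumption: sampler nodes have a class_type starting with "KSampler".
        if not isinstance(node, dict):
            continue
        if not str(node.get("class_type", "")).startswith("KSampler"):
            continue
        inputs = node.get("inputs", {})
        for key in ("seed", "noise_seed"):
            # Only overwrite plain numbers; a list here is a link to another node.
            if isinstance(inputs.get(key), int):
                inputs[key] = seed
    return updated


def queue_prompt(workflow, server="http://127.0.0.1:8188"):
    """Send the workflow to a running ComfyUI server (assumed address)."""
    body = json.dumps({"prompt": workflow}).encode("utf-8")
    request = urllib.request.Request(
        f"{server}/prompt",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())
```

With ComfyUI running, `queue_prompt(with_new_seed(workflow))` would queue one more video with a fresh random seed. The original workflow dict is left untouched, so you can call it in a loop to generate several variations.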