Tutorials

How to use ComfyUI API nodes

ComfyUI is known for running local image and video AI models. Recently, it added support for running proprietary closed models …

Clone Your Voice Using AI (ComfyUI)

Have you ever wondered how those deepfakes of celebrities like Mr. Beast were able to clone their voices? Well, those …

How to run Wan VACE video-to-video in ComfyUI

WAN 2.1 VACE (Video All-in-One Creation and Editing) is a video generation and editing AI model that you can run …

Wan VACE ComfyUI reference-to-video tutorial

WAN 2.1 VACE (Video All-in-One Creation and Editing) is a video generation and editing model developed by the Alibaba team …

How to run LTX Video 13B on ComfyUI (image-to-video)

LTX Video is a popular local AI model known for its generation speed and low VRAM usage. The LTXV-13B model …

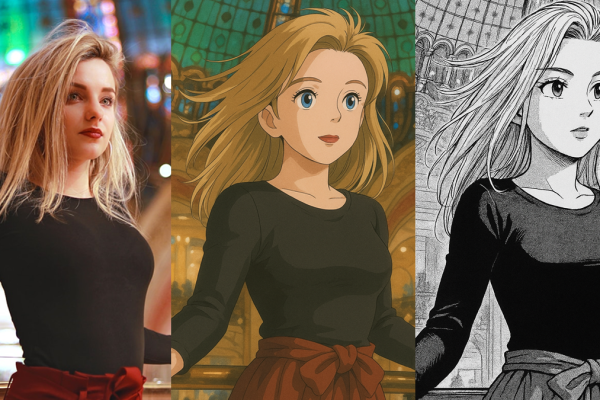

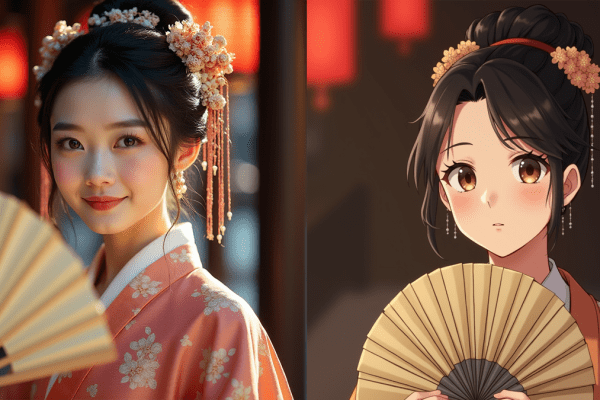

Stylize photos with ChatGPT

Do you know you can use ChatGPT to stylize photos? This free, straightforward method yields impressive results. In this tutorial, …

How to generate OmniHuman-1 lip sync video

Lip sync is notoriously tricky to get right with AI because we naturally talk with body movement. OmniHuman-1 is a …

How to create FramePack videos on Google Colab

FramePack is a video generation method that allows you to create long AI videos with limited VRAM. If you don’t …

How to create videos with Google Veo 2

You can now use Veo 2, Google’s AI-powered video generation model, on Google AI Studio. It supports text-to-video and, more …

FramePack: long AI video with low VRAM

FramePack is a video generation method that consumes low VRAM (6 GB) regardless of the video length. It supports image-to-video, …

Speeding up Hunyuan Video 3x with Teacache

Hunyuan Video is one of the highest-quality video models that can run on a local PC …

How to speed up Wan 2.1 Video with Teacache and Sage Attention

Wan 2.1 Video is a state-of-the-art AI model that you can use locally on your PC. However, it does take …

How to use Wan 2.1 LoRA to rotate and inflate characters

Wan 2.1 Video is a generative AI video model that produces high-quality video on consumer-grade computers. Remade AI, an AI …

How to use LTX Video 0.9.5 on ComfyUI

LTX Video 0.9.5 is an improved version of the LTX local video model. The model is very fast — it …

How to run Hunyuan Image-to-video on ComfyUI

The Hunyuan Video model has been a huge hit in the open-source AI community. It can generate high-quality videos from …

How to run Wan 2.1 Video on ComfyUI

Wan 2.1 Video is a series of open foundational video models. It supports a wide range of video-generation tasks. It …

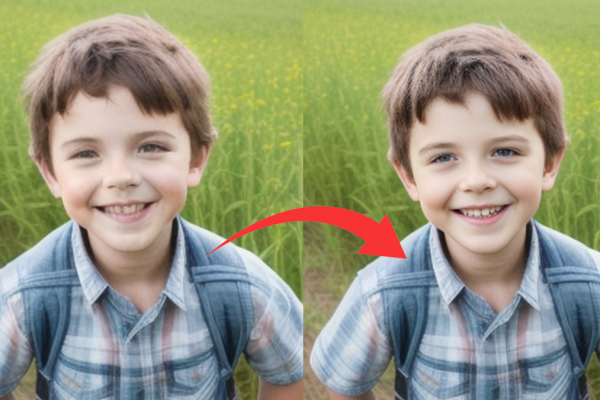

CodeFormer: Enhancing facial detail in ComfyUI

CodeFormer is a robust face restoration tool that enhances facial features, making them more realistic and detailed. Integrating CodeFormer into …

3 ways to fix Queue button missing in ComfyUI

Sometimes, the “Queue” button disappears in my ComfyUI for no reason. It may be due to glitches in the updated …

How to run Lumina Image 2.0 on ComfyUI

Lumina Image 2.0 is an open-source AI model that generates images from text descriptions. It excels in artistic styles and …

TeaCache: 2x speed up in ComfyUI

Do you wish AI models ran faster on your PC? TeaCache can speed up diffusion models with negligible changes in …

How to use Hunyuan video LoRA to create consistent characters

Low-Rank Adaptation (LoRA) has emerged as a game-changing technique for finetuning image models like Flux and Stable Diffusion. By focusing …

How to remove background in ComfyUI

Background removal is an essential tool for digital artists and graphic designers. It cuts clutter and enhances focus. You can …

How to direct Hunyuan video with an image

Hunyuan Video is a local video model which turns a text description into a video. But what if you want …

How to generate Hunyuan video on ComfyUI

Hunyuan Video is a new local and open-source video model with exceptional quality. It can generate a short video clip …

How to use image prompts with Flux model (Redux)

Images speak volumes. They express what words cannot capture, such as style and mood. That’s why the Image prompt adapter …

How to outpaint with Flux Fill model

The Flux Fill model is an excellent choice for inpainting. Do you know it works equally well for outpainting (extending …

Fast local video: LTX Video

LTX Video is a fast, local video AI model full of potential. The Diffusion Transformer (DiT) Video model supports generating …

How to use Flux.1 Fill model for inpainting

Using the standard Flux checkpoint for inpainting is not ideal. You must carefully adjust the denoising strength. Setting it too …

Local image-to-video with CogVideoX

Local AI video has come a long way since the release of the first local video model. The quality is …

How to run Mochi1 text-to-video on ComfyUI

Mochi1 is one of the best video AI models you can run locally on a PC. It turns your text …

Stable Diffusion 3.5 Medium model on ComfyUI

Stable Diffusion 3.5 Medium is an AI image model that runs on consumer-grade GPU cards. It has 2.6 billion parameters, …

How to use Flux LoRA on ComfyUI

Flux is a state-of-the-art image model. It excels in generating realistic photos and following the prompt, but some styles can …

How to install Stable Diffusion 3.5 Large model on ComfyUI

Our old friend Stability AI has released the Stable Diffusion 3.5 Large model and a faster Turbo variant. Attempting to …

Flux AI: A Beginner-Friendly Overview

Since the release of Flux.1 AI models on August 1, 2024, we have seen a flurry of activity around it …

SDXL vs Flux1.dev models comparison

SDXL and Flux1.dev are two popular local AI image models. Both have a relatively high native resolution of 1024×1024. Currently, …

How to run ComfyUI on Google Colab

ComfyUI is a popular way to run local Stable Diffusion and Flux AI image models. It is a great complement …