Wan 2.2 is a high-quality video AI model you can run locally on your computer. In this tutorial, I will cover: …

Blog

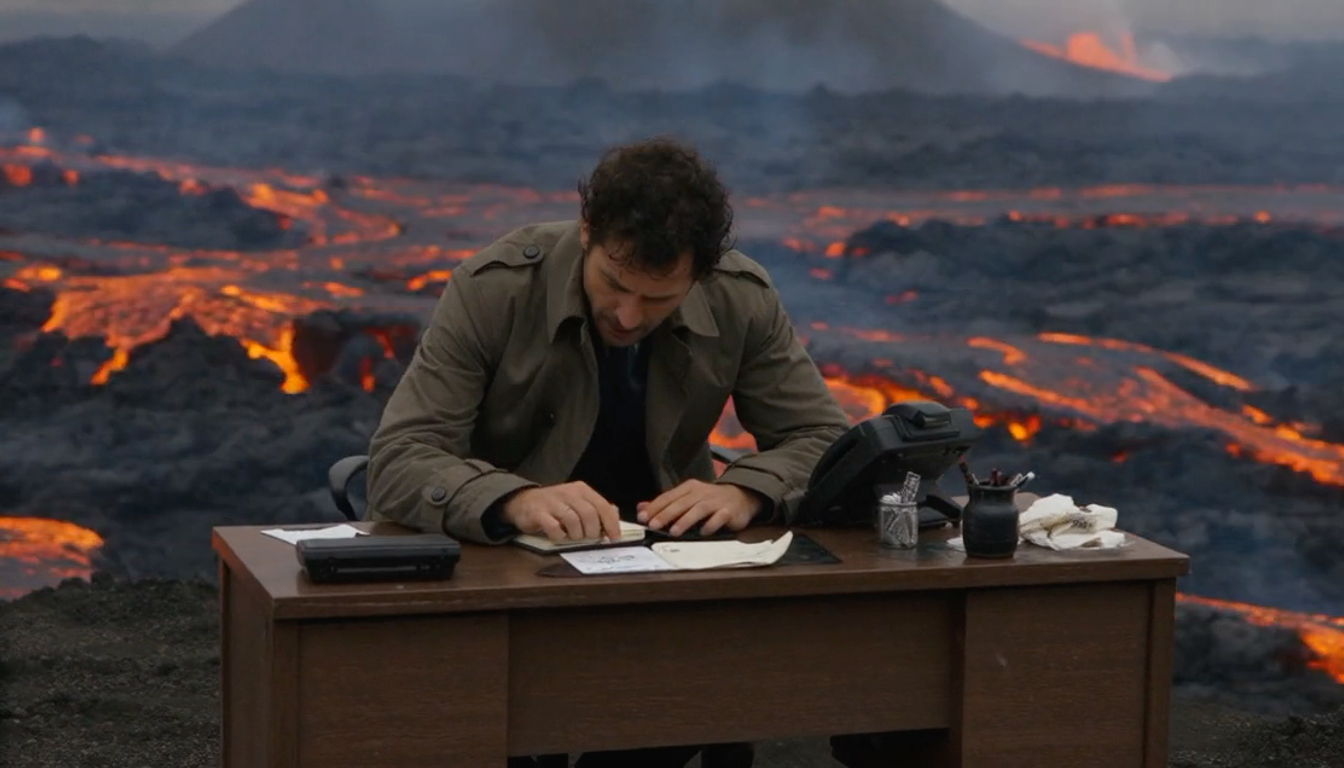

Turn an image into a video with Wan 2.2 local model

Wan 2.2 is a local video model that can turn text or images into videos. In this article, I will focus on the popular image-to-video function. The new Wan 2.2 models are surprisingly capable at generating motion and camera movements (though not necessarily at controlling them). This article will cover how to install and run the following…

Turn any image into Arcane style

Do you have a photo you want to turn into a unique animation style? Long-time member Heinz Zysset kindly shares his stylization workflow with our site readers. It turns any image into the style of the Arcane animation series. …

How to use ComfyUI API nodes

ComfyUI is known for running local image and video AI models. Recently, it added support for running proprietary closed models through APIs. At the time of writing, you can use popular models from Kling, Google Veo, OpenAI, RunwayML, and Pika, among others. In this article, I will show you how to set up and use ComfyUI API…

Cutting a gold bar

This workflow generates a fun video of cutting a gold bar with the world’s sharpest knife. You can run it locally or using a ComfyUI service. It uses Flux AI to generate a high-quality image, followed by Wan 2.1 Video for animation with Teacache speedup. You must be a member of this site to download…

Clone Your Voice Using AI (ComfyUI)

Have you ever wondered how deepfakes of celebrities like MrBeast manage to clone their voices? Their creators use voice cloners like the F5-TTS model, and in this article, you’ll learn that it’s actually not that hard! How it works: …

How to run Wan VACE video-to-video in ComfyUI

Wan 2.1 VACE (Video All-in-One Creation and Editing) is a video generation and editing AI model that you can run locally on your computer. It unifies text-to-video, reference-to-video (reference-guided generation), video-to-video (pose and depth control), inpainting, and outpainting under a single framework. VACE supports the following core functions: This tutorial covers the Wan VACE Video-to-Video (V2V)…

Wan VACE ComfyUI reference-to-video tutorial

Wan 2.1 VACE (Video All-in-One Creation and Editing) is a video generation and editing model developed by the Alibaba team. It unifies text-to-video, reference-to-video (reference-guided generation), video-to-video (pose and depth control), inpainting, and outpainting under a single framework. VACE supports the following core functions: You can use the Wan VACE model in ComfyUI with the…

How to run LTX Video 13B on ComfyUI (image-to-video)

LTX Video is a popular local AI model known for its generation speed and low VRAM usage. The LTXV-13B model has 13 billion parameters, a 6-fold increase over the previous 2B model. This translates to better details, prompt adherence, and more coherent videos. In this tutorial, I will show you how to install and run…

Flux-Wan 2.1 four-clip movie (ComfyUI)

This workflow generates four video clips and combines them into a single video. To improve the quality and control of each clip, the initial frame is generated with the Flux AI image model, followed by Wan 2.1 Video with Teacache speed up. You can run it locally or using a ComfyUI service. You must be…