Tutorials

How to use ComfyUI API nodes

ComfyUI is known for running local image and video AI models. Recently, it added support for running proprietary closed models …

Clone Your Voice Using AI (ComfyUI)

Have you ever wondered how deepfakes of celebrities like MrBeast clone their voices? Well, those …

How to run Wan VACE video-to-video in ComfyUI

Wan 2.1 VACE (Video All-in-One Creation and Editing) is a video generation and editing AI model that you can run …

Wan VACE ComfyUI reference-to-video tutorial

Wan 2.1 VACE (Video All-in-One Creation and Editing) is a video generation and editing model developed by the Alibaba team …

How to run LTX Video 13B on ComfyUI (image-to-video)

LTX Video is a popular local AI model known for its generation speed and low VRAM usage. The LTXV-13B model …

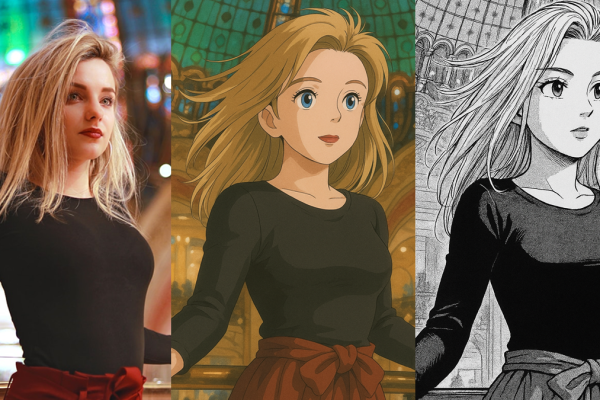

Stylize photos with ChatGPT

Did you know you can use ChatGPT to stylize photos? This free, straightforward method yields impressive results. In this tutorial, …

How to generate OmniHuman-1 lip sync video

Lip sync is notoriously tricky to get right with AI because we naturally talk with body movement. OmniHuman-1 is a …

How to create FramePack videos on Google Colab

FramePack is a video generation method that allows you to create long AI videos with limited VRAM. If you don’t …

How to create videos with Google Veo 2

You can now use Veo 2, Google’s AI-powered video generation model, on Google AI Studio. It supports text-to-video and, more …

FramePack: long AI video with low VRAM

FramePack is a video generation method that uses little VRAM (6 GB) regardless of the video length. It supports image-to-video, …

Speeding up Hunyuan Video 3x with TeaCache

Hunyuan Video is one of the highest-quality video models that can be run on a local PC …

How to speed up Wan 2.1 Video with TeaCache and Sage Attention

Wan 2.1 Video is a state-of-the-art AI model that you can use locally on your PC. However, it does take …

How to use Wan 2.1 LoRA to rotate and inflate characters

Wan 2.1 Video is a generative AI video model that produces high-quality video on consumer-grade computers. Remade AI, an AI …

How to use LTX Video 0.9.5 on ComfyUI

LTX Video 0.9.5 is an improved version of the LTX local video model. The model is very fast — it …

How to run Hunyuan Image-to-video on ComfyUI

The Hunyuan Video model has been a huge hit in the open-source AI community. It can generate high-quality videos from …

How to run Wan 2.1 Video on ComfyUI

Wan 2.1 Video is a series of open foundational video models. It supports a wide range of video-generation tasks. It …

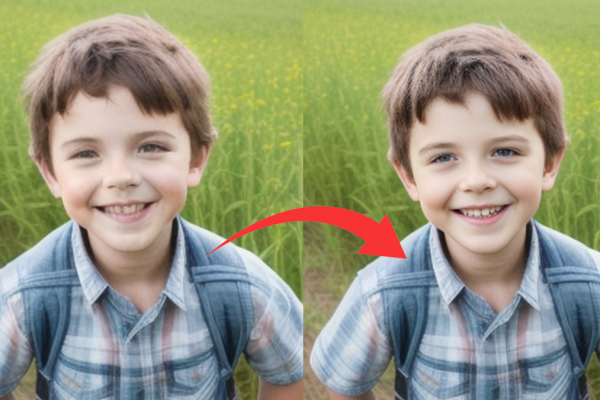

CodeFormer: Enhancing facial detail in ComfyUI

CodeFormer is a robust face restoration tool that enhances facial features, making them more realistic and detailed. Integrating CodeFormer into …

3 ways to fix Queue button missing in ComfyUI

Sometimes, the “Queue” button disappears in my ComfyUI for no reason. It may be due to glitches in the updated …